Hearing other people speak a foreign language can be dizzying. How can they speak so fast? Why don’t they pause between words, like we do? Actually, foreign-language speakers do pause. But despite how it sounds to us in our native tongue, spoken language is not neatly broken up by silence between words.

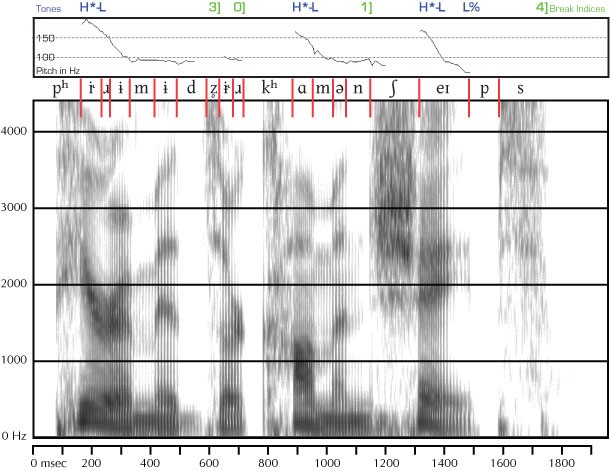

Not convinced? Take a look at the figure below, which is a display of auditory speech, called a spectrogram. A spectrogram displays frequency on the Y-axis and time along the X-axis. Darker bands indicate greater intensity of the sound at that frequency; whiter areas indicate silence. See if you can figure out where the word breaks might be in this stream.

Here’s a hint: there are four English words. (Click through to this link for the answer.)

Clearly, the periods of silence in English speech do not correspond to the breaks between words. This means that when we hear an unfamiliar language, we cannot parse words by listening for the silent periods. But does this mean that information about word breaks isn’t in the sound stream at all?

Take a look at the video below, which uses common English phonemes but scrambles them together. It still sounds like you are hearing words, even though they (and the sentences they form) have no meaning:

[youtube https://www.youtube.com/watch?v=Vt4Dfa4fOEY]

A recent paper in Memory and Cognition by Mikhail Ordin and colleagues highlights two aspects of the structure of spoken language, which may allow people to segment words directly from the information contained in speech. One aspect—transition probability—is highly consistent across languages. The second—final lengthening—may differ across languages.

Transition probability is the likelihood that one syllable will occur immediately after another. In any language, syllable pairs tend to occur next to each other more frequently within words than between words. For example, the words “salad” and “cucumber” may be easily parsed because the syllables “sa” and “lad” are more likely to occur together in the same word than “lad” and “cu,” leading to the interpretation “salad cucumber” rather than “sal adcucumber.” After a lot of exposure to a language, these transition probabilities can be learned implicitly.

Final lengthening is the extension in duration of the final syllable in a phrase. That is, the last sound in a phrase will be elongated, providing the listener with an auditory cue that the phrase has ended.

Although speakers of all languages may do this, Ordin and colleagues point out that duration is also a cue to lexical stress. Allow Mike Myers to demonstrate.

[youtube https://www.youtube.com/watch?v=vpqaBA_k0Ew]

As you can tell, emPHAsis lengthens the stressed syllable, too, which might interfere with final lengthening.

While all languages have higher transition probabilities within words than between them, different languages tend to stress different syllables. In their study, Ordin and colleagues selected speakers of four languages that vary in where stress tends to fall within words. German tends to place stress on the first syllable; Basque varies a lot; and Spanish and Italian both tend to place stress on the penultimate syllable (think lasagna or burrito).

The researchers predicted that when learning a new language, this penultimate lengthening in Spanish and Italian would interfere with transition probabilities. To test this, the researchers created a brand new “language,” consisting of six 3-syllable words, each made up of a unique combination of syllables (for example, “vaputa” and “dubipo”). Individual syllables were not repeated across words. This meant that every time you heard a “va,” it was immediately followed by a “pu.” On the other hand, every time you heard a “ta,” it only had a 16% chance of being followed by a “du,” because the other 84% of the time, it was followed by another word (which started with a different syllable). So, the transition probability within words was 100% and much lower between words.
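To make the arithmetic concrete, here is a minimal Python sketch that builds a stream from a six-word lexicon and counts how often each syllable follows another. Only “vaputa,” “dubipo,” and “bolatu” come from this post; the other three words are invented placeholders. The stream also draws words at random and allows repeats, which is an assumption and not necessarily how the researchers built their streams.

```python
import random
from collections import Counter

# "vaputa", "dubipo" and "bolatu" appear in the post.
# The last three words are hypothetical placeholders.
WORDS = ["vaputa", "dubipo", "bolatu", "kemino", "sigefa", "lorizu"]


def syllables(word):
    """Split a word into consecutive two-letter (consonant-vowel) syllables."""
    return [word[i:i + 2] for i in range(0, len(word), 2)]


def transition_probabilities(stream):
    """Return P(next syllable | current syllable) for each adjacent pair."""
    pair_counts = Counter(zip(stream, stream[1:]))
    first_counts = Counter(stream[:-1])
    return {pair: n / first_counts[pair[0]] for pair, n in pair_counts.items()}


# Assumption: words are drawn uniformly at random, repeats allowed.
rng = random.Random(0)
stream = [s for _ in range(600) for s in syllables(rng.choice(WORDS))]
tp = transition_probabilities(stream)

print("within word  va -> pu:", tp[("va", "pu")])
print("between words ta -> du:", round(tp[("ta", "du")], 2))
```

Under these assumptions the within-word pair always comes out at 1.0, while the between-word pair should land somewhere near one in six, in line with the figure quoted above.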

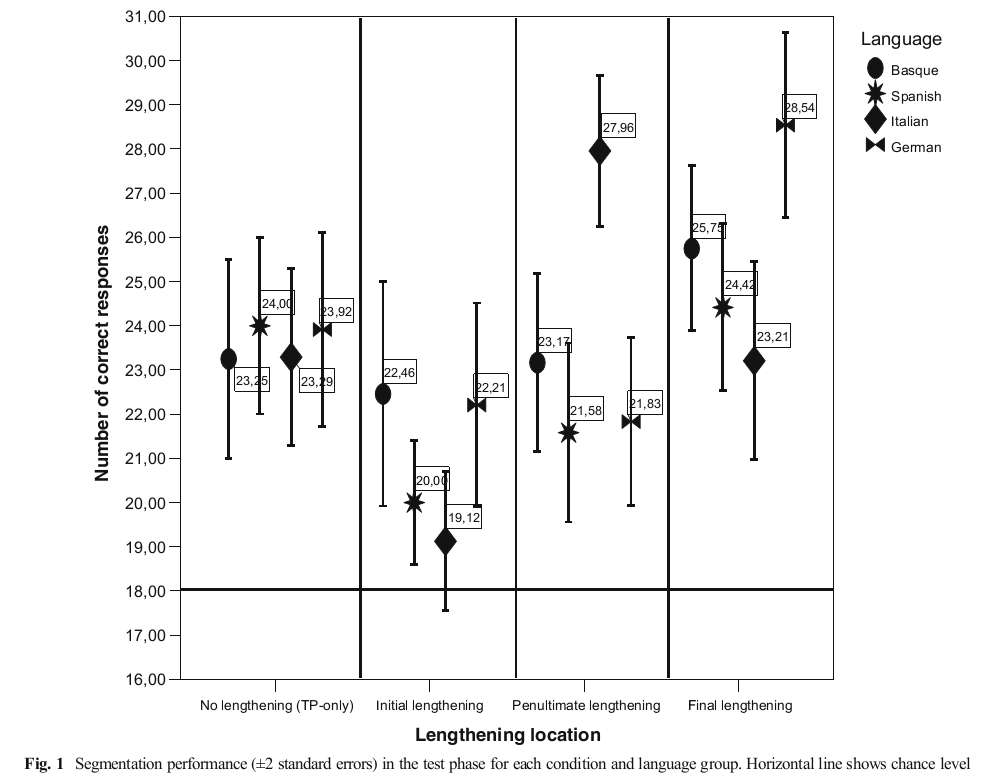

Participants listened to streams of these words, then performed a task in which they had to pick which item was a “word” in the language they had just heard. With transition probability alone, speakers of all four languages learned the words above chance, answering correctly about 65% of the time (see “No lengthening” in the figure below). Clearly, transition probabilities are sufficient for participants to pick up on word divisions rather quickly.

How did this interact with emphasis? To test this, participants learned another three “languages.” These languages now had one of the three syllables lengthened for each word. Recall that Spanish and Italian speakers expect the emphasis to fall on the penultimate syllable in their native languages. If this cue interfered with the information from the transition probability, they should be impaired when other syllables are lengthened, but helped when the penultimate syllable is lengthened.

For Italians, at least, this is what the researchers found (in the figure below, note that the diamonds are lower for Initial Lengthening, but higher for Penultimate Lengthening). In other words, the emphasis on the non-penultimate syllable (“vapuTA dubiPO bolaTU”) was telling the Italian speakers “va puTAdu biPObo,” which violated the transition probabilities. The stressed syllable interfered with transition probabilities to offer a different parsing possibility.
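The competing parse can be sketched in a few lines of Python. This is only an illustration of the heuristic described above, not the researchers' model: it assumes a listener who treats every lengthened syllable (written in uppercase) as the penultimate syllable of a word, so a new word is taken to begin one syllable before each lengthened one. The function name is made up for this example.

```python
def parse_by_penultimate_stress(stream):
    """Group syllables so that each lengthened (uppercase) syllable
    ends up second in its group, with a word starting just before it."""
    words, current = [], []
    for i, syl in enumerate(stream):
        next_is_lengthened = i + 1 < len(stream) and stream[i + 1].isupper()
        # A new word begins one syllable before each lengthened syllable.
        if next_is_lengthened and current:
            words.append("".join(current))
            current = []
        current.append(syl)
    if current:
        words.append("".join(current))
    return words


# The stream "vapuTA dubiPO bolaTU" with final lengthening, spaces removed.
stream = ["va", "pu", "TA", "du", "bi", "PO", "bo", "la", "TU"]
print(parse_by_penultimate_stress(stream))
```

For this stream the function returns the groups “va,” “puTAdu,” “biPObo,” and a leftover “laTU,” which matches the mis-parse described above and cuts straight across the boundaries that the transition probabilities point to.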

Speech is complex. That is part of the reason why natural language processors, like Siri, have a hard time understanding you. Research on how different languages solve this problem can reveal what might trip up natural language processors for speakers of different languages. It also helps explain a natural strategy we have when interacting with non-English speakers: speaking. very. slowly. We insert pauses between words because, in ordinary speech, they aren’t actually there.

One remarkable thing about language to me is how many different ways the problem of communication has been solved by humans. We hear language, but speech puts no audible spaces between words, the units of meaning. Instead, the brain must infer them. We see language, in writing, and can not only discriminate barely perceptually different visual symbols, but process them instantaneously, recover the auditory code to which they refer, and ultimately derive meaning. At least in written language, the spaces between words are clearly demarcated. These are, of course, generalizations. Studies like the one by Ordin and colleagues reiterate the importance of considering the idiosyncrasies of individual languages while uncovering the universal properties.

Article focused on in this post:

Ordin, M., Polyanskaya, L., Laka, I., & Nespor, M. (2017). Cross-linguistic differences in the use of durational cues for the segmentation of a novel language. Memory & Cognition. DOI: 10.3758/s13421-017-0700-9.
