When you sit down at the end of a long day and turn on Netflix, do you turn the subtitles on? If so, you are in good company: many viewers prefer watching television and movies with closed captions. In 2006, the Office of Communications in the UK found that 7.5 million people (18% of the UK population) used subtitles, and that number has likely grown, in the UK and around the world, since then.

Many viewers feel that subtitles improve their viewing enjoyment because they make the dialogue clearer. Personally, subtitles were the saving grace that allowed me to finish the Game of Thrones series. Subtitles also expand the reach of filmmakers and give viewers easy access to foreign films. In 2020, the South Korean film Parasite won the Academy Award for Best Picture, and director Bong Joon Ho famously said:

“Once you overcome the 1-inch-tall barrier of subtitles, you will be introduced to so many more amazing films.”

Now we see that the “1-inch-tall barrier of subtitles” is contributing to science, as well! In a recent study published in the Psychonomic Society journal Behavior Research Methods, van Paridon and Thompson used subtitles to advance statistical models in psycholinguistic research. This research focuses on creating models that implicitly learn lexical relationships between words.

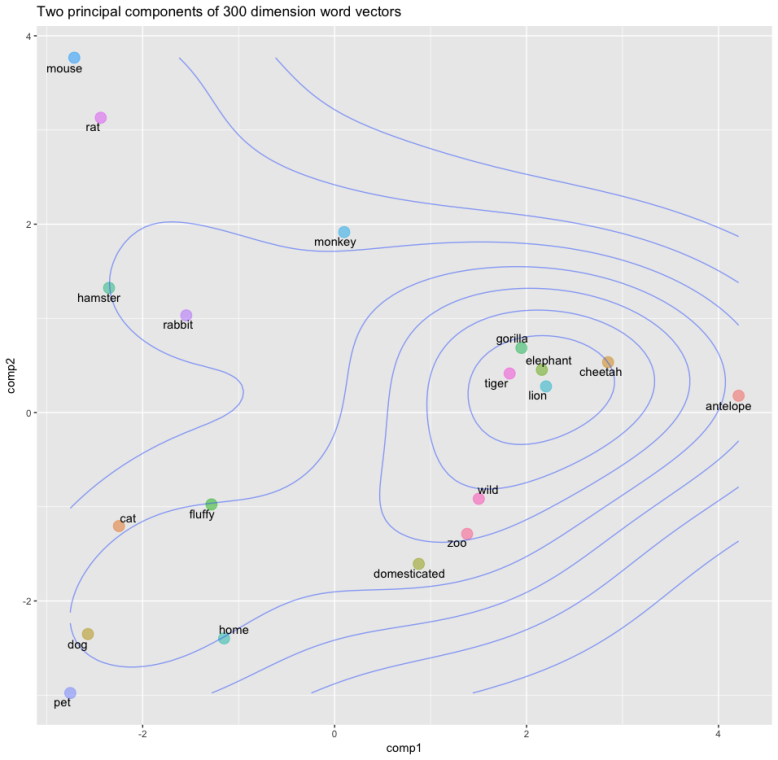

Statistical models can use word vectors, also called embeddings, to provide a numerical representation of the meaning of a word. Vectors treat words as having multidimensional meanings, and each number within the vector represents some dimension of the word’s meaning. For example, a dog can be characterized as an animal, a pet, and living in a home. A vector would give weight to each of these characteristics to represent meaning. Based on these characteristics, a dog would have a vector similar to that of a cat, but less similar to that of an elephant or a lion.
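To make the dog, cat, and elephant comparison concrete, here is a minimal sketch in Python. The three dimensions and all of the weights are made up for illustration; real embeddings have hundreds of dimensions that are learned from text and are not individually labeled. Cosine similarity is one common way to compare two vectors, and is used here as an assumption rather than as the method from the paper.

```python
import math

# Hypothetical, hand-picked weights for three dimensions:
# [is an animal, is kept as a pet, lives in a home]
vectors = {
    "dog": [1.0, 0.9, 0.9],
    "cat": [1.0, 0.9, 0.8],
    "elephant": [1.0, 0.0, 0.0],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


dog_cat = cosine_similarity(vectors["dog"], vectors["cat"])
dog_elephant = cosine_similarity(vectors["dog"], vectors["elephant"])

print(f"dog vs. cat:      {dog_cat:.3f}")
print(f"dog vs. elephant: {dog_elephant:.3f}")
```

With these toy weights, the dog and cat vectors point in nearly the same direction, so their similarity is higher than the similarity between dog and elephant.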

Given vector representations, we can train models to determine how similar words are to each other. Words can then be laid out in vector space; words that are close to each other have similar meanings, and words that are far apart are less like each other. Some word pairs with similar vectors might include: Microsoft and Windows; Zoom and quarantine; Netflix and chill.

So how are word embeddings generated, you ask? Well, one solution is to use a popular neural network model called word2vec. This model is trained on a large corpus of text, and it estimates the probability that one word will appear close to another word. If two words tend to appear near each other, or near the same other words, they likely have related meanings. This philosophy comes from the old adage by J.R. Firth:

“You shall know a word by the company it keeps.”
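As a rough illustration of what “the company it keeps” means in practice, the sketch below collects (target, context) word pairs from a sliding window over a sentence. Pairs like these are the kind of input a word2vec-style model learns from; the sketch covers only this pair-extraction step, not the neural network training. The function name `context_pairs` and the window size of 2 are assumptions chosen for the example.

```python
def context_pairs(tokens, window=2):
    """Pair every word with the words up to `window` positions away from it."""
    pairs = []
    for i, target in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((target, tokens[j]))
    return pairs


sentence = "you shall know a word by the company it keeps".split()
pairs = context_pairs(sentence, window=2)

# Show the company that "word" keeps in this sentence.
company = [context for target, context in pairs if target == "word"]
print(company)
```

A model trained on millions of such pairs adjusts each word’s vector so that words sharing similar company end up close together in vector space.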

Training this model requires a large corpus, and a frequent choice is the massive amount of text available on Wikipedia. Wikipedia offers a lot of text in many different languages, and it covers more obscure words and relationships. The trouble with using Wikipedia for this purpose is that it is an encyclopedia, and encyclopedias do not reflect the way humans talk or learn language.

Now let’s return our focus to television and film subtitles. Subtitles offer transcriptions of pseudo-conversations (pseudo because the conversations are often scripted), which are much more representative of the human linguistic experience than an encyclopedia. Furthermore, researchers have access to a large number of subtitles from the OpenSubtitles.org corpus.

In their recent paper, van Paridon and Thompson describe how they used this subtitle corpus to train word embeddings, and they have made these embeddings available in 55 languages in their subs2vec Python package. As part of this work, they compared the output of three separate models:

- One trained on Wikipedia (wiki model)

- One trained on OpenSubtitles (subs model)

- One trained on a combination of both (wiki+subs model)

They found that predictive accuracy for lexical norms was similar across languages for the wiki and subs models, except that the subs model performed better for words that were taboo or offensive. The wiki+subs model performed marginally better than either single-corpus model.

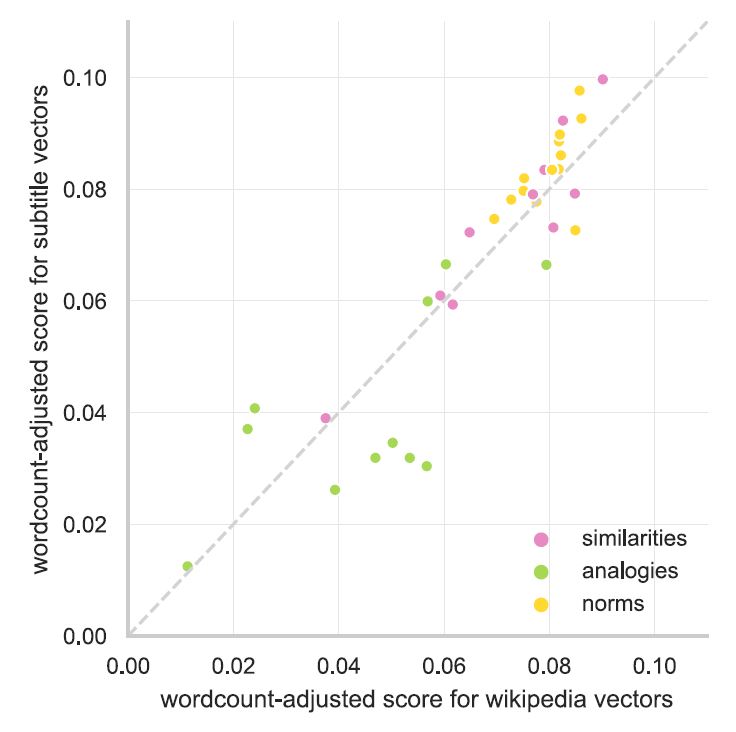

They tested these models on three tasks: semantic similarities, solving analogies, and lexical norm prediction. The size of each corpus differed based on how much text was available in each language, so they adjusted their scores based on corpus word count. In the accompanying graph, they plotted the mean evaluation score for each language and task. Points above the diagonal line indicate better performance for subtitles, and points below the line indicate better performance for Wikipedia. Overall, the points are fairly close to the line, demonstrating only minor differences between the models.

While the subtitle data capture a more natural depiction of human language, the subs model generally performed about as well as the wiki model. These datasets represent separate but overlapping semantic spaces, so it makes sense that the combined model had slightly better performance.

With the introduction of the subs2vec package, this paper opens the door for many new research opportunities. The OpenSubtitles corpus is markedly larger than the Wikipedia corpus in some languages, so the word embeddings provided by subs2vec allow researchers to explore less-studied languages. Furthermore, this new collection of word embeddings can advance statistical models used in deep learning and natural language processing. Thanks to these recent advancements, the fields of psychology and linguistics have now overcome the 1-inch-tall barrier of subtitles.

Psychonomic Society article featured in this post:

van Paridon, J., & Thompson, B. (2020). subs2vec: Word embeddings from subtitles in 55 languages. Behavior Research Methods. https://doi.org/10.3758/s13428-020-01406-3