Few areas of psychology research are as controversial as ‘brain training’. For the last 10 years, we have seen an influx of apps and games that purport to improve users’ cognitive capabilities.

The appeal is simple. Play a game, get smarter.

The controversy, as we’ve covered here, is that unbiased research on whether brain training games actually work is decidedly mixed. One issue is how to define the cognitive constructs we would like to train. A second issue is determining what constitutes effective brain training.

In psychology parlance, brain training has typically focused on improving working memory. Working memory is the (set of) cognitive abilities that allow us to hold information in mind and manipulate that information. Listening to a phone number as you type it in, remembering the items you need at the store, and imagining your friend’s face can all involve working memory. These examples are very different from one another, which illustrates how difficult it is to capture an individual’s working memory capacity.

Ideally, a brain training game would yield improvement on the games themselves, on related games, and on unrelated cognitive tasks. As an analogy, consider weight training. A weight training regimen would be of little use if doing bench presses didn’t improve the weight you could bench press or the number of reps you could do. It would be equally bad if bench pressing didn’t improve your pushups. Most likely, you’re bench pressing to improve some other task that depends on arm strength, like throwing a football.

Psychologists refer to improvements on related tasks (pushups) as near transfer and improvements on less related tasks (throwing a football) as far transfer. Usually, training works by boosting the underlying capacities of a system, which in turn aids performance on many other tasks. You do biceps curls, and thereby improve your strength and endurance for other things.

Assessing whether brain training games work runs into exactly this problem. Does brain training improve the task you’re training on, other sorts of cognitive assessments, or cognition in general? A recent study by Anna Soveri and colleagues in Psychonomic Bulletin & Review reported a meta-analysis examining whether brain training studies show transfer.

This meta-analysis provides a novel approach to examining the brain training literature by being very strict about what is considered transfer, and by making predictions about how training one aspect of working memory should transfer to related aspects of cognition.

Soveri and colleagues focus on training in the n-back task, which we have also discussed here earlier. In the n-back task, a participant views a continuous stream of stimuli (they could be numbers, letters, images, spoken words, or almost anything). For each stimulus, the participant has to decide whether it matches the stimulus presented N positions earlier. If the stimuli were numbers, in a 2-back task the stream “1 4 2 4 4” would require the correct response series “No No No Yes No”. The sole “Yes” falls on the fourth item because a 4 also appeared two positions earlier. For a 3-back task, the correct response series for the same stream would be “No No No No Yes”. (Try for yourself here: good luck).
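To make the scoring rule concrete, here is a minimal Python sketch that computes the correct response for each item in an n-back stream. The function name `nback_responses` is a hypothetical helper of our own, and it assumes stimuli can be compared with simple equality; it only scores the task and does not present stimuli or time responses.

```python
def nback_responses(stream, n):
    """Return the correct "Yes"/"No" response for each item in an n-back stream.

    An item is a target ("Yes") when it matches the item exactly n positions
    earlier; the first n items can never be targets.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    responses = []
    for i, item in enumerate(stream):
        # Compare with the item n positions back, if one exists.
        is_target = i >= n and stream[i - n] == item
        responses.append("Yes" if is_target else "No")
    return responses


stream = [1, 4, 2, 4, 4]
print(nback_responses(stream, 2))  # ['No', 'No', 'No', 'Yes', 'No']
print(nback_responses(stream, 3))  # ['No', 'No', 'No', 'No', 'Yes']
```

Both outputs match the 2-back and 3-back examples in the text; only the value of `n` changes, which is why the task can be made harder simply by increasing N.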

In previous meta-analyses of working memory training, researchers were somewhat lax about what counted as near transfer and what counted as far transfer. Soveri and colleagues took a more conservative approach, counting only untrained n-back tasks as near transfer measures and keeping the different far transfer measures separate from each other. That way, potential effects on fluid intelligence or on other working memory measures could be isolated. Previous meta-analyses also did not restrict their samples to a single kind of training; including other types of brain training could muddle the transfer results.

Soveri and colleagues also used a methodological technique called multi-level modeling (a useful but technical description is here: PDF), which allows a meta-analysis to incorporate multiple effects from the same paper. Typically, a meta-analysis is hamstrung because several effect sizes are often nested within a single paper, and those effects almost always come from studies run on similar (if not identical) samples by the same researchers. This is a problem because effects from the same paper are correlated in ways a standard analysis does not account for, so including several of them can bias the overall result. The usual workaround, including only one effect per paper, drastically reduces the power of the meta-analysis. Multi-level modeling accounts for correlations among effects from the same study, which gives the approach taken by Soveri and colleagues much more statistical power than previous meta-analytic attempts.
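Why correlated effects from one paper cause trouble can be sketched with a little arithmetic. This is not the multi-level model Soveri and colleagues fit; it is a simplified illustration that assumes every effect from a paper has the same sampling variance v and every pair of effects is correlated at rho. Under those assumptions, the variance of the paper’s average effect is v(1 + (k - 1)rho)/k, whereas treating the k effects as independent gives v/k. The helper names and the numbers in the usage lines are hypothetical.

```python
def variance_of_mean(v, k, rho):
    """Sampling variance of the mean of k effect sizes from one paper.

    Illustrative assumptions: each effect has sampling variance v, and
    every pair of effects is correlated at rho (0 <= rho <= 1).
    Var(mean) = v * (1 + (k - 1) * rho) / k
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    if not 0.0 <= rho <= 1.0:
        raise ValueError("rho must be between 0 and 1 in this sketch")
    return v * (1 + (k - 1) * rho) / k


def effective_k(k, rho):
    """Number of independent effects carrying the same information as k correlated ones."""
    return k / (1 + (k - 1) * rho)


# Hypothetical paper: 4 effect sizes, each with sampling variance 0.04.
naive = variance_of_mean(0.04, 4, 0.0)       # pretends the effects are independent
correlated = variance_of_mean(0.04, 4, 0.6)  # effects share a sample, so rho = 0.6
print(f"{naive:.3f}")                 # 0.010
print(f"{correlated:.3f}")            # 0.028
print(f"{effective_k(4, 0.6):.2f}")   # 1.43
```

In this toy case, treating the four correlated effects as independent makes the paper look 2.8 times more precise than it is (0.010 versus 0.028), as if it contributed four independent effects instead of roughly 1.4. Multi-level models try to model this within-paper dependence rather than ignoring it or discarding all but one effect.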

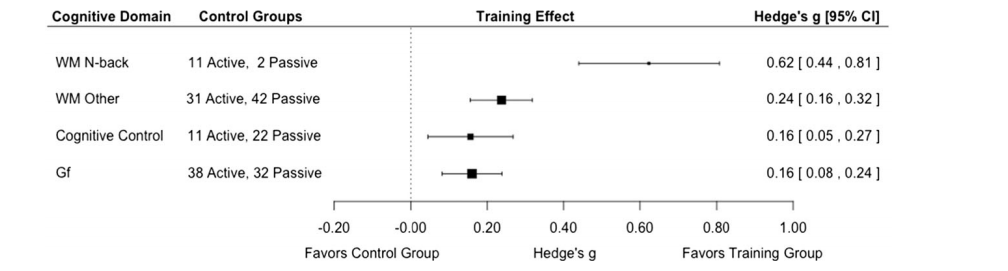

The main findings are displayed in the figure above. Soveri and colleagues found that training on the n-back task had an incredibly large effect on performance on other, untrained n-back tasks (the first row in the figure). So far, so good: bench pressing improves bench pressing!

The other rows show the small effects of training on working memory measures other than the n-back, on measures of cognitive control (like the Stroop task), and on fluid intelligence (Gf). Interestingly, the effect on far transfer measures like fluid intelligence is no smaller than the effect on other working memory measures. As Soveri and colleagues point out, this suggests that trainees are learning strategies specific to the task they practiced rather than strengthening the underlying components of their working memory. People aren’t getting stronger; they’re learning how to bench press effectively.

There are reasons these studies are controversial. Enhancing cognition is an important goal, which drives some to pills. Research on the effectiveness of training cognitive abilities is vital. But some brain training game creators have gone too far with too little data. The Federal Trade Commission has already fined one of the top brain training companies, and leading psychologists have spoken out against the industry at large.

Meta-analyses like this one are particularly important: they break down generalized statements like “brain training enhances cognition” into testable parts. They show where training helps, and also where it might not. So play on your phone if you like, but don’t mistake it for a general intelligence panacea.

Reference for the article discussed in this post:

Soveri, A., Antfolk, J., Karlsson, L., Salo, B., & Laine, M. (2017). Working memory training revisited: A multi-level meta-analysis of n-back training studies. Psychonomic Bulletin & Review. DOI: 10.3758/s13423-016-1217-0.