When I first dove into research at my current laboratory, I inherited a grab bag of R code snippets, shell scripts, tasks, paradigms, and measures. I’m sure I am not alone in this experience. Using existing tools and frameworks in research is common practice—it’s often more efficient and can yield better outcomes than building everything from scratch. However, what happens when these inherited resources fall short?

While many lab- or field-specific standards serve their intended purposes, using them without question may inadvertently limit what we can learn from them. Heesu (Ally) Kim et al. (2024) make this exact point in a recently published Behavior Research Methods paper.

“Our science can only be as good as our measures.”

They urge us all to reconsider what truly constitutes a “good” measure in cognitive science.

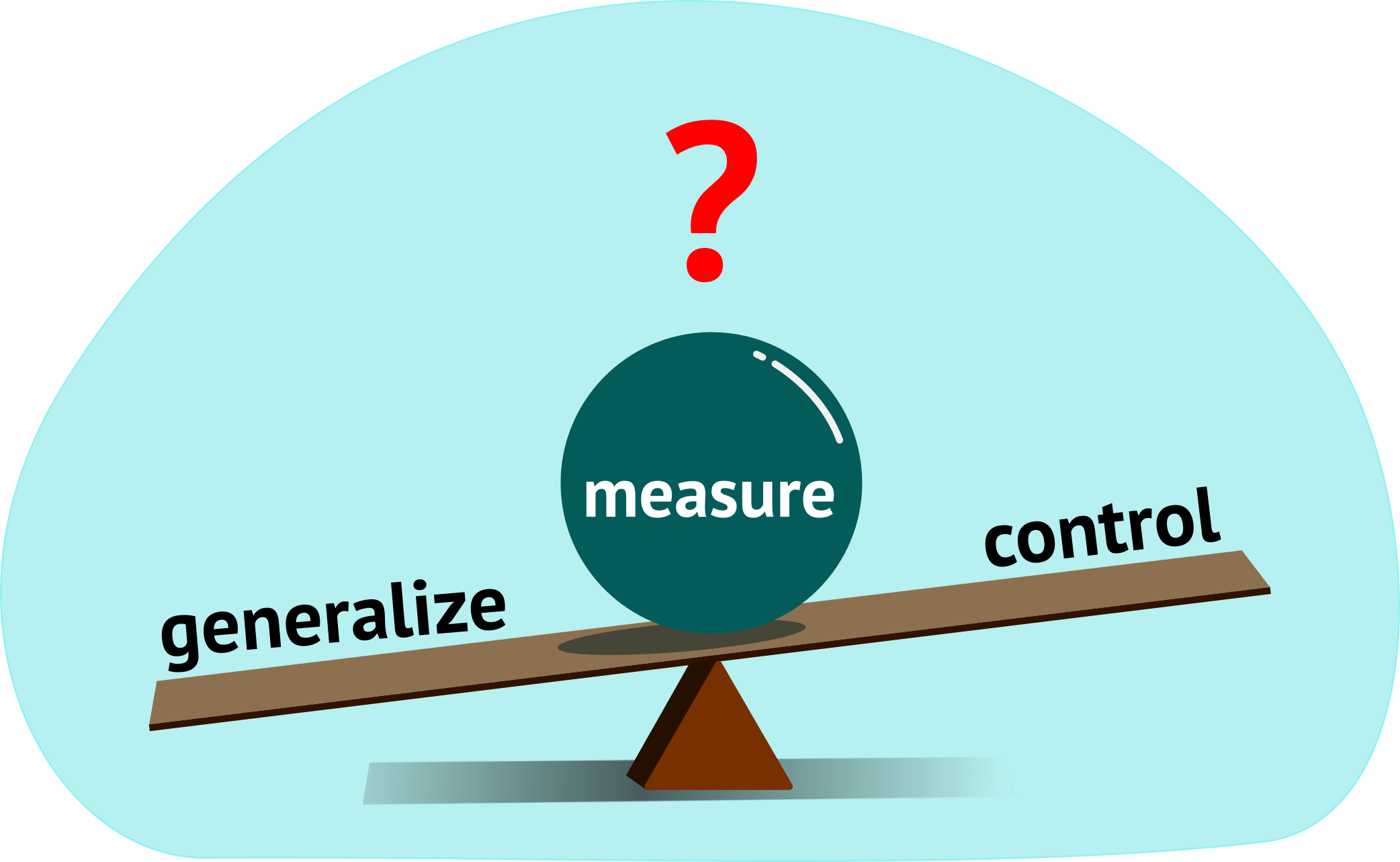

In the cognitive sciences, there’s a prevailing belief in an inherent tradeoff between control and generalizability. Conventional wisdom suggests that loosening control over a myriad of variables weakens the consistency (i.e., reliability) and validity (i.e., confidence that it measures what it’s intended to) of a tool or task, both of which are critical to its effectiveness. Unfortunately, tightly controlling the stimuli, experimental conditions, and participant demographics often limits our ability to generalize our findings. This assumed empirical teeter-totter forces researchers to balance experimental control and generalizability as they design and use various cognitive tools.

However, this balancing act may be less precarious than we think. Luckily, the notion that increased variation always leads to worse validity and reliability is testable, which prompts an intriguing question: Can a tool that prioritizes inclusivity and representation, and therefore has more variation in its materials, perform comparably to a less representative but widely used tool?

Heesu (Ally) Kim, Jasmine Kaduthodil, Roger Strong, Sarah Cohan, Laura Germine, and Jeremy Wilmer (pictured above) present the newly minted Multiracial Reading the Mind in the Eyes Test (MRMET), an updated version of the widely cited Reading the Mind in the Eyes Test (RMET). The updated test prioritizes inclusivity across multiple dimensions, including race, gender, and vocabulary level. In the recently published Behavior Research Methods paper, comparisons between the new test (MRMET), the existing test (RMET), and other cognitive measures show that the MRMET meets or exceeds the standards set by its predecessor. These findings demonstrate that boosting the inclusivity of test materials does not compromise their reliability or validity.

About the new test, the authors wrote,

“[The MRMET] provides a touchstone for working toward, and demanding, greater inclusivity in scientific measures of human behavior more broadly.”

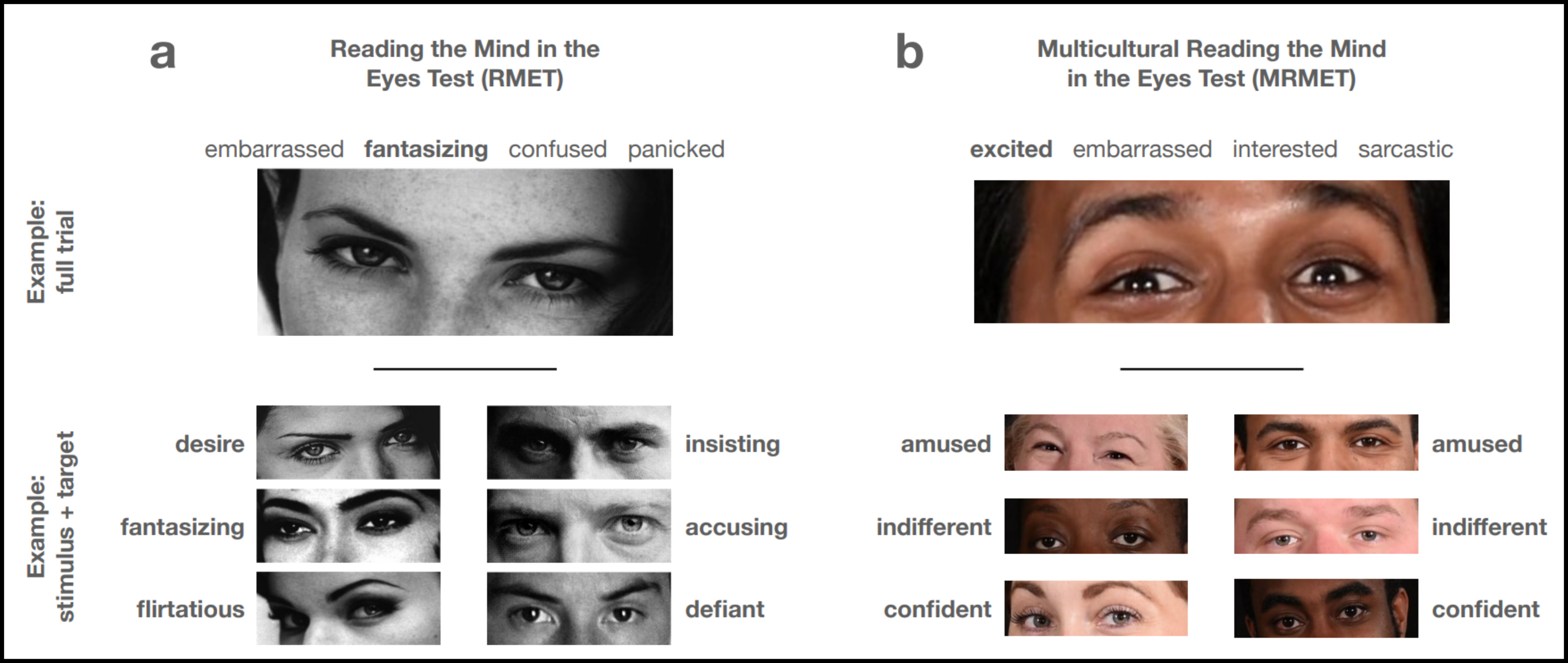

The original Reading the Mind in the Eyes Test evaluates a person’s ability to interpret facial expressions and engage in ‘theory of mind,’ or the ability to think about another person’s thoughts, perspectives, emotions, and goals. This ability to read people and their inner states is important for effective social interactions, and thus this test is a mainstay in the fields of social cognition and autism research.

The test itself is deceptively simple. Participants are shown a rectangular cutout of a person’s face, centered on their eyes, on a screen. They are then asked to choose from a set of four options, each describing that person’s potential mental state. Options can range from ‘relaxed’ to ‘cautious about something over there.’

The original Reading the Mind in the Eyes Test has high levels of reliability and validity, and it distinguishes itself from other tests of reading facial expressions by avoiding common ceiling effects. However, it is not without its limitations. One notable drawback is its lack of diversity in facial representation: the faces are exclusively White, and the female faces wear heavy makeup. There are also gender-stereotyped target states (‘desire’ for female faces and ‘assertive’ for male faces). Additionally, the word choices for the options require a high vocabulary level, which may present a barrier for some participants. These limitations leave open questions about whether the test’s outcomes generalize to faces of diverse races and to less gender-stereotyped physical and emotional characteristics, and whether the test is valid for participants with varying vocabulary levels.

In the updated Multiracial Reading the Mind in the Eyes Test, the four aforementioned aspects were altered to be more representative and inclusive. The authors iteratively honed the materials in the new test through systematic comparison with the original test, ensuring that the new items correlated highly with the items in the RMET, an indication of validity. Once a candidate set of items was selected, they distributed the test to a diverse sample of over 8,000 participants spanning ages 12 to 89 years. They evaluated its internal reliability (how well responses on one half of the test correlate with responses on the other half), its correlation with vocabulary knowledge, and its correlation with social skills (assessed via the Autism Spectrum Communication questionnaire).
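For readers unfamiliar with split-half reliability, here is a minimal sketch of the idea in Python. It is not the authors' analysis code: the odd/even item split, the Pearson correlation, the Spearman-Brown step-up correction, and the simulated data (including the hypothetical `simulate` helper and the arbitrary choice of 40 items) are all assumptions made purely for illustration.

```python
import random
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def split_half_reliability(responses):
    """responses: one list of 0/1 item scores per participant.

    Returns (r_half, r_full): the correlation between odd-item and
    even-item half scores, and its Spearman-Brown corrected value.
    """
    odd_scores = [sum(r[0::2]) for r in responses]   # items 1, 3, 5, ...
    even_scores = [sum(r[1::2]) for r in responses]  # items 2, 4, 6, ...
    r_half = pearson(odd_scores, even_scores)
    # Spearman-Brown: estimate reliability of the full-length test.
    r_full = 2 * r_half / (1 + r_half)
    return r_half, r_full


def simulate(n_participants=500, n_items=40, seed=0):
    """Hypothetical data: each participant has a fixed chance of
    answering any item correctly (illustrative values only)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_participants):
        ability = rng.uniform(0.3, 0.95)
        data.append([1 if rng.random() < ability else 0
                     for _ in range(n_items)])
    return data


r_half, r_full = split_half_reliability(simulate())
print(f"half-test correlation: {r_half:.2f}")
print(f"Spearman-Brown corrected: {r_full:.2f}")
```

The higher the corrected value, the more consistently the two halves of the test rank the same participants, which is what "internal reliability" refers to above.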

Across the board, the new test either matched or surpassed the original RMET:

- Split-half reliability, assessed with Spearman correlations, was statistically indistinguishable between the two tests.

- The updated test correlates less strongly with vocabulary than the original test, indicating less reliance on vocabulary to perform well on the test.

- The more representative measure correlated just as strongly with the questionnaire on social skills as did the original test.

The MRMET is a much-needed update to a valuable measure and demonstrates that we can all work towards creating excellent tools, tasks, paradigms, and measures that are infused with equitable representation and inclusivity. Sometimes, that means taking a good measure and devoting the time to making it even better. You can find the MRMET materials here: https://osf.io/ahq6n/

Innovation and iteration are cornerstones of science. Representation and inclusion are critical ingredients to ensure that cognitive science truly captures the full range of human variation. After all, one of the primary goals in cognitive science is to take what we learn from our often tightly controlled research environment and explain the behavior of real people out in the world. Perhaps we should all question the standards in our field more often and ask, “Is this the best we can do?”

Featured Psychonomic Society article

Kim, H., Kaduthodil, J., Strong, R. W., Germine, L., Cohan, S., & Wilmer, J. B. (2024). Multiracial Reading the Mind in the Eyes Test (MRMET): An inclusive version of an influential measure. Behavior Research Methods. https://doi.org/10.3758/s13428-023-02323-x