Harvey, Irma, Jose, … and now Maria. Hurricanes have been wreaking havoc in the tropical and subtropical Atlantic for much of the past few months. With their power and consequences fueled by climate change, we can expect more hurricanes to be followed by the retirement of their names: when a named storm is particularly destructive and lethal, its name is “retired” by the World Meteorological Organization. There will never be another Katrina or Sandy, although there will likely be more storms of that magnitude or worse.

The accuracy of hurricane forecasting is an important factor in how lethal a storm turns out to be. Fortunately, there have been considerable improvements in forecasting accuracy: whereas in 1992 the forecasting error three days out was around 480 km (300 miles) along either side of a storm’s current trajectory, that error has since shrunk to roughly a third of that figure, according to NOAA, the National Oceanic and Atmospheric Administration.

However, even the best and most accurate forecasts of hurricanes and other storms come with a confidence envelope—that is, there is some uncertainty about a storm’s future path.

And that is where the physics of meteorology meets cognitive science: How can policy makers be provided with effective visual displays that help them make decisions about whom to evacuate and where to deploy emergency management resources?

A recent article in the Psychonomic Society’s journal Cognitive Research: Principles and Implications examined the utility of different types of visual displays for hurricane forecasts. Researchers Lace Padilla, Ian Ruginski, and Sarah Creem-Regehr were particularly concerned with the differences in efficacy between ensemble displays and summary displays.

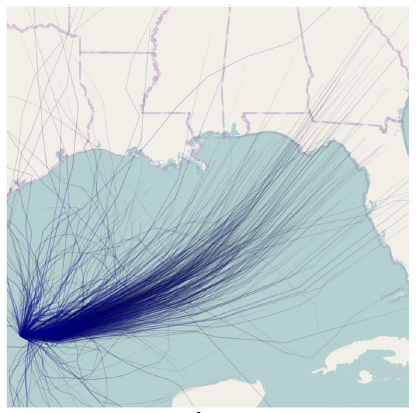

In an ensemble display, multiple predictions (or “ensemble members”) are obtained and are plotted on the same graphical plane. Each ensemble member represents a different prediction, for example the output from one particular forecasting model (forecasting models differ widely in their complexity; NOAA has provided a summary here). The figure below shows a typical ensemble display, where each plotted line represents the forecast of one hurricane model.

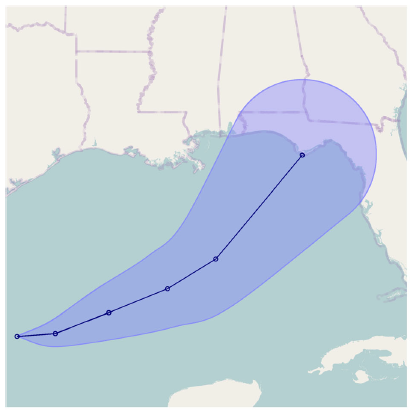

Summary displays, by contrast, plot statistical parameters that summarize the ensemble of predictions. For example, summary displays may show the mean, median, and confidence interval of the prediction ensemble. The figure below shows the summary display for the same hurricane just displayed as an ensemble.

There has been considerable debate about the relative efficacy of the two types of displays. Supporters of ensemble displays point out that the displays preserve information about the distribution of forecasts, such as bimodality or clustering, that gets lost in summary displays. Ensemble displays also preserve outlier information, such as the paths leading straight into Texas in the first figure above, that gets lost in the summary display which, in the above example, is pointing straight at Florida’s Apalachicola Bay. There are, however, also clear drawbacks to ensemble displays, foremost among them the visual crowding that arises when many ensemble members overlap, which obscures important information about the probability density of the predictions.
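
The point about distributional information being lost can be illustrated with a small sketch. The landfall longitudes below are invented for illustration and do not come from any real forecast; they simply form two clusters, so that the ensemble is bimodal.

```python
import statistics

# Hypothetical landfall longitudes (degrees) for ten ensemble members:
# one cluster near -95 and another near -85. All values are invented.
landfall_lons = [-95.4, -95.1, -94.8, -94.6, -95.0,
                 -85.3, -85.0, -84.7, -85.2, -84.9]

# A summary display built on the mean would point here.
mean_lon = statistics.mean(landfall_lons)

# Distance from the mean to the closest individual forecast.
nearest_gap = min(abs(lon - mean_lon) for lon in landfall_lons)

print(f"mean landfall longitude: {mean_lon:.1f}")
print(f"closest ensemble member is {nearest_gap:.1f} degrees away")
```

In this made-up case the mean falls between the two clusters, several degrees from where any single model expects landfall, which is the kind of structure an ensemble display keeps visible.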

The advantages and disadvantages of summary displays are the mirror image of those of ensemble displays: The problem of visual crowding may be avoided, but information about the distribution is necessarily lost. A further drawback of summary displays is that their “cone of uncertainty” (as in the above figure) is often misinterpreted by non-experts as depicting the spatial expansion of a hurricane over time, rather than as representing the growing uncertainty about the hurricane’s future position.
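
As a rough sketch of how a summary might be derived from an ensemble, the following reduces a handful of tracks to a mean, a median, and a spread at each forecast step. The tracks are hypothetical, the function name `summarize` is an assumption, and the min/max range is only a crude stand-in for a proper confidence interval.

```python
import statistics

# Hypothetical ensemble: each member is a list of (lat, lon) positions,
# one per forecast time step. All values are invented for illustration.
ensemble = [
    [(25.0, -85.0), (26.5, -86.0), (28.0, -87.5)],
    [(25.0, -85.0), (26.8, -85.5), (28.9, -86.0)],
    [(25.0, -85.0), (27.0, -85.0), (29.5, -84.8)],
    [(25.0, -85.0), (26.2, -87.0), (27.5, -90.0)],
    [(25.0, -85.0), (26.9, -85.2), (29.2, -85.1)],
]

def summarize(ensemble, step):
    """Return mean, median, and min/max range of longitude at one step."""
    lons = [member[step][1] for member in ensemble]
    return {
        "mean": statistics.mean(lons),
        "median": statistics.median(lons),
        "spread": (min(lons), max(lons)),  # crude stand-in for an interval
    }

summary = [summarize(ensemble, step) for step in range(3)]

for step, stats in enumerate(summary):
    low, high = stats["spread"]
    print(f"step {step}: median {stats['median']:.1f}, width {high - low:.1f}")
```

With these invented tracks the spread widens at each step, so a cone drawn from it would grow over time because the models diverge, not because the storm itself gets larger.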

Padilla and colleagues designed two experiments to compare ensemble and summary displays by focusing on the role of the salient features of each display.

In the first experiment, novice participants were shown one of the two types of displays with either the initial size or the initial intensity of the hurricane superimposed. The actual forecast information being shown was the same for both types of display. In addition, an “oil rig” in the potential path of the hurricane was identified by a red marker, and participants had to indicate either the intensity or the size of the hurricane if it were to hit that rig, using the corresponding multiple-choice display in the figure below. (So if size was initially depicted, the task would involve choosing the size from the lower row of alternatives in the figure; conversely, if intensity was initially depicted, the top row of choices would be presented.)

In line with previous results, Padilla and colleagues found that the size of a hurricane was judged to be considerably larger with the summary display than the ensemble display, all other variables (e.g., position of the oil rig) being equal.

Turning to intensity, the summary display again elicited higher judgments of intensity than the ensemble display overall. However, this overall effect was qualified by an interaction involving the lateral distance of the oil rig from the center of the predicted path of the storm. This interaction is shown in the figure below:

For both types of display, the hurricane is judged to lose intensity the further away from its expected average path it hits the oil rig. That presumed loss of intensity is greater with the ensemble display, presumably reflecting the lesser density of ensemble paths further from the central core.

In the second experiment, Padilla and colleagues focused on the ensemble display only and examined whether people’s judgments were affected by whether an oil rig was located precisely on one of the projected paths or in between ensemble members. To illustrate, in the figure below rig “a” is on a projected path whereas rig “b” is in between two paths. Participants had to decide which of the two rigs would receive more damage from the hurricane.

The results showed that participants predominantly chose the rig closer to the center of the hurricane forecast, although that tendency was reduced when the rig further from the center fell directly onto an ensemble member rather than in between projections. Clearly, ensemble displays invoke their own interpretative biases, in this case an over-weighting of individual ensemble members.

Taken together, the two experiments illustrate various biases associated with hurricane prediction displays. With a summary display, viewers are more likely to incorrectly interpret the “cone of uncertainty” as indicating a storm’s size. With an ensemble display, viewers are more likely to interpret distance from the center as indicating a weakening of the storm’s intensity. Finally, with an ensemble display people pay undue attention to the precise location of a single ensemble member, rather than interpreting the density of ensemble members as an indication of a probability distribution.
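
Reading the density of ensemble members as a probability distribution can be sketched as follows. This is not the analysis used in the study; the cross-track offsets, the rig positions, the 50 km window, and the helper `local_density` are all assumptions made for illustration.

```python
# Hypothetical cross-track positions (km from the forecast center) at which
# each ensemble member passes the latitude of the rigs. Invented values.
member_offsets = [-120, -60, -35, -20, -10, 0, 5, 15, 30, 55, 90, 200]

def local_density(position, offsets, window=50):
    """Fraction of members passing within `window` km of `position`."""
    hits = sum(1 for off in offsets if abs(off - position) <= window)
    return hits / len(offsets)

rig_a = 200  # sits exactly on an outlying ensemble member
rig_b = 40   # sits between members, but close to the dense core

density_a = local_density(rig_a, member_offsets)
density_b = local_density(rig_b, member_offsets)

print(f"rig a: {density_a:.2f} of members nearby")
print(f"rig b: {density_b:.2f} of members nearby")
```

Under these assumptions the rig lying directly on a path has far fewer members nearby than the rig lying between paths, so giving extra weight to the single overlapping line would point in the wrong direction.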

And to take these findings from the lab to the real world, imagine how viewers might have interpreted the size of Maria from this forecast:

Article focused on in this post:

Padilla, L. M., Ruginski, I. T., & Creem-Regehr, S. H. (2017). Effects of ensemble and summary displays on interpretations of geospatial uncertainty data. Cognitive Research: Principles and Implications. DOI: 10.1186/s41235-017-0076-1.