There are absolutely no swear words in Toy Story 3. This should probably go without saying if you’re even vaguely familiar with the Disney/Pixar approach to family entertainment. And yet, as I paused my scrolling on a popular short video app, this was exactly the thought that skipped through my mind as I heard Michael Keaton, voicing Ken the doll, drop a clear-as-day F-bomb while Ken was in the midst of being tortured through fashion by none other than Barbie herself. (No comment on the apparent nature of wholesome family entertainment portrayed here.) But even more surprising than hearing the English language’s most famous no-no word was how fleeting it was. After I read in the comments that the line is actually “Oh Barbie!”, the illusion seemed to evaporate completely on the video’s next playthrough, only to return on the one after that.

This and other examples of auditory illusions seem to make up a popular genre of internet content, where a messy sound signal can be perceived to be more than one thing. You might have encountered this in Yanny vs. Laurel or green needle vs. brainstorm or any of the hundreds of videos featuring a rainbow of different phrases displayed over a looping audio track. In each of these, perception of what is being said in the audio track seems to depend on which caption you pay attention to. The core phenomenon underlying each of these examples is the fact that speech perception is inherently multisensory. When we understand spoken language, we’re not just focusing on the audio of speech; we also take cues from our other senses, including what we see.

One of the most famous demonstrations of the multisensory nature of speech processing is the McGurk effect, in which the perception of a word or syllable in an audio track is influenced by a simultaneously played video of a person saying a different word or syllable. For example, when audio of a person saying “ba” is played at the same time as a silent video of someone saying “ga”, people will often report hearing “da” or even “ga”, even though the actual sound is “ba”. In other words, watching a person pronounce a different sound changes the way the auditory signal is perceived.

McGurk effects are a useful tool for cognitive psychologists studying how and when multisensory information comes together during speech perception. Traditionally, many of these studies have used non-word syllables like “ba” and “va” to elicit McGurk effects. But as Josh Dorsi, Rachel Ostrand, and Lawrence D. Rosenblum (pictured below) point out in their recent Attention, Perception, & Psychophysics paper, non-word syllables don’t allow us to study these questions at the level of lexical processing, where we identify which words are being spoken to us. Without a way to study multisensory processing of words, it remains unclear whether individual words are first identified within each sensory stream before the streams come together, or whether the streams combine first and words are identified afterward.

Dorsi, Ostrand, and Rosenblum investigate this question in their article, “Semantic priming from McGurk words: Priming depends on perception”, by taking advantage of another phenomenon called semantic priming, in which a word (such as “nurse”) is generally easier to process if it has been preceded by a semantically related word (like “doctor”). In semantic priming paradigms, the word presented first is called the “prime”, and the word presented later, which can be easier to process, is called the “target”.

In their main experiment, the authors used McGurk stimuli as primes: an audio recording of a word (like “bale”) was played at the same time as a silent video of a person pronouncing a different, rhyming word (such as “veil”). The targets that followed were related either to the auditory word (like “hay”, which relates to “bale”) or to the visual word (like “wedding”, which is associated with “veil”). After seeing and hearing a McGurk prime, participants listened to an audio-only target and indicated, as quickly as possible, whether or not the target was a real word. (The study included non-word items for the sake of this lexical decision task, but the authors did not analyze the data for these non-words.)

The general aim of the experiment was to see which component of the McGurk stimuli, auditory or visual, had greater priming power. If participants responded more quickly to targets related to the auditory word, that would imply that people were identifying words within the auditory signal without much influence from the visual signal. If, on the other hand, the visual signal caused more priming (in the form of faster responses to targets related to the visual word), that would imply that people identified words after the visual stream had combined with the auditory stream, since perceiving a prime as anything other than the word in its raw audio necessarily involves some influence from another sensory stream.

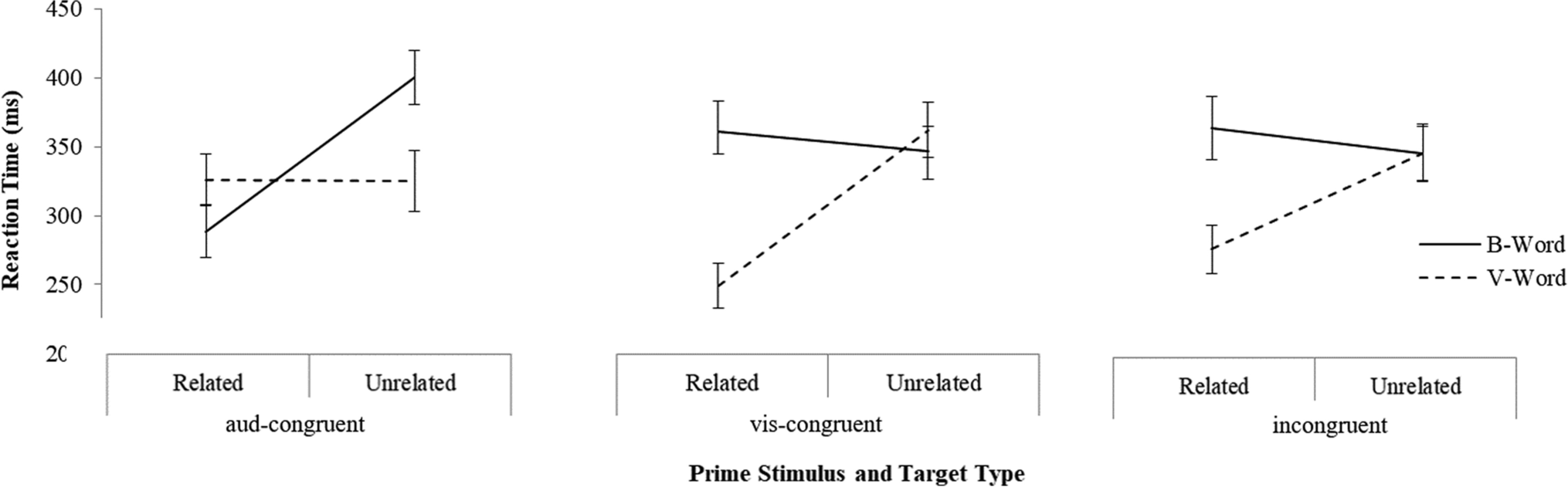

Their response time results indicate that people were more strongly primed by the visual component of the McGurk stimuli than by the auditory component. To use the example above, participants responded to “wedding” faster than to “hay”, indicating that the visually presented word “veil” had more priming influence than the auditory component, “bale”. These results therefore suggest that during speech perception, audio and visual information combine into one signal before people start identifying words.

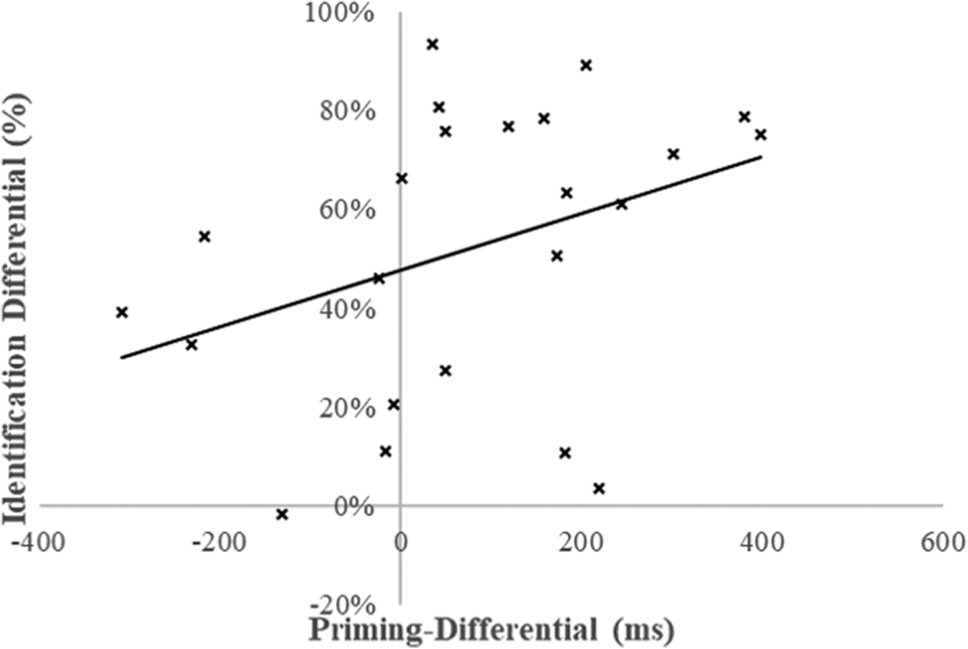

But the authors wanted to be sure that these priming patterns could indeed be linked to the experience of the McGurk effect. In the second part of their study, they therefore presented all of the McGurk primes to participants and had them explicitly state which word they heard. Using these identification data in conjunction with the response time data, they calculated two measures for each McGurk prime: how likely it was to elicit a McGurk effect, and how strongly it produced priming. They then correlated these two measures and found that stronger McGurk effects do indeed go along with stronger priming from the combined multisensory signal.

Critically, the authors also contextualized their findings with a previous version of the study conducted by Ostrand, which found the opposite pattern of results: the auditory signal, not the visual signal, elicited stronger priming. Suspecting that this discrepancy may have been driven by weaker McGurk effects in the previous study, the authors assessed the effectiveness of the previous study’s McGurk primes by presenting them to a new group of participants and having them report which sound they believed each item began with. They found that, indeed, the primes used in the previous study elicited fewer McGurk effects than those from the current experiment.

Taken together, these findings suggest that information from multiple sensory streams does indeed come together before lexical processing. When the streams combine effectively enough to produce McGurk effects, the combined multisensory signal can create auditory illusions powerful enough to override the actual auditory signal! For some speech perception researchers, that’s a finding that could warrant a “F— yeah!” For anyone offended, just pretend they said “Barbie”.

Featured Psychonomic Society article

Dorsi, J., Ostrand, R., & Rosenblum, L. D. (2023). Semantic priming from McGurk words: Priming depends on perception. Attention, Perception, & Psychophysics, 85, 1219–1237. https://doi.org/10.3758/s13414-023-02689-2