Reproducibility is the hallmark of science. It has been argued that a finding needs to be repeatable to count as a scientific discovery and that replicability is a line of demarcation that separates science from pseudoscience.

The fact that a recent large replication effort of 100 studies found that fewer than half of cognitive and social psychology findings could be replicated thus gave rise to considerable concern in the scientific community and beyond.

The concern about reproducibility, often labelled the “replication crisis”, has also stimulated a flurry of research activity. We reported on reproducibility-related issues on this blog here, here, and here. We have also discussed how to increase the replicability of studies (e.g. here, here, here, and here), and we have covered a few meta-analyses (here, here).

In this post, we continue our coverage of reproducibility issues based on a recent article in the Psychonomic Society’s journal Behavior Research Methods. Researchers Robbie van Aert and Marcel van Assen addressed the question of how to combine the results of a replication study with the results of an original published experiment that was significant.

This is a non-trivial issue because, even putting aside publication bias, the effect size reported by the original study will, on average, overestimate the true value: it was observed conditional upon the result being significant. For the follow-up study, which has not been selected for significance, the effect size is not truncated at a significance cut-off and hence will, on average, be smaller and closer to the true effect.
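A small simulation can make this selection effect concrete. The sketch below is ours rather than anything from the article: the true effect (0.2), the group size (40), and the normal approximation to the sampling distribution of the standardized mean difference are all illustrative assumptions.

```python
import random
from statistics import NormalDist, mean

def simulate_selected_effects(true_d=0.2, n_per_group=40, n_studies=20000, seed=1):
    """Compare the average effect-size estimate across all simulated studies
    with the average across only those studies that reached significance."""
    rng = random.Random(seed)
    # Approximate standard error of a standardized mean difference
    # for two equal groups (illustrative simplification).
    se = (2 / n_per_group) ** 0.5
    # Smallest estimate that is significant at two-tailed alpha = .05.
    cutoff = NormalDist().inv_cdf(0.975) * se
    estimates = [rng.gauss(true_d, se) for _ in range(n_studies)]
    significant = [d for d in estimates if d > cutoff]
    return mean(estimates), mean(significant)

all_mean, significant_mean = simulate_selected_effects()
print(f"mean estimate, all studies:         {all_mean:.3f}")
print(f"mean estimate, significant studies: {significant_mean:.3f}")
```

Averaged over all simulated studies, the estimate sits close to the true effect. Averaged over the significant ones only, it must exceed the cut-off and is therefore inflated.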

This statistical fact can give rise to a dilemma: Suppose that a researcher has replicated a published study and that this replication falls short of significance. It is nonetheless possible that the combined effect size across both studies, obtained by means of a (small) meta-analysis, will be significantly different from zero (because the combined power of both studies may be sufficiently large). But how is a researcher to interpret this result given that the effect size of the first study is most likely too large?

van Aert and van Assen provide a solution to this dilemma by introducing a “hybrid” meta-analysis technique that combines a statistically significant original study with a replication while correcting for the likely overestimation of the effect size by the original study.

The hybrid method makes use of the fact that the distribution of p-values under the null hypothesis is uniform. That is, when no effect is present, every value of p between 0 and 1 is equally likely, so that values of .05 or less turn up 5% of the time. (You may find this surprising but we have posted on this phenomenon before. A good explanation and pointers to teaching resources can be found here.)
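If you would like to see the uniformity for yourself, the following sketch simulates z-tests when the null hypothesis is true and tallies the resulting p-values into ten equal-width bins. The number of simulations and the use of a z-test are our illustrative choices.

```python
import random
from statistics import NormalDist

def null_p_values(n_sims=50000, seed=2):
    """Two-sided p-values of z-tests when the null hypothesis is true."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    # Under the null, the test statistic is a standard normal draw.
    return [2 * (1 - std_normal.cdf(abs(rng.gauss(0, 1)))) for _ in range(n_sims)]

p_values = null_p_values()

# Tally the p-values into ten bins of width 0.1.
bin_counts = [0] * 10
for p in p_values:
    bin_counts[min(int(p * 10), 9)] += 1
bin_shares = [count / len(p_values) for count in bin_counts]

share_below_05 = sum(p < 0.05 for p in p_values) / len(p_values)
print("share per bin:", [round(share, 3) for share in bin_shares])
print("share below .05:", round(share_below_05, 3))
```

Each bin should hold roughly a tenth of the p-values, and about 5% of them should fall below .05.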

Armed with this mathematical background, the hybrid method can deal with the inflation of the effect-size estimate in the original, significant, study by transforming its p-value (the details of the transformations can be safely ignored for now) before combining it with the p-value of the subsequent replication study. The composite of those p-values has a known sampling distribution and can therefore be used to estimate the combined effect size and draw statistical inferences about the evidence provided by both studies together.

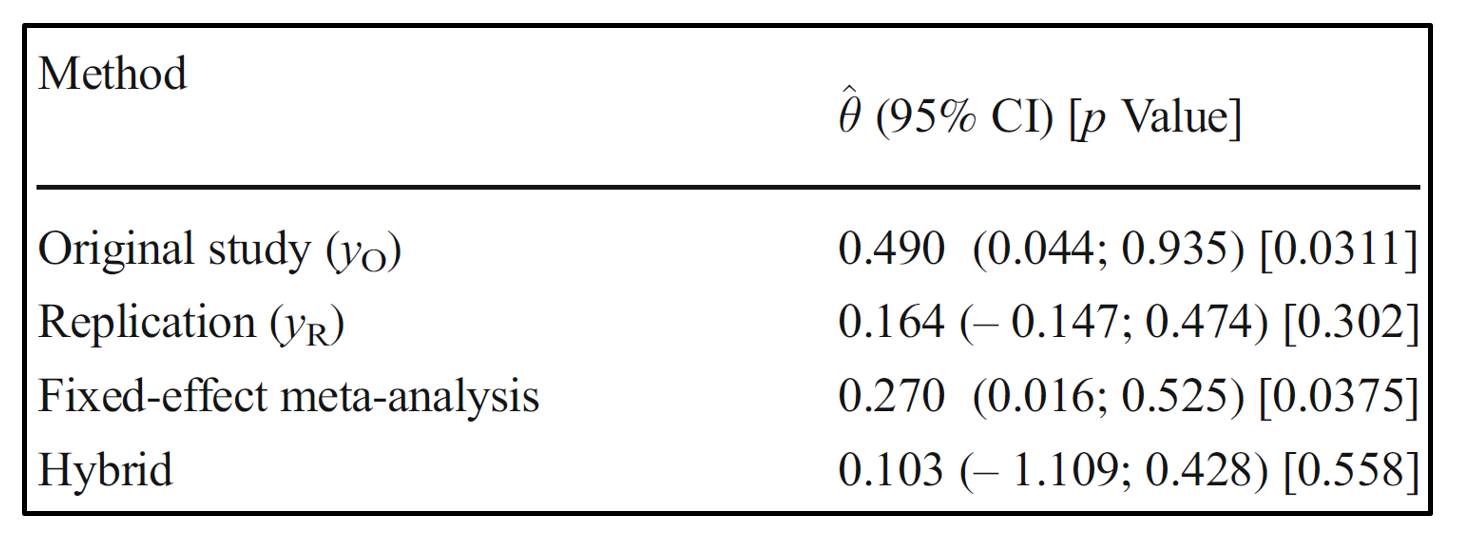

The implications of the hybrid method are illustrated in the figure below, using a hypothetical but plausible example. In this scenario, the original study was significant (hence p = .03 in the final column) and the estimated standardized mean difference was reasonably large (.490). The replication failed to reach significance and yielded a smaller effect size (.164), as would be expected for a study whose effect-size estimate is not selected on the basis of it being significant.

The last two rows in the figure are of greatest interest: if the two studies are combined in a conventional fixed-effect meta-analysis, the aggregate estimate of the effect size is again significantly greater than zero. As we noted above, this aggregated estimate is, however, inflated by an unknown amount because the first study was selected for its significance.
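For readers who want to see the arithmetic behind a conventional fixed-effect combination, here is a minimal sketch using inverse-variance weights. The effect sizes are those of the hypothetical example. The sample sizes (40 and 86 per group) and the simple standard-error formula are our assumptions, chosen so that the original study's p-value comes out near .03, so the output need not match the figure exactly.

```python
from statistics import NormalDist

def fixed_effect(estimates, std_errors):
    """Inverse-variance weighted average with a two-sided z-test against zero."""
    weights = [1 / se ** 2 for se in std_errors]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    pooled_se = (1 / sum(weights)) ** 0.5
    z = pooled / pooled_se
    p_two_sided = 2 * (1 - NormalDist().cdf(abs(z)))
    return pooled, pooled_se, p_two_sided

# Assumed per-group sample sizes (not stated in this post).
N_ORIGINAL, N_REPLICATION = 40, 86
se_original = (2 / N_ORIGINAL) ** 0.5        # approximate SE of d
se_replication = (2 / N_REPLICATION) ** 0.5  # approximate SE of d

pooled, pooled_se, p_fixed = fixed_effect(
    [0.490, 0.164], [se_original, se_replication]
)
print(f"fixed-effect estimate = {pooled:.3f} (SE = {pooled_se:.3f}), p = {p_fixed:.3f}")
```

Because the larger replication carries more weight, the pooled estimate lies closer to .164 than to .490. Under these assumptions it nonetheless remains significantly different from zero.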

The final row shows the results of the hybrid analysis, which corrects for that inflation. When this is done, it can be seen that the combined results no longer provide evidence for a significant overall effect.
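The logic of this correction can be sketched in a few lines, with strong caveats. This is a simplified illustration of the idea and not the authors' implementation. It assumes normally distributed effect-size estimates with known standard errors, an original study that was significant in the positive direction, and the same assumed sample sizes as before (40 and 86 per group, which are not given in this post). The function names are ours. The original study contributes a probability that is conditional on significance, the replication contributes an ordinary one, and their sum is compared with the distribution of the sum of two uniform variables.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()

def upper_tail(z):
    """P(Z >= z) for a standard normal (cdf(-z) is numerically stabler than 1 - cdf(z))."""
    return STD_NORMAL.cdf(-z)

def summed_probabilities(theta, y_orig, se_orig, y_rep, se_rep, alpha=0.05):
    """Sum of the two studies' probabilities, evaluated at a candidate true effect theta."""
    # Smallest original estimate that would have been significant.
    y_crit = STD_NORMAL.inv_cdf(1 - alpha / 2) * se_orig
    if y_orig <= y_crit:
        raise ValueError("the original study must be significant")
    # Original study: probability of an estimate at least this large,
    # GIVEN that the estimate was significant.
    q_orig = upper_tail((y_orig - theta) / se_orig) / upper_tail((y_crit - theta) / se_orig)
    # Replication: ordinary probability of an estimate at least this large.
    q_rep = upper_tail((y_rep - theta) / se_rep)
    return q_orig + q_rep

def hybrid_sketch(y_orig, se_orig, y_rep, se_rep, alpha=0.05):
    """Return (effect estimate, p-value for H0: theta = 0) under the stated assumptions."""
    args = (y_orig, se_orig, y_rep, se_rep, alpha)
    # Under H0 the sum is distributed as the sum of two uniforms;
    # small sums count as evidence for a positive effect.
    x0 = summed_probabilities(0.0, *args)
    p_value = x0 ** 2 / 2 if x0 <= 1 else 1 - (2 - x0) ** 2 / 2
    # Estimate: the theta at which the sum equals its expected value of 1.
    # The sum increases with theta, so bisection suffices.
    widest = max(se_orig, se_rep)
    lo, hi = min(y_orig, y_rep) - 8 * widest, max(y_orig, y_rep) + 8 * widest
    for _ in range(100):
        mid = (lo + hi) / 2
        if summed_probabilities(mid, *args) < 1:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2, p_value

se_original = (2 / 40) ** 0.5     # assumed 40 participants per group
se_replication = (2 / 86) ** 0.5  # assumed 86 participants per group
estimate, p_hybrid = hybrid_sketch(0.490, se_original, 0.164, se_replication)
print(f"hybrid-style estimate = {estimate:.3f}, p = {p_hybrid:.3f}")
```

Under these assumptions the corrected estimate falls below the replication's own estimate and is not significantly different from zero, in line with the pattern described above. The exact values in the figure come from the authors' method and may differ.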

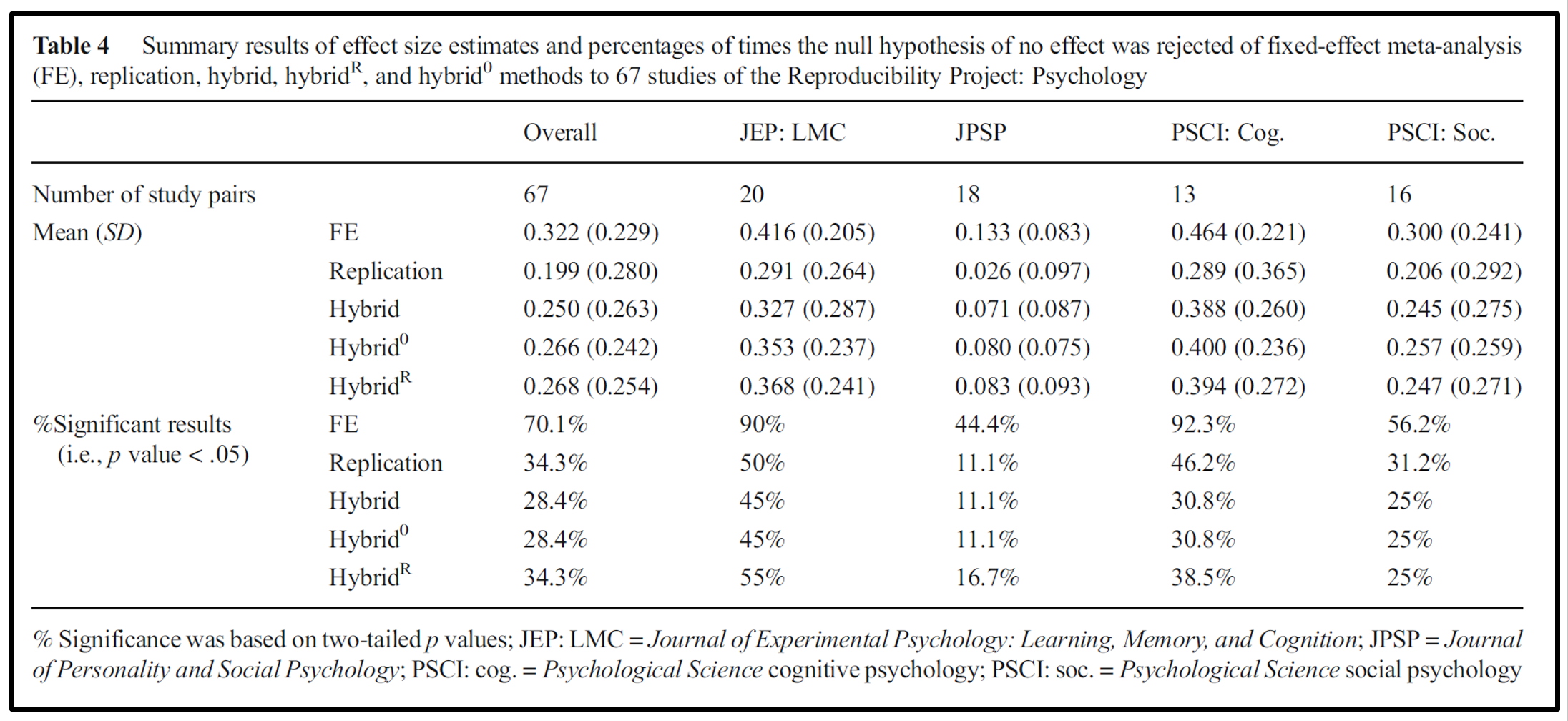

van Aert and van Assen applied the hybrid method to the results of the large replication effort that investigated the replicability of 100 studies drawn from a variety of journals and two areas of psychology, cognitive and social. The results for 67 studies that satisfied the requirements of the hybrid meta-analysis are shown in the table below.

Several aspects of the results are noteworthy:

- if only the replication is considered (rows labelled Replication), then overall only 34% of the replications were successful using the conventional frequentist criterion of significance (bottom panel, p < .05).

- this rate of successful replications was considerably higher for cognitive psychology than social psychology.

- when the original study and the replication were combined using conventional fixed-effect meta-analysis, the overall rate of significant effects jumped to 70% (and exceeded 90% for the cognitive findings). Note, however, that this result is too optimistic because the original study almost certainly over-estimated the actual effect size.

- when the original study and the replication were combined using the hybrid method, the combined overall rate of significance dropped considerably, as would be expected from correction of the bias in the original significant study (van Aert and van Assen discussed three variants of the methodology; the minor differences between them need not concern us here).

What can we conclude from this result?

It is clear that the hybrid analysis is far more conservative than conventional fixed-effect meta-analysis and that its overall effect-size estimate was even lower than that obtained in the replications.

How can we know that this strong conservatism of the hybrid method is appropriate? Is the method over-correcting the bias in the original study? Might we miss out on detecting true effects?

These questions were also answered by van Aert and van Assen, although the details of that aspect of their article are beyond the scope of a blog post. In a nutshell, the researchers applied the hybrid method to synthetic data whose properties (such as true effect sizes) were known. Across a wide range of parameter values (i.e., different true effect sizes and sample sizes), the hybrid method performed noticeably better than a conventional fixed-effect meta-analysis. With moderately large sample sizes for the replication (N > 50), the calibration of the hybrid method was quite impressive, and its estimates for the effect size differed little from the known true value in the synthetic data.

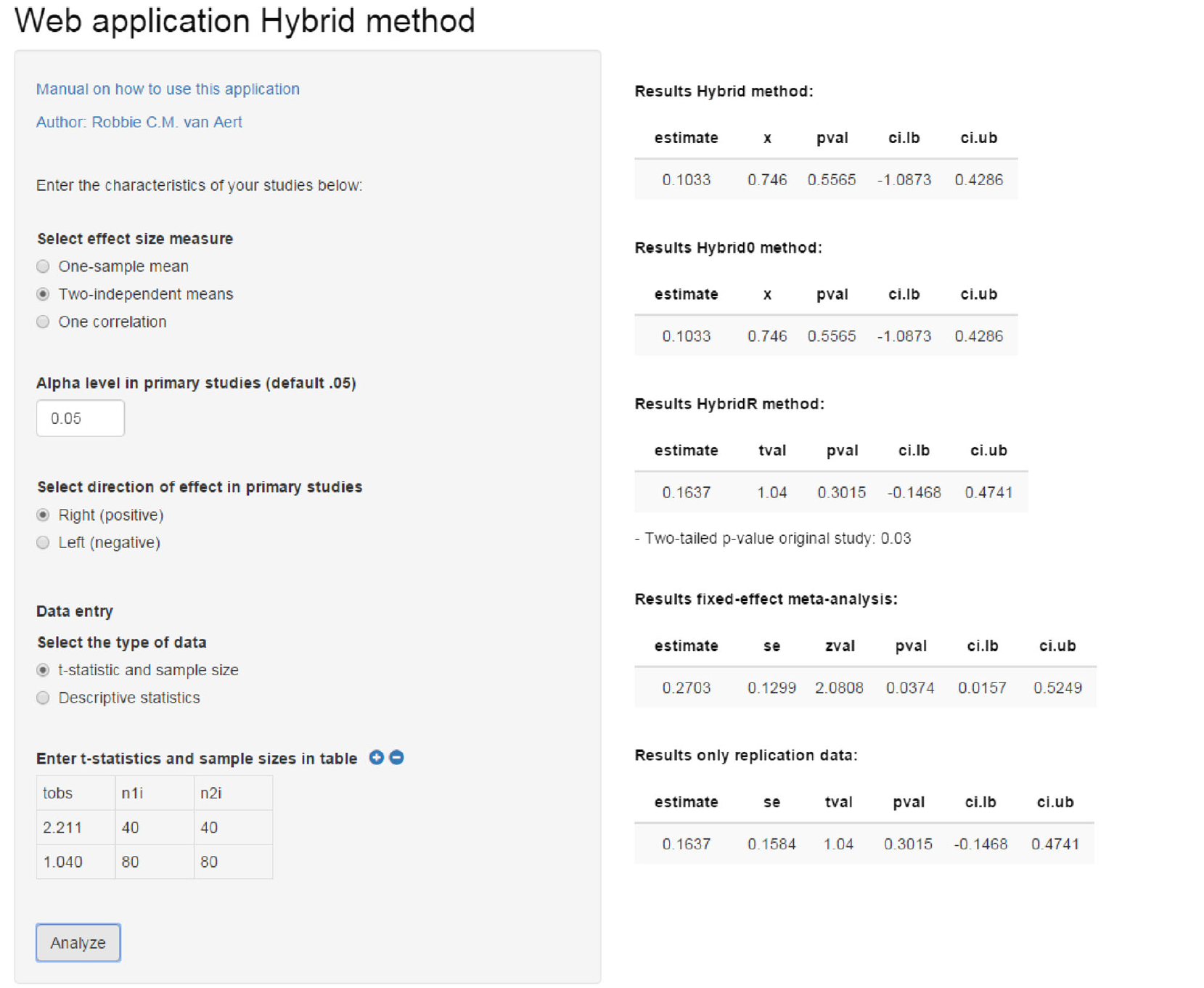

If you find these results promising and if you wish to try out the method yourself, you can go to this web app. Click a few buttons and you get results like this:

Psychonomics article featured in this post:

van Aert, R. C. M., & van Assen, M. A. L. M. (2018). Examining reproducibility in psychology: A hybrid method for combining a statistically significant original study and a replication. Behavior Research Methods, 50, 1515-1539. DOI: 10.3758/s13428-017-0967-6.