Asking your waiter what beers are on tap should come with a trigger warning, because the simple question may trigger a cognitive challenge. From Heineken to Beck’s to local boutique IPAs whose names you cannot even pronounce, the list can be so long that you are thrown into frantic rehearsal mode, recycling all the options just to keep them in mind while you make up your mind. (Chances are you’ll pick the first or the last beer on the list.)

Encoding a short list of verbal items and then immediately reproducing it is one of several verbal short-term memory (vSTM) tasks. Decades of research have revealed that performance on such tasks is related to other cognitive skills, such as vocabulary acquisition and even problem solving.

But what is the precise nature of that association? And given that correlation does not imply causation, does good vSTM performance enable people to do better at higher-level cognitive tasks, or is vSTM performance an outcome of better cognitive performance?

A recent article in the Psychonomic Society’s journal Memory & Cognition tackled this question. Researchers Gary Jones and Bill Macken argued that vSTM is an outcome rather than a cause of language learning. Moreover, they suggested that rather than being domain-specific (e.g., verbal vs. visual material), performance on vSTM tasks primarily reflects domain-general associative learning abilities.

Consistent with this view, previous research has shown that long-term learned associations, such as differences in interitem transitional probabilities (how likely one item is to follow another), can facilitate vSTM performance even when other factors, such as the familiarity of the verbal material, are controlled.

Jones and Macken approached the problem by designing a computational model of associative learning, which they then used to derive predictions for how people should perform on particular types of vSTM lists. Their CLASSIC (Chunking Lexical and Sublexical Sequences In Children) model learns increasingly large chunks from the sequential input it receives during training.

The learning mechanism in CLASSIC is simple: each utterance is recoded into as few meaningful units (chunks) as possible, based on the chunks already learned. The model then learns a new chunk for each adjacent pair of chunks in the recoded representation of the utterance. At the outset, the model knows only the basic phonemes of English; over time, these phonemes are combined into larger chunks based on their co-occurrence. To prevent every pairing of phonemes from becoming a chunk of its own, the learning rate is kept small enough to filter out chance co-occurrences.
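The recode-then-learn cycle just described can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the authors' CLASSIC implementation: each character stands in for one phoneme, the hypothetical function `recode` finds a parse with the fewest known chunks by dynamic programming, and the learning rate is modeled (an assumption here) as the probability that a given adjacent pair of chunks is stored as a new chunk on a single exposure. The function names and the tiny corpus are made up for illustration.

```python
from __future__ import annotations

import random


def recode(utterance: str, chunks: set[str]) -> list[str]:
    """Split `utterance` into as few known chunks as possible.

    Each character stands in for one phoneme (a simplification).
    best[i] holds a fewest-chunk parse of utterance[:i], or None.
    """
    n = len(utterance)
    best: list[list[str] | None] = [None] * (n + 1)
    best[0] = []
    for i in range(1, n + 1):
        for j in range(i):
            prefix = best[j]
            piece = utterance[j:i]
            if prefix is None or piece not in chunks:
                continue
            candidate = prefix + [piece]
            current = best[i]
            if current is None or len(candidate) < len(current):
                best[i] = candidate
    parse = best[n]
    if parse is None:
        raise ValueError("utterance contains a phoneme the model does not know")
    return parse


def learn(utterance: str, chunks: set[str], learning_rate: float,
          rng: random.Random) -> list[str]:
    """Recode `utterance`, then store each adjacent pair of chunks as a new
    chunk with probability `learning_rate` (an assumed stand-in for a small
    learning rate). Returns the parse that was used for learning."""
    parse = recode(utterance, chunks)
    for left, right in zip(parse, parse[1:]):
        if rng.random() < learning_rate:
            chunks.add(left + right)
    return parse


if __name__ == "__main__":
    # Hypothetical toy corpus; letters play the role of phonemes.
    corpus = ["thedog", "thecat", "seethedog", "bigdog"]
    chunks = set("".join(corpus))  # start with single "phonemes" only
    rng = random.Random(0)
    for _ in range(300):
        for utterance in corpus:
            learn(utterance, chunks, learning_rate=0.05, rng=rng)
    print(recode("thedog", chunks))
```

Because a pair is stored only with probability `learning_rate` each time it occurs, pairings that recur across many utterances are likely to be learned eventually, whereas one-off pairings usually are not; that is the filtering role the paragraph assigns to a small learning rate.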

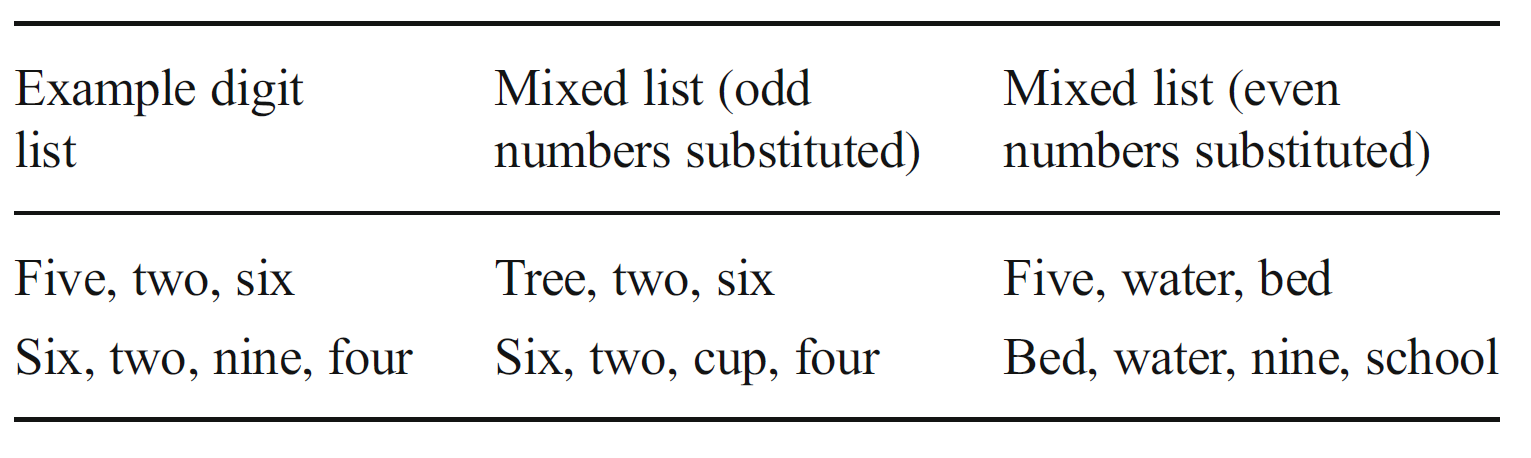

The model was trained by presenting it with transcribed utterances that were directed at young children. After it had acquired “language”, the model generated predictions for a vSTM experiment for lists of digits in which some of the time some of the digits were replaced by words. The figure below shows the list structure:

The rationale behind this manipulation was to examine whether the recall advantage that lists of digits commonly enjoy over lists of words reflects learned associations between co-occurring digits or some more basic property of digits. The learned-associations hypothesis predicts that a digit that is neither preceded nor followed by another digit should lose its advantage over a word in the same isolated position.

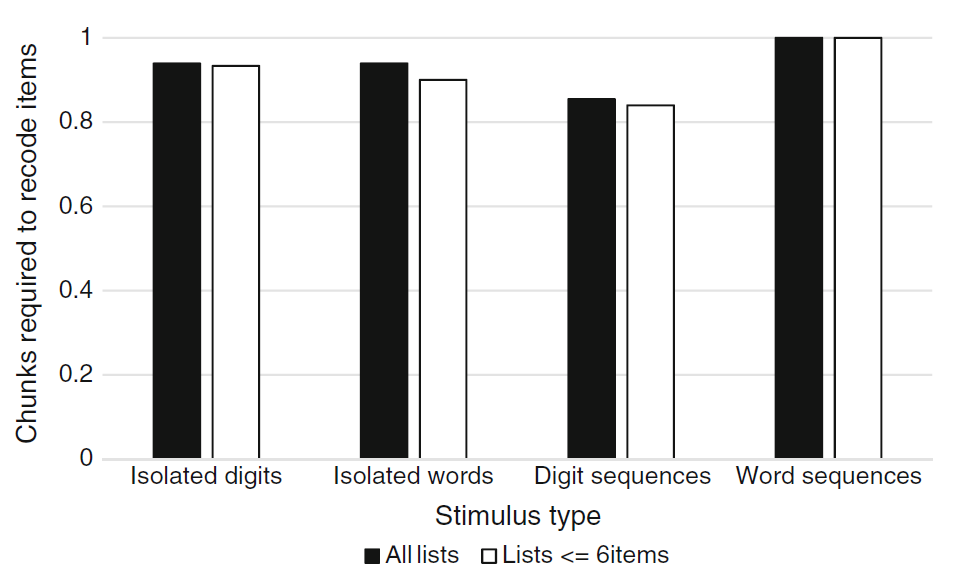

The associative-learning based model predictions are shown in the next figure.

Performance here is expressed as the number of chunks required to recode the list items; a larger number indicates worse predicted recall. Digit sequences show a clear advantage over word sequences, reflecting extensive learning of all possible transitions within this small set of stimuli. The advantage disappears, however, when a digit is presented in isolation: the number of chunks required for that list does not differ from its counterpart in which a word is isolated.
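To make the chunk-count measure concrete, here is a toy Python sketch. Everything in it is a hypothetical illustration rather than CLASSIC's learned lexicon or the exact list structure of the experiment: the tokens are invented, and the chunk inventory simply assumes that every ordered pair of digits has been learned as a chunk (standing in for extensive exposure to digit sequences), while words are known only as single items. The hypothetical function `fewest_chunks` returns the smallest number of known chunks that covers a list.

```python
from __future__ import annotations

import math
from itertools import product

# Hypothetical stimuli: digit tokens and arbitrary word tokens.
DIGITS = [str(d) for d in range(1, 10)]
WORDS = ["cat", "dog", "pen", "cup", "hat", "box"]

# Assumed chunk inventory: every item alone, plus every ordered digit pair.
CHUNKS: set[tuple[str, ...]] = {(item,) for item in DIGITS + WORDS}
CHUNKS |= set(product(DIGITS, repeat=2))


def fewest_chunks(items: list[str], chunks: set[tuple[str, ...]]) -> int:
    """Smallest number of known chunks that exactly covers `items`."""
    best = [0.0] + [math.inf] * len(items)  # best[i]: fewest chunks for items[:i]
    for i in range(1, len(items) + 1):
        for j in range(i):
            if tuple(items[j:i]) in chunks:
                best[i] = min(best[i], best[j] + 1)
    if math.isinf(best[-1]):
        raise ValueError("list contains an item with no known chunk")
    return int(best[-1])


lists = {
    "all digits": ["3", "7", "5", "2", "9", "1"],
    "all words": ["cat", "dog", "pen", "cup", "hat", "box"],
    "digit isolated among words": ["cat", "dog", "5", "cup", "hat", "box"],
}
for name, items in lists.items():
    print(f"{name}: {fewest_chunks(items, CHUNKS)} chunks")
```

Under these assumptions the all-digit list needs 3 chunks, while the all-word list and the list with an isolated digit both need 6: the isolated digit cannot join a neighbor in any known chunk, so it costs as much as the word it replaces.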

Jones and Macken tested the model predictions with 6-year-old children. The children heard lists of the different types shown in the figure above from a tape recording and recalled each list immediately after hearing it.

The results showed the usual digit superiority: on average, children recalled more than 6 digit lists correctly, compared with just under 5 word lists. For isolated words and digits, the digit advantage disappeared, as predicted by the model. In fact, isolated words were recalled better than isolated digits. Although this reversal went beyond what the model predicted, it supports the idea that digit superiority arises from greater experience with digit sequences rather than from any inherent characteristic of digits.

In a second experiment, Jones and Macken gave the children a nonword repetition task, another common vSTM task, in which the participant hears a nonword and immediately tries to repeat it aloud. CLASSIC makes a distinct prediction for each nonword, based on the learned transition probabilities between the phonemes or chunks that make it up.

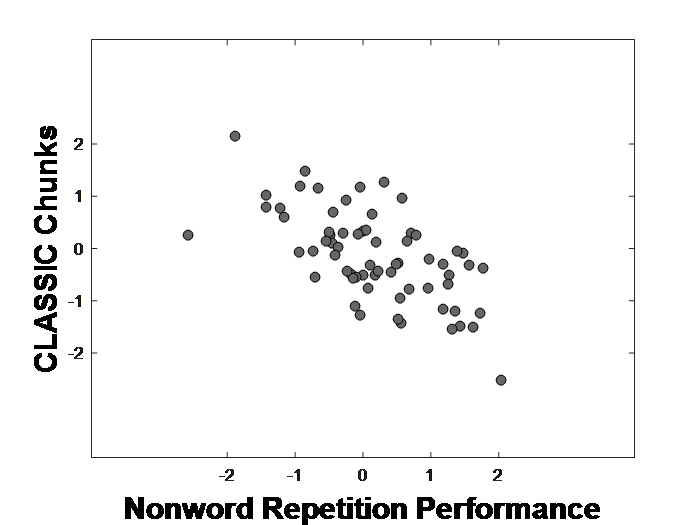

In support of the learned-association account, there was a fairly strong correlation (r= -.63) between the children’s performance on each item and the number of chunks required by CLASSIC to recode each particular word. (The correlation is negative because fewer chunks translate into better performance, as in the preceding figure). Just for fun, I illustrate a correlation of exactly -.63 below for the number of items used by Jones and Macken (on entirely arbitrary scales):

The figure illustrates the magnitude of the association nicely. Because CLASSIC’s predictions arise entirely from the associative learning it performs during language acquisition, the data from the second experiment further support the notion that vSTM performance is a consequence, not a cause, of long-term associative learning of linguistic material.
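An illustration of an exact r = −.63 can be generated with a standard trick, sketched here in pure Python; it is one common way to do this, not necessarily how the figure was made. Draw a random vector `x` and a noise vector, remove from the noise everything that is linearly related to `x`, standardize both, and combine them as y = r·x + √(1 − r²)·e. Because `x` and `e` then have unit variance and zero covariance, the sample correlation of `x` and `y` equals `r` exactly, up to floating-point error. The function names are hypothetical, and `n = 30` is an arbitrary placeholder, not the number of items in the study.

```python
from __future__ import annotations

import math
import random


def standardize(v: list[float]) -> list[float]:
    """Rescale to mean 0 and (population) standard deviation 1."""
    mean = sum(v) / len(v)
    centered = [a - mean for a in v]
    sd = math.sqrt(sum(a * a for a in centered) / len(v))
    return [a / sd for a in centered]


def pearson(x: list[float], y: list[float]) -> float:
    """Sample Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def exact_r_sample(n: int, r: float, seed: int = 0) -> tuple[list[float], list[float]]:
    """Return x, y (arbitrary scales) whose sample correlation is exactly r."""
    rng = random.Random(seed)
    x = standardize([rng.gauss(0.0, 1.0) for _ in range(n)])
    noise = [rng.gauss(0.0, 1.0) for _ in range(n)]
    noise_mean = sum(noise) / n
    noise = [a - noise_mean for a in noise]
    # Remove the component of the noise that lies along x (x has mean 0).
    beta = sum(a * b for a, b in zip(noise, x)) / sum(b * b for b in x)
    e = standardize([a - beta * b for a, b in zip(noise, x)])
    y = [r * a + math.sqrt(1.0 - r * r) * b for a, b in zip(x, e)]
    return x, y


x, y = exact_r_sample(n=30, r=-0.63)
print(round(pearson(x, y), 6))  # -0.63
```

Any positive linear rescaling of `x` or `y` (for example, onto made-up score scales) leaves the correlation unchanged, which is why the scales in such an illustration can be arbitrary.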

Based on these results, Jones and Macken offer a provocative and intriguing conclusion:

“In short, our computational model of associative learning provides a parsimonious explanation of performance in vSTM tasks without the need for additional bespoke processes such as a short-term memory system. Rather than being viewed as a specific processing system, we suggest that vSTM be viewed as a particular setting in which the participant applies his or her language knowledge to the task at hand.”

So the next time you need to choose from among a dozen draft beers, just think of it as an exercise in linguistics rather than memory. Hint: Beck’s takes fewer chunks to encode than Heineken, so go for that.

Psychonomics article highlighted in this blog post:

Jones, G., & Macken, B. (2018). Long-term associative learning predicts verbal short-term memory performance. Memory & Cognition, 46, 216–229. DOI: 10.3758/s13421-017-0759-3.