I was hurriedly looking through a messy drawer the other day in search of a retractable tape measure for a project I was working on around the house. A roll of adhesive tape caught my attention. No, not that. Then I reached for a ruler. Nope, that wasn’t quite what I was looking for, either. I finally spied the tape measure I sought, grabbed it, and quickly shut the offending drawer, vowing yet again to straighten it up soon.

What had just happened? Although our visual attention and eye gaze are guided by search templates (visual goal states), we are unwittingly distracted by non-target objects that share features with the object we are looking for. Things that share features are considered “neighbors” in the sense that they are close together in representational “space,” and objects that are close neighbors of our target are typically harder to reject than objects that are far neighbors.

Intuitively, it makes sense that objects that look similar to a retracted tape measure (say, a small box of about the same size) are distracting. After all, we have the appearance of that object in mind during our search. When our search template matches features of an object in the array, we incur a cognitive cost as we scrutinize the object further to assess whether it is, in fact, our target.

Perhaps more surprisingly, recent research has shown that objects also capture our visual attention when they share manipulations (here, pinching and pulling, as is the case with the adhesive tape) or goals (measuring, as is true of the ruler) with target objects. It is our brain’s task to resolve the competition engendered by the distracting adhesive tape and ruler so that our attention and eye gaze are free to fixate on the tape measure in preparation for grasping it.

This means that our search templates are not only visual, but that we may also have in mind object manipulations and task goals when we search for targets. How do these various forms of feature-sharing influence the competition between target and competitor objects? And what is the time-course of this competition? Several recent investigations have pursued these questions using the Visual World Paradigm.

In this well-studied experimental paradigm, participants typically search for a target object (or word) in one of four possible locations; the other locations are occupied by distractor objects (or words). Depending on the study’s questions and hypotheses, these distractors share various features with the target, and participants usually click on the target once they have located it. (Often, a brief preview period is provided on each trial before participants hear the name of the target.) The time course of fixations on the target and distractors tells us about the successive engagement and disengagement of visual attention on the objects in the array.
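To make the “time course of fixations” concrete, here is a minimal sketch of how raw gaze samples might be turned into fixation proportions per time bin. It assumes each trial is a list of `(time_ms, region)` eye-tracker samples, where `region` names the picture being looked at or is `None` when gaze is elsewhere; the region labels, the 50 ms bin width, and the function name are hypothetical choices for illustration, not taken from any particular study.

```python
from collections import Counter

# Hypothetical labels for the four picture locations in a display.
REGIONS = ("target", "competitor", "unrelated_1", "unrelated_2")


def fixation_proportions(trials, bin_ms=50, n_bins=20):
    """Proportion of gaze samples on each region, per time bin, pooled over trials.

    trials: list of trials; each trial is a list of (time_ms, region) samples,
            where region is one of REGIONS or None (looking elsewhere).
    Returns a dict mapping each region to a list of n_bins proportions.
    """
    counts = [Counter() for _ in range(n_bins)]
    totals = [0] * n_bins
    for samples in trials:
        for time_ms, region in samples:
            b = int(time_ms // bin_ms)  # index of the time bin this sample falls in
            if 0 <= b < n_bins:
                totals[b] += 1  # every sample, even off-picture, is in the denominator
                if region is not None:
                    counts[b][region] += 1
    return {
        r: [counts[b][r] / totals[b] if totals[b] else 0.0 for b in range(n_bins)]
        for r in REGIONS
    }
```

Computing these proportions separately for each participant and then averaging across participants gives the kind of fixation curves the paradigm is built around: a rising target curve, and competitor curves whose bumps show when each distractor drew attention.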

A recent study by Jérémy Decroix and Solène Kalénine in the #time4action special issue of Attention, Perception, and Psychophysics used a variant of the typical Visual World Paradigm that focused not solely on objects, but on the features that determine the allocation of attention to object-related actions. Participants were not given the name of the target; instead, they saw pictures of someone holding an object and had to locate the image displaying the correct use action.

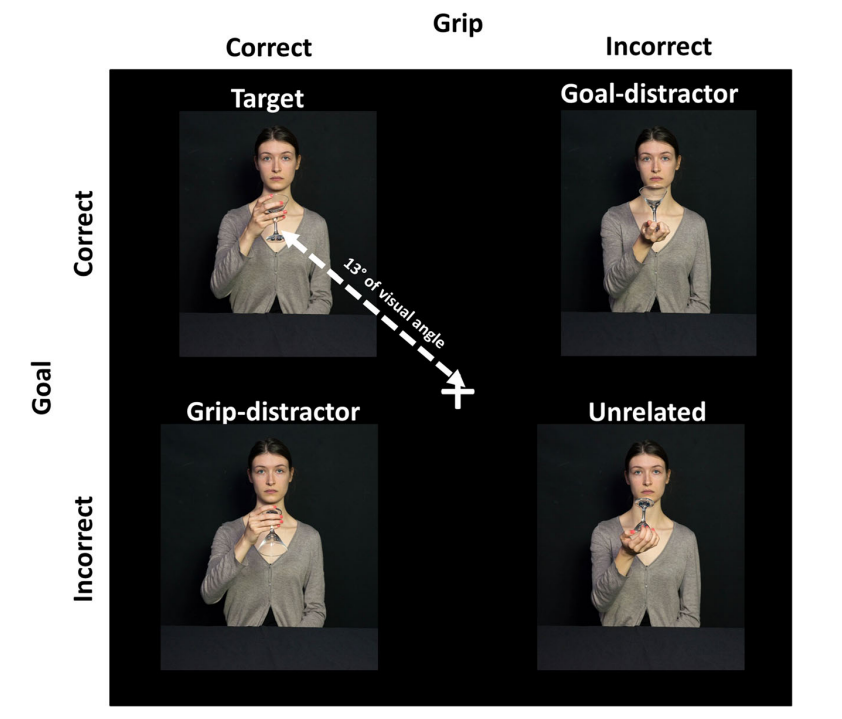

The distractor images were incorrect by virtue of the grip with which objects were held (manipulation) or the object orientation/congruence with the actor’s body (which was termed “goal”).

On each trial, the grip distractor shared manipulation but not orientation/goal with the target, while the goal distractor shared orientation/goal but not manipulation with the target. So, for example, as illustrated in the figure above, on a trial where the correct action was a glass held upright with a clench (power grip), the grip distractor was an inverted glass held with a power grip, and the goal distractor was an upright glass held in the palm.

As in prior similar studies, Decroix and Kalénine measured the proportion of gaze fixations on each of the four images across a series of time bins and averaged these proportions across participants, yielding a set of curves that describe the trajectory of visual attention to the target and distractors. They then used Growth Curve Analysis, which fits polynomial functions of time to such curves, to compare the shapes of the fixation-proportion curves for each type of distractor over time. They also controlled for the relative salience of each image.
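The core idea of Growth Curve Analysis can be sketched in a few lines: describe each fixation curve by an intercept plus orthogonal polynomial time terms (linear, quadratic, and so on), then compare those coefficients between conditions. The sketch below is a simplified, fixed-effects-only illustration under our own assumptions. Full Growth Curve Analysis is normally run as a mixed-effects model with condition-by-time interactions and participant-level random effects, and we do not know the exact model specification Decroix and Kalénine used. The function names are hypothetical.

```python
import numpy as np


def orthogonal_time_terms(n_bins, degree=2):
    """Orthonormal polynomial time terms (linear, quadratic, ...), similar in spirit to R's poly()."""
    t = np.arange(n_bins, dtype=float)
    # Columns 1, t, t^2, ...; QR orthonormalizes them in order.
    vander = np.vander(t, degree + 1, increasing=True)
    q, _ = np.linalg.qr(vander)
    terms = q[:, 1:]  # drop the constant column; the intercept is fitted separately
    # QR column signs are arbitrary; flip each term so it is positive in the last bin,
    # so a positive linear coefficient means "rising over time".
    signs = np.sign(terms[-1, :])
    signs[signs == 0] = 1.0
    return terms * signs


def fit_growth_curve(proportions, degree=2):
    """Least-squares fit of intercept + orthogonal time terms to one fixation curve."""
    y = np.asarray(proportions, dtype=float)
    terms = orthogonal_time_terms(len(y), degree)
    design = np.column_stack([np.ones(len(y)), terms])
    coefs, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coefs  # [intercept, linear, quadratic, ...]
```

Because the time terms are orthogonal to each other and to the constant, the intercept equals the curve’s mean fixation proportion and each remaining coefficient can be read on its own. Fitting, say, the goal-distractor and grip-distractor curves separately and comparing their linear and quadratic coefficients gives a rough sense of which distractor draws looks earlier and how sharply those looks peak and fade.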

Decroix and Kalénine found that fixations are first driven to distractor pictures in which objects share goals with targets, and only later to grip distractors. They interpreted these results in terms of predictive approaches to action understanding: to comprehend another person’s actions, we first try to match the observed action to an internal prediction of what the actor’s goal is. Next, we attend to visuo-spatial details (or, as the investigators claim, kinematic information) in order to test and update that prediction.

By moving beyond typical Visual World Paradigm methods to focus on actions rather than objects or words, this study opens a path for further exploration. As the investigators note, the goal distractors shared object identity and orientation with targets, and in some cases the grip distractors were mis-oriented by 180°, perhaps rendering them clearly incorrect and hence less distracting. In addition, since static images were used, movement kinematics were not directly available. If kinematic information is indeed necessary to update predictions about action identity, it is likely accessed from static pictures via visuo-spatial and/or motor imagery, which may make kinematics less rapidly accessible than object information (and hence less informative about whether the depicted action is correct). These open questions notwithstanding, this study marks an important step in our understanding of how various kinds of visual features influence action recognition.

One interesting aspect of this result is that the opposite pattern has been shown in studies where targets are objects rather than actions, including some of our prior work. In such cases, distractors sharing manipulation features with targets attract earlier looks than distractors sharing higher-level functional goals, even when controlling for similarity in visual appearance. One explanation is that manipulations are often closely tied to three-dimensional object structure, which is gleaned from 2D images relatively quickly compared with functional purpose.

When considered together with the Decroix and Kalénine study, it appears that we first attend to the features of the visual world that are most salient or rapidly available for informing our early predictions, and subsequently shift our gaze to obtain less readily available information to test and refine those predictions.

Next time I am rummaging frantically in that drawer looking for a certain object, I’ll try very hard not to think about how the object is used or what I intend to do with it. I hope this will make my search easier, as I doubt I will get around to cleaning the drawer anytime soon.

Psychonomics Article considered in this post:

Decroix, J., & Kalénine, S. (2019). What drives visual attention during the recognition of object-directed action? The role of kinematics and goal information. Attention, Perception, & Psychophysics. DOI: 10.3758/s13414-019-01784-7.