Apparently the internet, video games, and social media are damaging our children’s development, and are responsible for the increase in autism over the last few decades—or so it has been claimed, although that claim hasn’t withstood scrutiny. Similarly, Wikipedia has an entry for something known as Internet addiction disorder, which apparently occurs when internet use interferes with daily life. Although this disorder was initially cooked up as a joke (no kidding), the term now yields 8,000 hits on Google Scholar.

Apparently video games are good for you. President Ronald Reagan opined that “young people have developed incredible hand, eye, and brain coordination in playing these games” and thus “the air force believes these kids will be our outstanding pilots.” Some 30 years after President Reagan boldly set the stage, at least some researchers have concluded that video games “can do serious good.” (That claim, too, has not gone unchallenged.)

Lest you think that being really bad and really good at the same time is challenge enough for anyone (let alone the internet), it also turns out that people’s online habits have turned them into multitaskers. Instead of focusing on a single task or stream of information, people now try to monitor and interact with several streams at once: Instagram, Twitter, Facebook, GitHub, and OSF, all in parallel and in real time.

An influential 2009 report by Ophir and colleagues suggested that people who engage in heavy media multitasking perform worse on a battery of cognitive tasks that require filtering out irrelevant content. One example is a change-detection task, in which people’s memory for relevant items is tested while they try to ignore irrelevant items. Unfortunately, follow-up research has painted a more mixed picture, and the evidence regarding the relationship between media multitasking and distractibility is in need of further scrutiny.

A recent article in the Psychonomic Society’s journal Attention, Perception, & Psychophysics has provided some of that scrutiny. Researchers Wisnu Wiradhany and Mark Nieuwenstein tackled the issue with a two-pronged approach. First, they conducted two replication studies using the methodology with which Ophir and colleagues initially established the association between media multitasking and distractibility. Second, they conducted a meta-analysis of existing research to pin down the association more broadly.

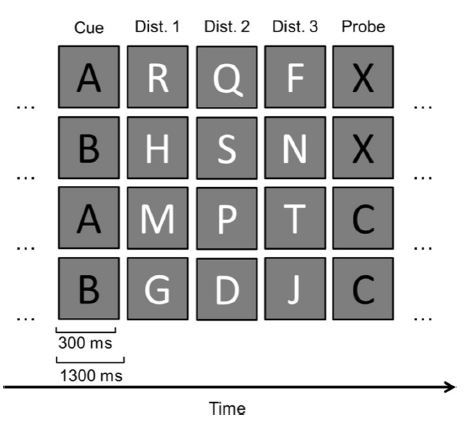

Turning first to the replication studies, Wiradhany and Nieuwenstein aimed to follow the original method of Ophir and colleagues as closely as possible, to the point of using the original experimental program to deliver the stimuli and the exact same questionnaire (the Media Multitasking Index, or MMI) to measure participants’ media multitasking habits. To illustrate their battery of tasks, consider the AX-CPT task shown in the figure above.

Each row of the matrix represents one trial consisting of five stimuli; the letters shown in black were actually presented in red. Participants had to detect a red “X” that was preceded by a red “A” while ignoring the distractors presented in white, as in the first row. They pressed one response key whenever that sequence occurred and another key when the sequence of red letters was anything else.
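To make the trial logic concrete, here is a minimal Python sketch of how a single AX-CPT trial could be classified and scored. It assumes a hypothetical representation in which each trial is a list of five (letter, colour) pairs, the first red letter is the cue and the second red letter is the probe; the names `classify_trial` and `correct_response` are illustrative and are not taken from the original experimental program.

```python
from typing import List, Tuple

Stimulus = Tuple[str, str]  # hypothetical (letter, colour) pair, e.g. ("A", "red")


def classify_trial(stimuli: List[Stimulus]) -> str:
    """Label a trial as AX, AY, BX, or BY from its two red letters.

    Assumes the first red letter is the cue and the second is the probe;
    white letters are distractors and are ignored.
    """
    red = [letter for letter, colour in stimuli if colour == "red"]
    if len(red) != 2:
        raise ValueError("expected exactly two red letters (cue and probe)")
    cue, probe = red
    cue_type = "A" if cue == "A" else "B"        # any non-A cue counts as a "B" cue
    probe_type = "X" if probe == "X" else "Y"    # any non-X probe counts as a "Y" probe
    return cue_type + probe_type


def correct_response(trial_type: str) -> str:
    """Only an A followed by an X calls for the target key."""
    return "target" if trial_type == "AX" else "non-target"


# A BX trial: red cue "D", three white distractors, red probe "X".
trial = [("D", "red"), ("K", "white"), ("M", "white"), ("P", "white"), ("X", "red")]
print(classify_trial(trial), correct_response(classify_trial(trial)))  # BX non-target
```

BX trials are the demanding case in this scheme: the probe is the target letter, so answering correctly requires using the preceding cue to override the pull toward the target key.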

In their first experiment, Wiradhany and Nieuwenstein found that people who scored high on media multitasking performed the AX-CPT task more slowly than people who scored lower on that measure. This replicated the original finding by Ophir and colleagues, although the result was qualified in several ways. First, the difference arose for only one type of trial (BX; see the second row of the AX-CPT figure) but not for the other (AX; first row). Second, the difference for BX trials was significant by frequentist statistics but not when Bayesian statistics were used. (We recently organized a digital event on Bayesian statistics that explored their advantages relative to their frequentist counterparts.) Third, in their second experiment, Wiradhany and Nieuwenstein found yet another pattern of results: high media multitaskers were slower on AX trials but not on BX trials.

To cut a complex and nuanced set of results short, Wiradhany and Nieuwenstein found that, overall, their replication attempts were inconclusive (i.e., most Bayes factors in both studies provided strong evidence neither for nor against the null hypothesis). Nonetheless, the outcomes of both studies generally pointed in the same direction as the original results by Ophir and colleagues, keeping open the possibility of an association between media multitasking and distractibility.
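What counts as “strong” evidence is a matter of convention. As a hedged illustration, the sketch below maps a Bayes factor BF10 (the evidence for the alternative hypothesis relative to the null) onto the verbal categories commonly used after Jeffreys, as adapted by Lee and Wagenmakers. The function name is hypothetical, and the thresholds are rules of thumb; the post does not say which labels Wiradhany and Nieuwenstein applied.

```python
def evidence_label(bf10: float) -> str:
    """Verbal label for a Bayes factor BF10 (evidence for H1 over H0).

    Uses the conventional 3 / 10 / 30 / 100 cut-offs, applied symmetrically:
    a BF10 below 1 is inverted and read as evidence for H0.
    """
    if bf10 <= 0:
        raise ValueError("a Bayes factor must be positive")
    favoured = "H1" if bf10 >= 1 else "H0"
    ratio = bf10 if bf10 >= 1 else 1 / bf10   # strength of whichever side wins
    if ratio < 3:
        strength = "anecdotal"
    elif ratio < 10:
        strength = "moderate"
    elif ratio < 30:
        strength = "strong"
    elif ratio < 100:
        strength = "very strong"
    else:
        strength = "extreme"
    return f"{strength} evidence for {favoured}"


print(evidence_label(1.5))   # anecdotal evidence for H1
print(evidence_label(0.2))   # moderate evidence for H0
```

Under this scheme, any BF10 between 1/3 and 3 counts as merely anecdotal, which is the sense in which a replication can be inconclusive rather than a clear success or failure.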

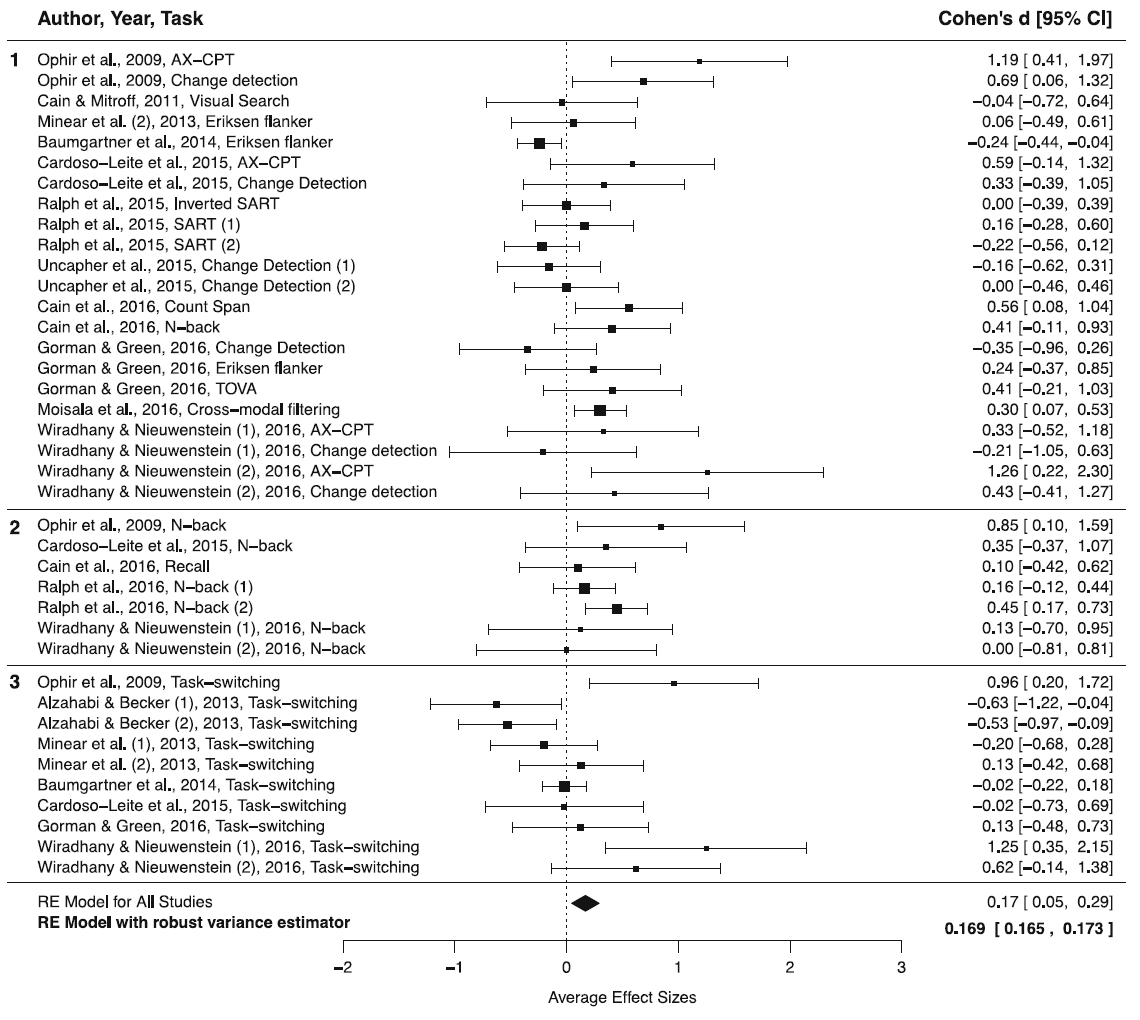

Next, Wiradhany and Nieuwenstein turned to a meta-analysis of around 40 published studies on this issue. The results are shown in the “forest plot” above.

The three panels of the plot refer to the different tasks used in the various studies; the top panel (AX-CPT task) corresponds to the task illustrated earlier. The figure makes several interesting points. First, not surprisingly, the original study by Ophir and colleagues reported large effect sizes for all three tasks. Second, most of the subsequently published studies report small, non-significant effects (most of the confidence intervals include zero). Third, notwithstanding the small effects and the heterogeneity among studies, there was overall a small but significant positive association between media multitasking and distractibility.
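The overall estimate in a forest plot is a weighted average of the individual study effects. The post does not specify which model the authors fitted, so the following is only a sketch of one standard approach, the DerSimonian-Laird random-effects estimator. It weights each study by the inverse of its sampling variance plus an estimate of the between-study variance (tau squared), which is how heterogeneity among studies widens the pooled interval. The function name and inputs are hypothetical; no data from the meta-analysis are reproduced here.

```python
import math
from typing import List, Tuple


def random_effects_pool(effects: List[float],
                        variances: List[float]) -> Tuple[float, float, float, float]:
    """DerSimonian-Laird random-effects pooling.

    effects:   per-study effect sizes (e.g. Hedges' g or Fisher-z correlations)
    variances: their sampling variances (standard error squared)
    Returns (pooled estimate, 95% CI lower, 95% CI upper, tau^2).
    """
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies with matching variances")
    w = [1 / v for v in variances]                         # fixed-effect weights
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    # Cochran's Q: weighted squared deviations from the fixed-effect mean.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c)                     # between-study variance
    w_re = [1 / (v + tau2) for v in variances]            # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    return pooled, pooled - 1.96 * se, pooled + 1.96 * se, tau2
```

Because the pooled standard error shrinks as studies accumulate, the combined interval can exclude zero even when most individual intervals include it, which is exactly the pattern of a “small but significant” overall association.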

The story does not end there, however.

In a further analysis, Wiradhany and Nieuwenstein examined whether a small-study bias might have been responsible for the small effect uncovered in their meta-analysis. A small-study effect arises when the literature contains relatively many small-N studies reporting large, positive effects and relatively few small-N studies reporting null or negative effects. The typical interpretation is that it reflects researchers’ (and journals’) reluctance to publish small studies that failed to yield a significant result, a reluctance that does not extend to small studies that did yield a significant result.
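One standard way to check for small-study effects, though not necessarily the one Wiradhany and Nieuwenstein used, is Egger’s regression test: each study’s standardized effect (effect divided by its standard error) is regressed on its precision (one over the standard error). Without small-study bias the intercept should be near zero; a clearly positive intercept indicates that less precise studies report systematically larger effects. The sketch below, with a hypothetical function name, computes the intercept and its t statistic by ordinary least squares. Turning t into a p-value (with k - 2 degrees of freedom) would need a t distribution, for example from SciPy, which is left out to keep the block dependency-free.

```python
import math
from typing import List, Tuple


def egger_test(effects: List[float], ses: List[float]) -> Tuple[float, float, float]:
    """Egger's regression test for funnel-plot asymmetry.

    Regresses z_i = effect_i / se_i on precision_i = 1 / se_i by OLS.
    Returns (intercept, SE of intercept, t statistic with k - 2 df).
    """
    k = len(effects)
    if k < 3 or k != len(ses):
        raise ValueError("need at least three studies with matching SEs")
    prec = [1 / s for s in ses]
    z = [e / s for e, s in zip(effects, ses)]
    p_mean = sum(prec) / k
    z_mean = sum(z) / k
    sxx = sum((p - p_mean) ** 2 for p in prec)
    if sxx == 0:
        raise ValueError("studies must differ in precision")
    sxy = sum((p - p_mean) * (zi - z_mean) for p, zi in zip(prec, z))
    slope = sxy / sxx
    intercept = z_mean - slope * p_mean
    resid = [zi - (intercept + slope * p) for p, zi in zip(prec, z)]
    s2 = sum(r ** 2 for r in resid) / (k - 2)             # residual variance
    se_int = math.sqrt(s2 * (1 / k + p_mean ** 2 / sxx))
    t = intercept / se_int if se_int > 0 else float("nan")
    return intercept, se_int, t
```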

This final analysis suggested that a small-study bias may have been present. When that bias was statistically corrected, the resulting estimate of the overall effect size was .001.

Zero, in other words.
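The post does not say which correction produced the estimate of .001. As a hedged illustration of the general idea, the sketch below implements PET (the precision-effect test), one common correction: effect sizes are regressed on their standard errors by weighted least squares (weights 1/SE²), and the intercept estimates the effect that a hypothetical, infinitely precise study (SE = 0) would find. The function name is hypothetical, and this may differ from the method Wiradhany and Nieuwenstein actually used.

```python
from typing import List, Tuple


def pet_estimate(effects: List[float], ses: List[float]) -> Tuple[float, float]:
    """Precision-effect test (PET): WLS regression of effect on SE.

    Weights are 1 / se^2. Returns (intercept, slope): the intercept is the
    bias-corrected effect at SE = 0; the slope captures the small-study trend.
    """
    if len(effects) < 3 or len(effects) != len(ses):
        raise ValueError("need at least three studies with matching SEs")
    w = [1 / s ** 2 for s in ses]
    w_sum = sum(w)
    x_bar = sum(wi * s for wi, s in zip(w, ses)) / w_sum        # weighted mean SE
    y_bar = sum(wi * e for wi, e in zip(w, effects)) / w_sum    # weighted mean effect
    sxx = sum(wi * (s - x_bar) ** 2 for wi, s in zip(w, ses))
    if sxx == 0:
        raise ValueError("studies must differ in standard error")
    sxy = sum(wi * (s - x_bar) * (e - y_bar) for wi, s, e in zip(w, ses, effects))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope
```

When effects grow with their standard errors, as the small-study pattern implies, the slope is positive and the intercept falls below the fixed-effect weighted mean; it can land near zero even when the uncorrected pooled effect is significant.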

Wiradhany and Nieuwenstein conclude that:

“Taken together, the results of our work present reason to question the existence of an association between media multitasking, as defined by the MMI or other questionnaire measures, and distractibility in laboratory tasks of information processing.”

Maybe media multitasking has no adverse long-term consequences. It does not follow, however, that using GitHub and Instagram simultaneously is a good idea: I am not aware of any study that has examined the consequences of such multitasking while it is taking place. It may well be that your GitHub forks and branches would suffer from a simultaneous conversation on Facebook or Twitter.

Psychonomics article focused on in this post:

Wiradhany, W., & Nieuwenstein, M. R. (2017). Cognitive control in media multitaskers: Two replication studies and a meta-analysis. Attention, Perception, & Psychophysics, 79, 2620–2641. DOI: 10.3758/s13414-017-1408-4.