We are born with the neural circuitry required to detect the emotion in facial expressions. By the age of 7 months, human infants clearly distinguish between faces that are angry and others that are happy or neutral. At the same age, effects of parenting are also beginning to emerge, with infants of parents who are particularly sensitive in their interactions showing amplified responding to happy faces. By the time we are adults, we have no difficulty classifying faces rapidly according to their expression. Which of the faces below express a positive emotion? Which expressions are negative?

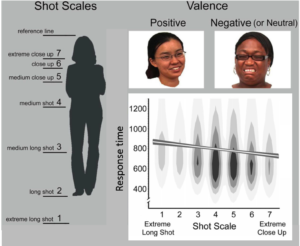

This ability has an obvious boundary condition: if the faces are too small or too crowded, then recognition of affect becomes impossible. Picking a smiling face from a picture full of thousands of people would be next to impossible.

Although this boundary condition is self-evident, it gives rise to several interesting research questions: First, how much does size really matter? At what point is a face large enough to permit interpretation? Second, is interpretation of facial expression impaired by visual background clutter? Do we recognize a person’s smile better when they are standing in an empty field than if they are in front of a supermarket shelf? And third, do we intuitively know and understand our own limitations? Can we make use of this understanding when we can control how faces are presented?

A recent article in the Psychonomic Society’s journal Attention, Perception, & Psychophysics examined those questions. Researchers James Cutting and Kacie Armstrong presented participants with a long sequence of faces and asked them to classify each face by the valence of the depicted expression, which was either positive or negative/neutral.

Two sample stimuli from one of Cutting and Armstrong’s studies are shown in the top right of the figure below. The figure also displays the main experimental manipulation in the left-hand panel. Across stimuli, the scale of each shot was varied from “extreme close up” to “extreme long shot.” You can imagine what each shot looked like by considering the distance from the reference line at the top to the corresponding horizontal line for each shot. Thus, a “close up” would be a shot that includes everything from the reference line down to line 6, and a long shot would extend down to line 2, and so on. As each stimulus was shown at the same size, the different shots corresponded to the size of faces ranging from very large (“extreme close up”) to very small (“extreme long shot”).

The figure above also shows the data from one of Cutting and Armstrong’s studies in the bottom-right panel. The data show people’s response times as a function of the scale of each stimulus. Responding was speeded by around 120 ms (roughly 1/8th of a second) when the face was presented as an extreme close up, compared to a shot from extremely far away. Although 1/8th of a second may not sound like much, it represents a speed-up of responding of around 15%.

Cutting and Armstrong additionally examined the role of visual “clutter” in one of their studies by using movie stills as stimuli. The figure below explains what is meant by clutter and how it was manipulated in the experiment. Participants only viewed the pictures on the left; the right-hand column of computer-processed images was used to compute a numeric index of clutter.

The effects of clutter on response times are shown in the top panels of the next figure. Regardless of clutter, we see the familiar effect of shot scale, but that effect is enhanced considerably when there is visual clutter surrounding the face. For cluttered scenes, scale can speed responding by 200 ms (1/5th of a second) or more. This effect is far from trivial and corresponds to around 25% of the total response time. It has long been known that visual detection is impaired by clutter, and the results below extend this phenomenon to the recognition of facial expressions.

Now let’s consider the bottom panels in the figure below, which report data from another “study” conducted by Cutting and Armstrong. This study is particularly intriguing because the “participants” were ... movies. Yes, movies.

We take it for granted that movies include scenes in which the camera focuses on the actor’s face. Some of those scenes, like the screen test for Greta Garbo below, are formidable in their power of expression and emotion.

[youtube https://www.youtube.com/watch?v=GnalOW-pguE]

However, our familiarity with close ups belies their rather more complex history. When motion pictures were first invented, close ups were unknown, and directors preferred to frame their actors in long shots, similar to the view of a stage in a live theater. Indeed, when D. W. Griffith first used a close-up, Lillian Gish recalled that “The people in the front office got very upset. They came down and said: ‘The public doesn’t pay for the head or the arms or the shoulders of the actor. They want the whole body. Let’s give them their money’s worth.’” Fortunately, Griffith persisted, and a century later we think nothing of viewing close-up scenes in movies.

It turns out that movie directors didn’t stop learning after Griffith introduced the close up: Cutting and Armstrong’s final study, which is reported in the bottom panels of the above figure, demonstrates that movie makers appear to be finely attuned to people’s perceptual ability. For that study, the researchers obtained more than 50,000 stills from six popular movies (Mission: Impossible II; Erin Brockovich; What Women Want; The Social Network; Valentine’s Day; and Inception) and used those to measure the duration of each shot while simultaneously analyzing each still for its scale and clutter.

It is clear from the figure that movie makers adjusted the length of their shots based on scale and also based on clutter. As with the human participants, the effect of scale is greater for cluttered shots than for uncluttered shots. This suggests that when people have control over how they display faces to others, as movie makers do, they fine-tune their presentation to our cognitive limitations. Movies are engineered to synchronize well with our perceptual apparatus, even though most of us are probably unaware of the variables that drive facial expression recognition.

Accordingly, Cutting and Armstrong conclude their work by suggesting “that the craft of popular moviemaking is based on hard-won, practice-forged, psychological principles that have evolved over a long time, fitting stories and their presentation to our cognitive and perceptual capacities. … We as professional psychologists can learn much from studying the structure of [these] products.”

Size matters—and not just in the movies

The Psychonomic Society (Society) is providing information in the Featured Content section of its website as a benefit and service in furtherance of the Society’s nonprofit and tax-exempt status. The Society does not exert editorial control over such materials, and any opinions expressed in the Featured Content articles are solely those of the individual authors and do not necessarily reflect the opinions or policies of the Society. The Society does not guarantee the accuracy of the content contained in the Featured Content portion of the website and specifically disclaims any and all liability for any claims or damages that result from reliance on such content by third parties.