Imagine that you’re in the center of a busy city, watching a pedestrian traffic signal for your cue to walk. The green walking man gives the go-ahead and you start walking. Then a police car’s siren sounds and its emergency lights flash, capturing your attention and sending you back to the sidewalk as the car zooms past.

Attending to the traffic signal is an example of top-down control: it is deliberate and voluntary, and therefore affected by prior knowledge. Attending to the siren and lights is an example of bottom-up control: it is externally driven and involuntary. Earlier research investigated the two separately, but more recent work has shifted to how they may be integrated. By focusing on integration, we can better understand how information is prioritized.

Much of what is known about the allocation of attentional resources is based on results from participants tasked with finding targets in visual arrays (of lines displayed at different angles, for example) using a well-established paradigm common in the field. However, such impoverished arrays lack the richness of real-world environments. Past knowledge and biases likely play a bigger role in attention in the real world than they do in simple visual arrays.

To investigate whether a richer environment would capture attention differently, Pereira and Castelhano (pictured below), in a paper recently published in Psychonomic Bulletin & Review, examined how top-down and bottom-up processes interact to direct attention in real-world scenes. Using eye-tracking, they tested whether attention capture depends on the location of a suddenly presented distractor.

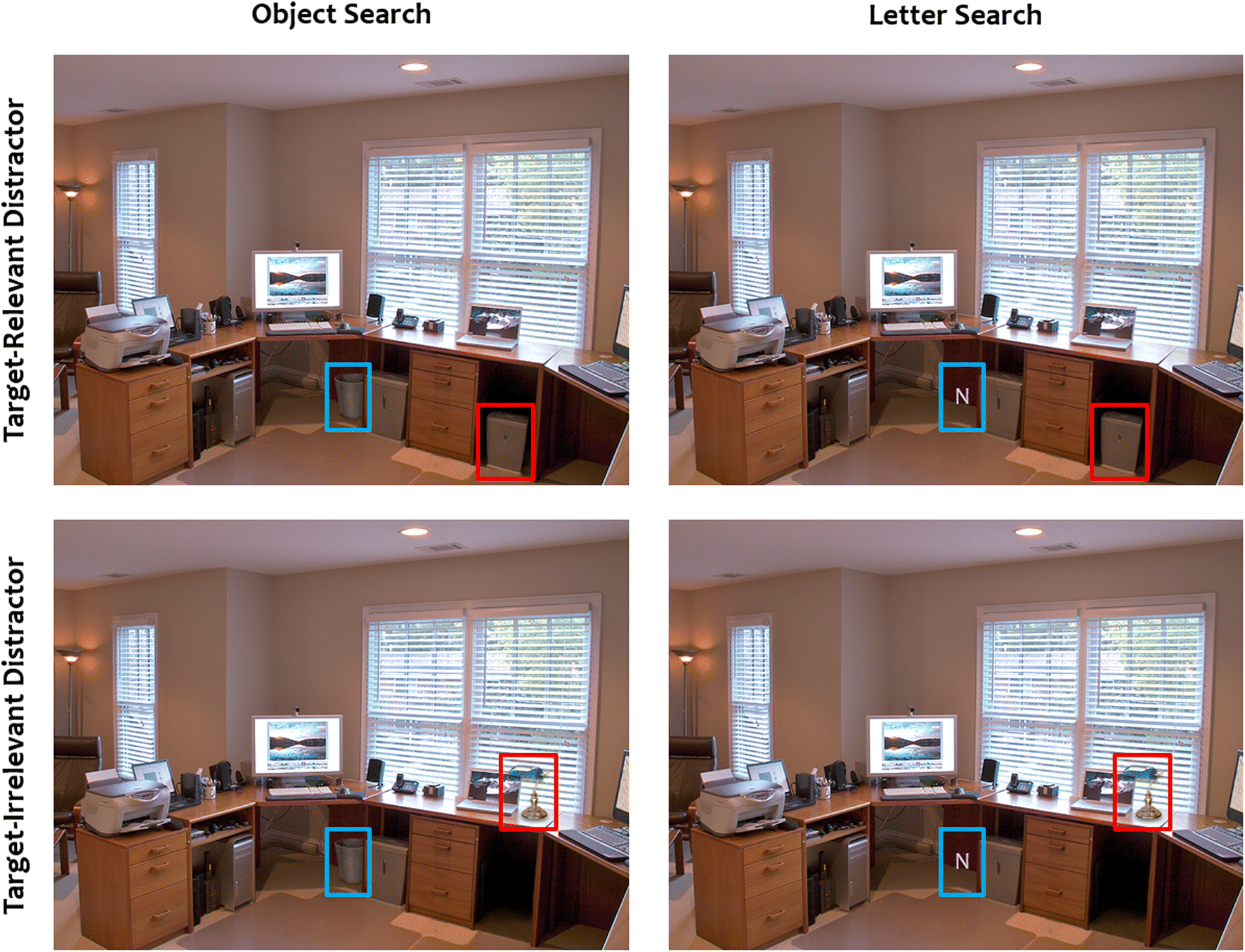

The task was to search for a target. There were two different types of targets: objects and letters. On each trial, for either type of target, a distractor was either absent or present. The distractor, if present, would appear suddenly. There were two types of distractor: target-relevant and target-irrelevant. Relevance refers to whether the distractor object appeared in the same contextual location as the target or not. The figure below shows scene examples for object search on the left and letter search on the right.

On the top panels are target-relevant distractors and on the bottom panels are target-irrelevant distractors. Targets have a blue box around them, and distractors have a red box around them. The letter searches were used as a control because they are not associated with any scene location. The objects, distractor items, and scenes were chosen because of prior knowledge about their strong associations.

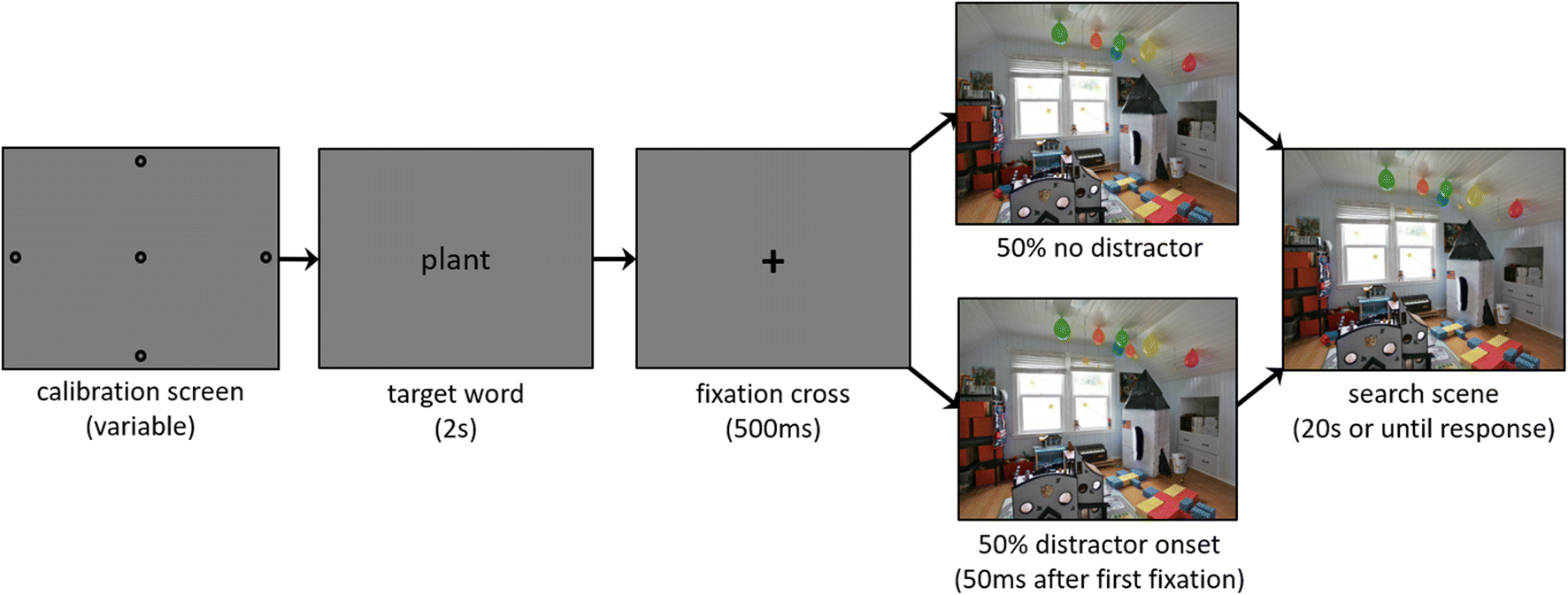

Each trial, shown in the sequence below, involved calibrating the participant’s eye movements with the eye-tracker, followed by the target word (plant in the example), a fixation cross, a scene in which a distractor either appeared or did not, and then the search scene (where the plant would be in the example). Once the participant located the plant, they pressed a button.

On half of the trials (the present condition), a distractor appeared suddenly; on the remaining trials (the absent condition), there was no distractor onset. On present trials, the distractor was either target-relevant or target-irrelevant.

Pereira and Castelhano reasoned that if attention is directed to target-relevant locations when searching for objects, then target-relevant distractors should capture attention more than target-irrelevant distractors. During the letter searches, by contrast, there should be no difference in attention capture, because letters are not associated with locations within the scenes.

They analyzed both visual search and attentional capture performance. The visual search analysis revealed that participants located the targets with 92% accuracy. Participants took longer to find letters than objects, and searches took longer when a distractor was present than when it was absent.

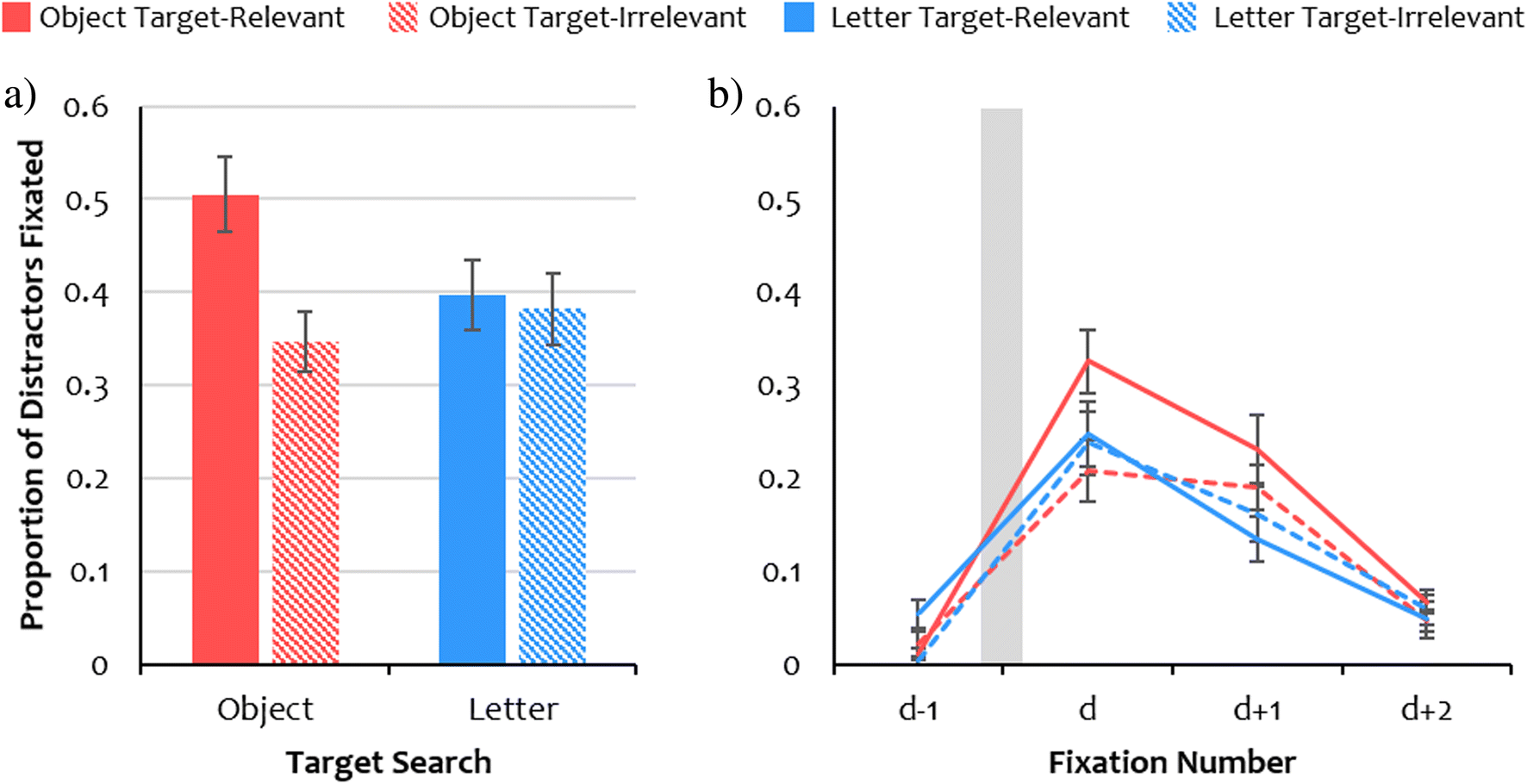

For the attentional capture analysis, they focused on the time before and after the distractor was presented. For target-relevant and target-irrelevant distractor trials, they compared 1) the proportion of fixations on the distractor, 2) the proportion of saccades toward the distractor, and 3) fixation durations.
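As a rough illustration of the first measure, the proportion of fixations on the distractor can be computed by checking whether each fixation lands inside a region around the distractor. The sketch below is not the authors' analysis code: the `Fixation` type, the rectangular region, and all coordinates are hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    x: float  # horizontal gaze position in pixels (hypothetical units)
    y: float  # vertical gaze position in pixels
    duration_ms: float  # how long the eye stayed at this position


def in_region(fix, left, top, right, bottom):
    """Return True if the fixation falls inside the rectangular region."""
    return left <= fix.x <= right and top <= fix.y <= bottom


def proportion_on_distractor(fixations, region):
    """Proportion of fixations that land inside the distractor region."""
    if not fixations:
        return 0.0
    hits = sum(in_region(f, *region) for f in fixations)
    return hits / len(fixations)


# Made-up fixations recorded after distractor onset on a single trial
trial = [
    Fixation(100, 120, 210),
    Fixation(405, 310, 180),  # inside the region defined below
    Fixation(420, 300, 250),  # inside the region defined below
    Fixation(700, 500, 190),
]
distractor_region = (380, 280, 460, 340)  # left, top, right, bottom

print(proportion_on_distractor(trial, distractor_region))  # 0.5
```

Averaging this value separately over target-relevant and target-irrelevant trials would give the kind of comparison described above.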

Panel a in the figure below shows the overall proportion of fixations on the distractor. Panel b shows the proportion by fixation number after distractor onset, with onset marked by the grey vertical bar. When the scene could be used to guide the search, that is, in the object condition, participants fixated target-relevant distractors more than target-irrelevant ones.

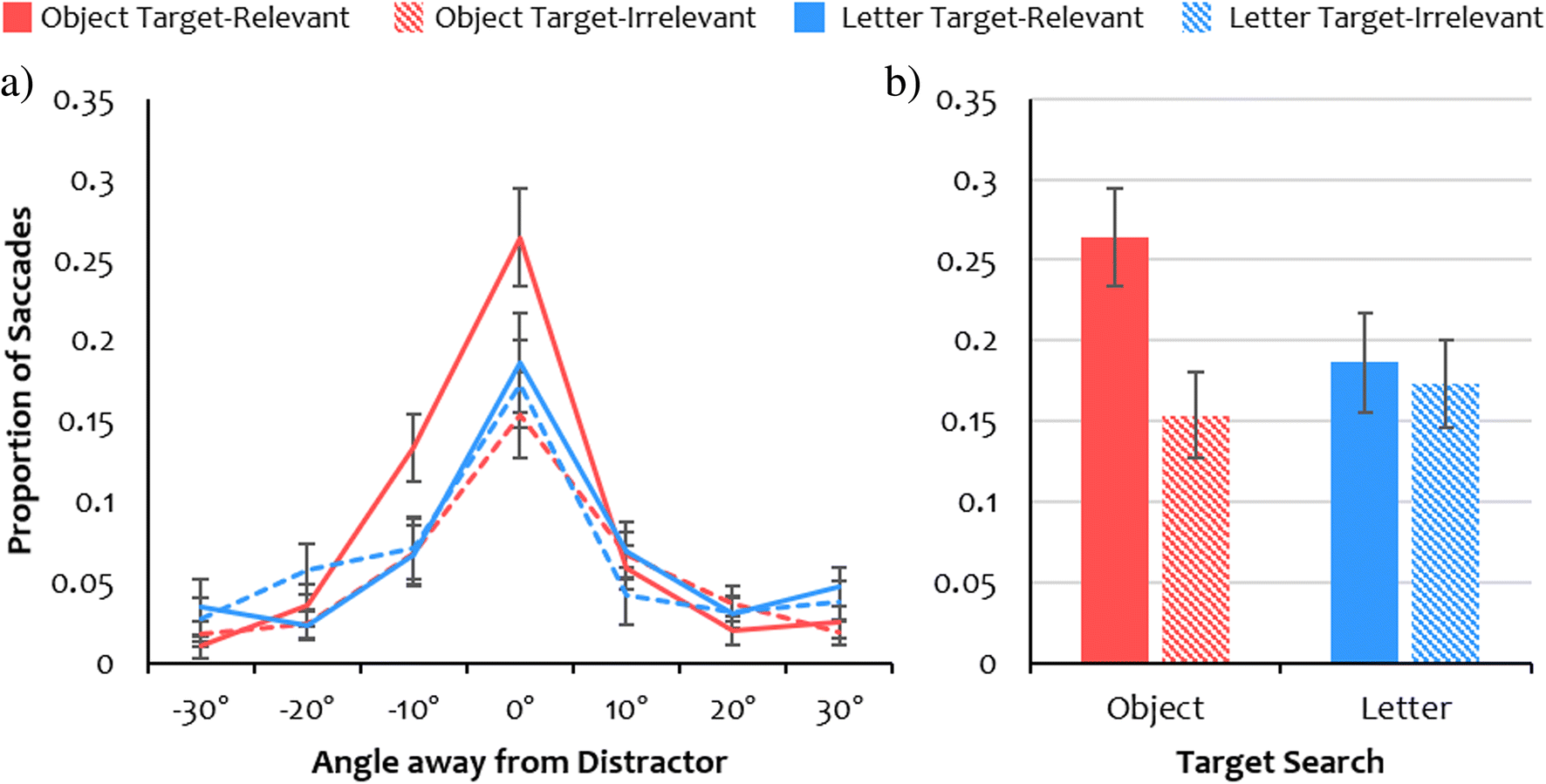

Similarly, in the object condition there were more saccades toward the distractors when the distractors were relevant to the target. Panel a in the figure below shows the proportion of saccades by angle away from the distractor, and panel b shows the proportion directed at the distractor (0°).
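To make the idea of "angle away from the distractor" concrete, here is a minimal sketch of how a saccade's direction could be compared with the direction of the distractor. It is an illustration only, not the authors' method: the function name, the coordinate convention, and the example points are assumptions.

```python
import math


def angular_deviation(start, end, distractor):
    """Absolute angle in degrees (0 to 180) between the saccade direction
    and the direction from the saccade's start point to the distractor.

    Each argument is a hypothetical (x, y) screen position.
    """
    saccade_angle = math.atan2(end[1] - start[1], end[0] - start[0])
    distractor_angle = math.atan2(
        distractor[1] - start[1], distractor[0] - start[0]
    )
    diff = math.degrees(saccade_angle - distractor_angle)
    # Wrap the difference into [-180, 180) before taking the magnitude
    diff = (diff + 180) % 360 - 180
    return abs(diff)


start = (0, 0)
distractor = (20, 0)

print(angular_deviation(start, (10, 0), distractor))   # straight at it: 0.0
print(angular_deviation(start, (0, 10), distractor))   # perpendicular: 90.0
print(angular_deviation(start, (-10, 0), distractor))  # directly away: 180.0
```

A deviation of 0° corresponds to a saccade aimed directly at the distractor, and larger values correspond to saccades aimed farther away from it.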

These findings suggest that the capture of attention is influenced by prior knowledge of the target and its likely location. The authors concluded that

> …we demonstrate that the influence of context on the dynamic and integrative nature of attention is much broader than previously thought, furthering our understanding of these mechanisms in the real world and highlighting the necessity of studying attention through an integrative lens that ties together sensory information, current goals, prior knowledge, and experience.

This research builds on the findings from well-controlled laboratory experiments designed to measure attention capture on visual arrays and takes a step toward a better understanding of how attention is captured in the real world.

Psychonomics article focused on in this post:

Pereira, E. J., & Castelhano, M. S. (2019). Attentional capture is contingent on scene region: Using surface guidance framework to explore attentional mechanisms during search. Psychonomic Bulletin & Review, 26, 1273–1281. https://doi.org/10.3758/s13423-019-01610-z