Spoilers for Everything Everywhere All at Once: In the best movie of the year, protagonist Evelyn Wang (portrayed by the inimitable Michelle Yeoh) navigates, among many other things, her taxes, complicated family dynamics, at least one raccoon, intense fight sequences (both kung fu and metaphorical in nature), and some unconventional uses of professional accolades, all in search of an answer to an everlasting question: In the midst of all this noise, what actually matters?

In some sense, this is a question that scientists constantly revisit (though perhaps with less of a brouhaha) each time they approach an existing literature. How do new discoveries align with previous ones? How do they contrast with studies using similar methods or asking similar questions? Which aspects are more likely to home in on some underlying reality, and what should be disregarded as noise? When I describe the scientific process to my students, I tell them that progress often happens slowly, through tiny forward steps, with each study carefully designed to isolate the Truth (“with a capital T,” I occasionally emphasize).

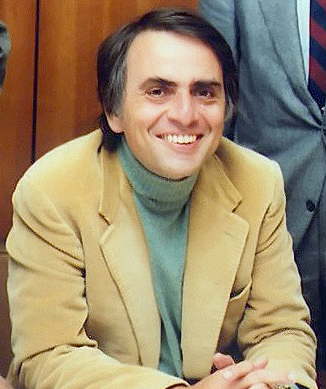

But sometimes the process of discovery isn’t that slow, and a big, extraordinary new claim enters the field. Carl Sagan perfectly described the ensuing responsibility of scientists: Extraordinary claims require extraordinary evidence. In a recent commentary published in Learning & Behavior, appropriately titled “Extraordinary claims, extraordinary evidence? A discussion,” a veritable super-team of cognitive scientists (Richard M. Shiffrin, Dora Matzke, Jonathon D. Crystal, E.-J. Wagenmakers, Suyog H. Chandramouli, Joachim Vandekerckhove, Marco Zorzi, Richard D. Morey, and Mary C. Murphy) discusses how this mantra might be implemented within journal publication standards and what the implications of such criteria might be.

But translating Sagan’s mantra into real criteria immediately yields difficult questions. What, exactly, constitutes “extraordinary” evidence? How should journal reviewers decide what differentiates ordinary claims from extraordinary ones, when the definitions of both are completely subjective? And how strict or lenient should scientist gatekeepers be in deciding what to endorse and what to ignore?

The authors immediately identify a dangerous consequence of setting strict criteria: if standards for publishing extraordinary ideas are too difficult to meet, we’ll end up locking an entire scientific community into “what is presently thought to be known.” In other words, overly strict standards severely constrain our capacity to consider alternative theories and keep us stuck with theories that are too limited or just plain wrong.

On the other hand, setting liberal criteria could lead to a literature rife with noise, where real findings are hidden among underpowered studies, theoretical misrepresentations, inappropriate statistics, and the countless other ways that lapses in scientific rigor can obscure real discoveries. There’s perhaps even an argument that such liberal criteria contributed to the replication crisis.

Some of the authors argue that, with time, this noise would eventually wash out, leaving the important findings to inspire follow-up studies. After all, part of every scientist’s training involves finding a common thread within a sea of findings in order to synthesize new hypotheses, design new experiments, and generally help move the field forward. Adding to this optimism, we now also have powerful tools, such as computational models, to simulate our theories and then refine them to better reflect reality. And yet, as some of the other authors point out, it can take a long time for noise to wash out of a literature, and in that time even more noise can accumulate, until discerning real discoveries from spurious results becomes almost impossible. Even powerful computational tools might not help if they’re simply simulating phenomena that may not actually exist. As E.-J. Wagenmakers writes, “…it would be like taking a Ferrari and driving it straight into a swamp.”

Time for another spoiler: The authors, unsurprisingly, do not find a concrete solution to these very complex issues in the span of a mere 11 pages of commentary. But they do float several promising ideas, one of which is to try to make the publication system better reflect the ways that scientists actually evaluate evidence. That evaluation can broadly be divided into two approaches. From one angle, reviewers often evaluate whether the conclusions of a study follow logically from the study’s results. With that goal in mind, journal reviewers scrutinize methodological details, experimental design, statistical approaches, and other fine-grained aspects of “everyday science.” From the other angle, scientists also evaluate whether the results of multiple studies can adequately be synthesized into a body of extraordinary evidence in support of an extraordinary claim.

Keeping in mind that both of these approaches are essential aspects of the scientific process, the authors play with the idea of a tiered approach to publication, in which papers focused on describing the immediate outcomes of studies are separated from those aiming to propose more radical, or at least more extraordinary, claims. Introducing some separation between “basic results” papers and “claims/speculation” papers could allow for greater flexibility in setting publication criteria, and that flexibility could in turn better reflect the different approaches scientists take when they evaluate new papers.

But even this intriguing proposal, and really any proposal for setting publication criteria, will open new avenues for unnecessary bias to infiltrate the publication process. For all the nuance we may try to build into publishing, it is still a process that exists in the context of some powerful, and unfair, cultural pressures. As Dora Matzke points out, even if science is “ultimately self-correcting…that self-correction is often delayed by the current academic structures.” Namely, movement up the academic career ladder is largely predicated on publication record (the old, awful phrase “publish or perish” comes to mind). But publishing culture is notoriously biased against studies showing null results and tends to favor papers that make strong claims, which further incentivizes scientists to overhype their findings.

Moreover, as Mary Murphy rightly observes, the biases already built into publication standards tend to weigh even more heavily against scientists from underrepresented backgrounds. These biases also cannot simply be resolved by having more individuals from underrepresented groups serve as reviewers (a task that is, by the way, somewhat compulsory despite almost never being compensated), because these problems are “rooted in sociocultural and historical stereotypes and representations of science.” In the face of such deeply entrenched biases, how can we be sure that publication criteria designed to evaluate the extraordinary evidence for extraordinary claims aren’t actually creating more problems?

As a scientist at the very cusp of a research career, but also as a queer woman of color, I find that confronting the sheer amount of noise one must navigate, both within a research literature and within external cultural factors, can feel daunting (and that’s putting it mildly). I, even more unsurprisingly, also do not have a concrete solution, nor do I have an extraordinary claim of my own about what else needs to change. Maybe all that is left to do is to consider things carefully, form new hypotheses about how to move forward, and then test them. Those are, after all, the things that scientists do best.

Featured Psychonomic Society article

Richard M. Shiffrin, Dora Matzke, Jonathon D. Crystal, E.-J. Wagenmakers, Suyog H. Chandramouli, Joachim Vandekerckhove, Marco Zorzi, Richard D. Morey & Mary C. Murphy (2021). Extraordinary claims, extraordinary evidence? A discussion. Learning & Behavior, 49, 265–275. https://doi.org/10.3758/s13420-021-00474-5