So much stuff

If there was ever any doubt whether there could be too much of a good thing in science, we are currently witnessing a very clear demonstration of it—both in science in general, and in the behavioral sciences in particular. Stating the obvious: There is just so much COVID-19 related information emerging every single day.

And I’m not only referring to peer-reviewed articles and the many, many preprints. I’m also referring to

- already published data sets,

- studies in progress,

- calls for collaborating in emerging studies,

- reports from governments, think tanks, and NGOs,

- newspaper articles and opinion pieces,

- static and interactive visualizations of results and models,

- blog posts by researchers, policymakers, and others,

- videos,

- webinars organized by professional scientific associations and other institutions,

- discussions in publicly accessible mailing lists of professional societies,

- twitter threads on new findings and their critical discussion, and

- more things I wasn’t even considering. (Please add your ideas on further information sources in the comments below. Thank you very much!)

Boosting COVID-19 related behavioral science by feeding and consulting an eclectic knowledge base

Imagine you had access to an up-to-date knowledge base that would cover all these eclectic information sources as they emerge. Wouldn’t that be really helpful? Instead, you are faced with the current jungle of information.

To deal with the complex matter that is COVID-19, we argue that researchers, policymakers, and other stakeholders need a curated (even if not yet fully vetted) overview of this emerging knowledge. The reason we think that this knowledge base should be eclectic, that is, include more than peer-reviewed articles and preprints, is this: To avoid many of the pitfalls of behavioral-science (and other) research on COVID-19 (see Ulrike Hahn’s previous post for an overview), researchers, policymakers, and other stakeholders need to know “what’s out there,” be it the Good, the Bad, or the Ugly.

Here are a few use cases to ponder:

- Researchers, policymakers, and journalists need help in critically assessing the emerging research (as published in preprints or other non-peer-reviewed formats). It seems that most of the (publicly accessible) critical discussion of emerging research is happening on twitter, in blog posts, and in other more ad-hoc outlets. Even preprints of replies to already published research (or preprints) are too slow for this or do not appear often enough.

- To avoid duplication of effort, researchers need to know which studies have already been run, are currently running, are being planned, or are looking for more collaborators.

- When planning new studies, researchers should profit from the good, innovative ideas floating around and learn how to avoid mistakes that others have already made. Again, most such ideas and discussions currently emerge on twitter and other social media platforms, so only considering preprints, let alone published papers, is not enough to stay up to date.

- Meta researchers need to know what other initiatives are already running (e.g., tracking the fate of preprints), so they can avoid duplication or join efforts.

All these use cases emphasize how useful and important it is to harness the collective knowledge and evaluation of emerging research “out there,” which is dispersed across various fast-moving outlets. For example, if the current pandemic has shown one thing about twitter, it is that among all the “I’m stoked to announce that I’ve published this paper”-tweets and semi-serious meta-science twitter polls, there is seriously useful information for advancing behavioral-science research on COVID-19 (and beyond) to be found on academic twitter.

Of course, there are many more ingredients for doing proper science without the drag beyond such a knowledge base (see Ulrike Hahn’s previous post for more ideas on how to coordinate, speed up and consolidate some of the knowledge discussed above), but I would argue that such an eclectic knowledge base will be useful not just for the use cases sketched above, but also for other, yet-to-emerge cases.

There are already a number of (semi-)automated services out there aiming to help make sense of the massive amount of research information produced about COVID-19. To name just a few:

- covidscholar: “COVID-19 literature search powered by advanced NLP algorithms.”

- dimensions.ai: “a dynamic, easy-to-use, linked research data platform that re-imagines the way research can be discovered, accessed, and analyzed”

- CORD-19: “free resource of more than 63,000 scholarly articles about the novel coronavirus for use by the global research community”

- SciSight: “SciSight is a tool for exploring the evolving network of science in the COVID-19 Open Research Dataset”

- Semantic Map: “automatically detects relations between texts, maps texts to semantically related keywords and then generates a visual representation of data”

Although clearly invaluable to the research community in general, these (and other similar) services do not (yet) support behavioral-science research on COVID-19 along the lines outlined above. They either

- do not cover behavioral-science research in any meaningful way (we are currently trying to rectify this) and/or

- focus on peer-reviewed articles and preprints, excluding the discussion and review part of emerging research.

Therefore, we see our vision of an eclectic knowledge base as complementary to these services.

From ideas to a proof of concept

Over the last few weeks we have been putting our ideas on such a knowledge base into practice (see also www.scibeh.org). Now we’re, of course, stoked to showcase here an emerging and growing proof of concept of such an eclectic knowledge base on COVID-19 related research and information, focusing on the behavioral sciences, but also including other topics that seem pertinent to properly understanding the issues at hand.

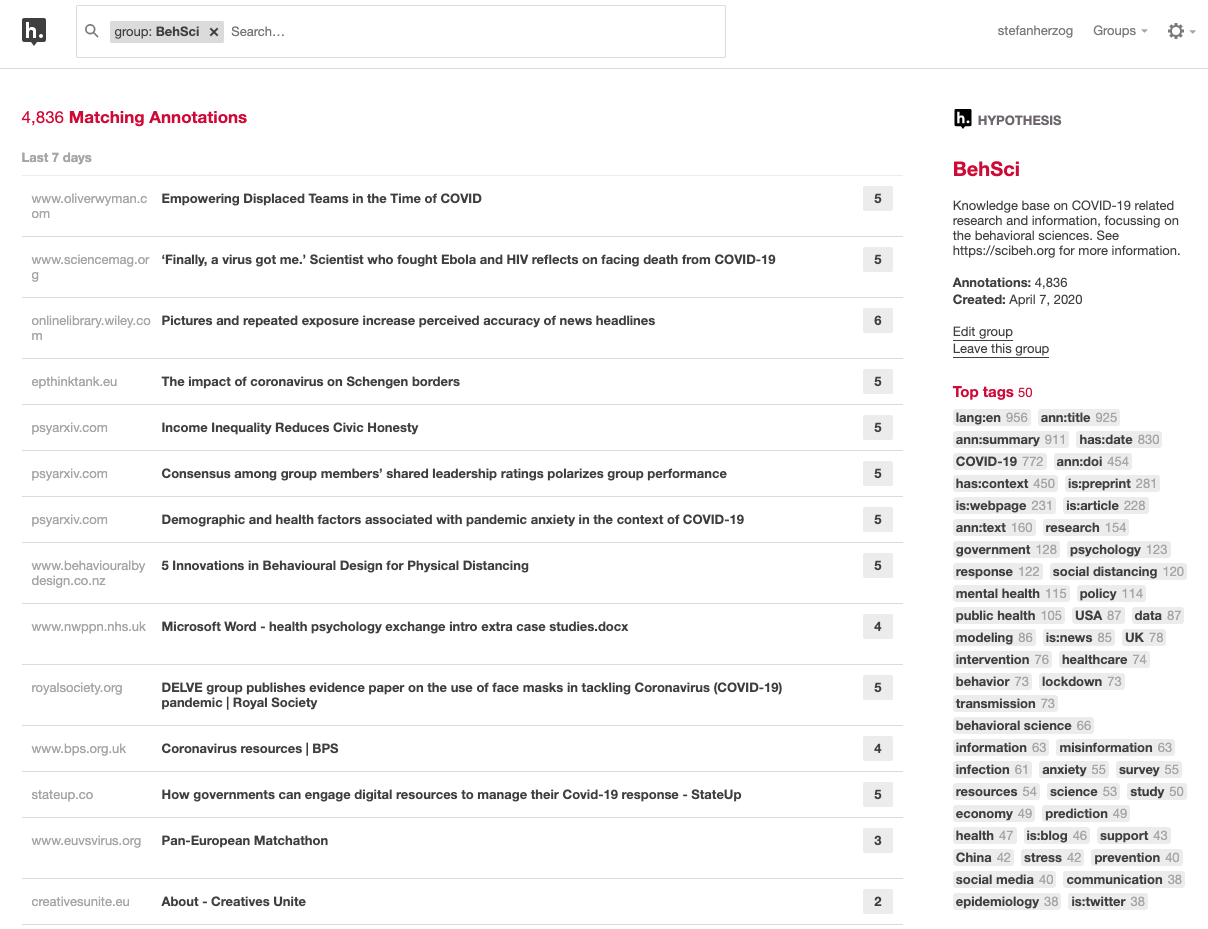

The knowledge base exists as a collection of annotations created using hypothes.is (see the screenshot in Figure 1), a “new effort to implement an old idea: A conversation layer over the entire web that works everywhere, without needing implementation by any underlying site” (see here for the basics of annotating with hypothes.is). Please take a moment to visit the knowledge base and explore what’s there. You can click on the tags on the right side to filter annotations or use the search bar at the top; see here for more information on how to search on hypothes.is.

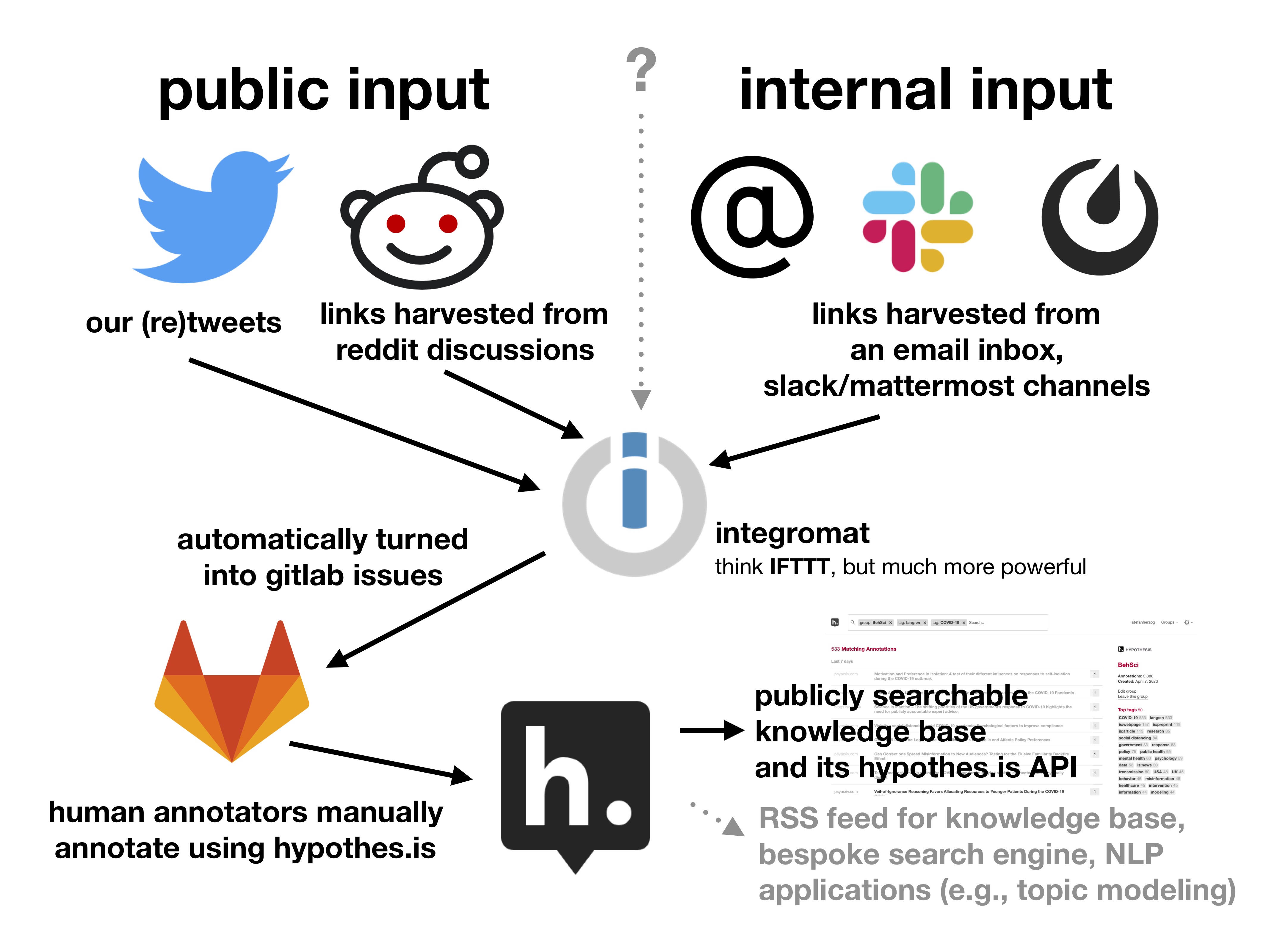

How do items get into the knowledge base? Figure 2 summarizes what input channels currently feed the knowledge base.

Using an automation service (here: integromat) we automatically collect links (URLs) from various sources, such as:

- the tweets and retweets of our twitter account @SciBeh;

- links showing up in any post or comment in the three subreddits we created (where you can ask questions and discuss research; see Ulrike Hahn’s previous post and scibeh.org for more on these subreddits);

- tweets from the PsyArXiv twitter bot (not illustrated in Figure 2); and

- links gathered via email and channels in our slack and mattermost teams.

Note that this is just the start and we are considering adding further input channels. Please let us know your ideas on what we should add in the comments below.

The automation service then turns each item into an issue in a gitlab project hosted at one of our institutions. A group of dedicated volunteers (see the Members section in the knowledge base) then goes through the issues in this annotation inbox and annotates them. To our volunteers: Thank you so so much for your invaluable work!
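To make the harvesting step more concrete, here is a minimal sketch of what it amounts to: pull the links out of a tweet, post, or message, drop duplicates, and turn each link into one item for the annotation inbox. This is only an illustration, not our actual setup (which runs inside the automation service). The function names, the URL pattern, and the `to-annotate` label are assumptions made up for this example.

```python
import re

# Rough URL pattern: good enough for a sketch, not a full RFC 3986 parser.
URL_PATTERN = re.compile(r"https?://[^\s<>\"')\]]+")


def extract_links(text):
    """Return the unique URLs found in a piece of text, in order of appearance."""
    seen = []
    for match in URL_PATTERN.findall(text):
        url = match.rstrip(".,;:!?")  # drop trailing sentence punctuation
        if url not in seen:
            seen.append(url)
    return seen


def to_inbox_items(text, source):
    """Turn each link in a tweet, post, or message into one inbox item.

    The keys mirror the title/description/labels fields that issue trackers
    commonly accept; the label name is hypothetical.
    """
    return [
        {
            "title": url,
            "description": f"Harvested from: {source}",
            "labels": ["to-annotate"],
        }
        for url in extract_links(text)
    ]


post = (
    "Worth a look: https://example.org/policy-report, and the discussion at "
    "https://example.org/thread. See also https://example.org/policy-report"
)
for item in to_inbox_items(post, source="r/BehSciMeta"):
    print(item["title"], "|", item["description"])
```

Recording the source alongside each link is what later allows an annotation to point back to the context in which the item was harvested.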

Once annotated, an item will immediately show up in the knowledge base. Currently the hypothes.is group behsci underlying the knowledge base is a “restricted group”, which means that only our volunteers can add annotations, but anybody can see the annotations and work with their data (more on the latter below).

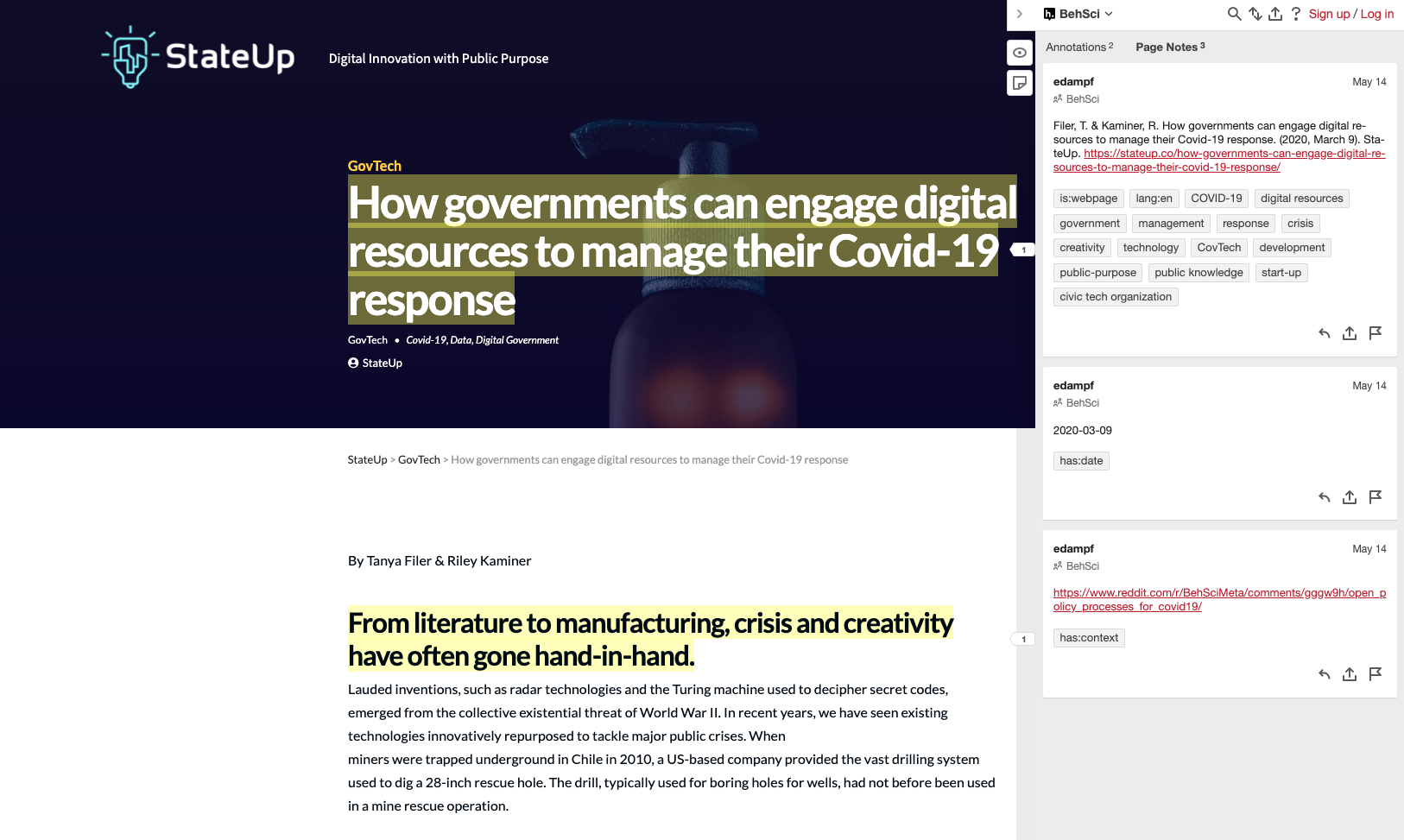

Here’s an example: After the post “Open policy processes for COVID-19” appeared on our subreddit r/BehSciMeta, the relevant links in that post got annotated. One of those links is https://stateup.co/how-governments-can-engage-digital-resources-to-manage-their-covid-19-response, which got annotated like this (click on the item to see all annotations) and looks like that in the original context of the website (click “show all notes” to see all annotations; see Figure 3 for a corresponding screenshot). Note that one of the annotations indicates the context in which the item was harvested: it leads you back to the original reddit post, where you can explore that context.

When you search the knowledge base for, say, “government” and “digital”, this item will be part of the result list.

How can I contribute to the knowledge base?

First and foremost, we need your input: Whenever you see something that we should consider adding to the knowledge base, please

- mention our @SciBeh twitter handle, either when you are tweeting or when you are commenting on twitter; or

- post or comment in our subreddits and make sure to include the link(s).

It couldn’t be easier, could it now? Mention @SciBeh in a tweet or post something in the subreddits and you have suggested an item for the knowledge base.

We are also looking for more volunteers to join our annotation team. If you can see yourself annotating websites using hypothes.is for a couple of hours a week (following a simple protocol), please get in contact with us! We’d love to have you on board!

How can I do more with the knowledge base?

In addition to searching the knowledge base online, you can also export the annotations using this tool or access them via the hypothes.is API. Please don’t hesitate to contact us (see scibeh.org) if you have any questions.
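For those who want to try the API route, here is a minimal sketch in Python. It assumes the public search endpoint of the hypothes.is API and its `group`, `any`, `tag`, and `limit` query parameters; please check the API documentation before relying on any of this. `YOUR_GROUP_ID` is a placeholder (the group id is not spelled out in this post), and the helper names are made up for the example. The sample response at the bottom is hand-made so that the sketch runs without network access.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

API_SEARCH_URL = "https://api.hypothes.is/api/search"
GROUP_ID = "YOUR_GROUP_ID"  # placeholder: the real group id is not given in this post


def build_search_url(group_id, any_terms=None, tag=None, limit=50):
    """Build a search URL for the public annotations of one group."""
    params = {"group": group_id, "limit": limit}
    if any_terms:
        params["any"] = any_terms  # free-text search across annotation fields
    if tag:
        params["tag"] = tag  # restrict results to annotations with this tag
    return f"{API_SEARCH_URL}?{urlencode(params)}"


def summarize(response):
    """Reduce a search response to (uri, tags, text) tuples."""
    return [
        (row.get("uri", ""), row.get("tags", []), row.get("text", ""))
        for row in response.get("rows", [])
    ]


def fetch(url):
    """Perform the request; needs network access and a valid group id."""
    with urlopen(url) as reply:
        return json.load(reply)


# Offline demonstration with a hand-made response of the assumed shape.
sample = {
    "total": 1,
    "rows": [{"uri": "https://example.org/a", "tags": ["policy"], "text": "A note"}],
}
print(build_search_url(GROUP_ID, any_terms="government digital"))
print(summarize(sample))
```

With a real group id, `summarize(fetch(build_search_url(GROUP_ID, any_terms="government digital")))` would be the scripted counterpart of typing “government” and “digital” into the search bar.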

Where to go from here?

As repeatedly discussed throughout this post, the knowledge base is in its infancy and there are many directions to go from here (see the grey parts in Figure 2), e.g.

- including more inputs (e.g., preprint bots other than the PsyArXiv twitter bot);

- adding more ways to access and use the knowledge base, e.g., making the annotations available through a more standard search engine (e.g., using Vespa or Elasticsearch);

- creating an RSS feed that lists new additions to the knowledge base, which can then be tracked by an RSS reader app or used in automated applications (i.e., a low-tech way of accessing the knowledge base compared to accessing it via the hypothes.is API);

- applying natural language processing to, for example, discover emerging topics (e.g., using structural topic models or dynamic exploratory graph analysis);

and much more.
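To give a flavor of how small some of these steps could be, here is a rough sketch of the RSS idea using only the Python standard library. Nothing like this exists yet. The record fields (`title`, `uri`, `text`, `tags`, `created`) are assumptions about what an exported annotation might look like, and the feed title and link are placeholders.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def annotations_to_rss(annotations, feed_title, feed_link):
    """Render annotation records as an RSS 2.0 document (returned as a string)."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = feed_title
    ET.SubElement(channel, "link").text = feed_link
    ET.SubElement(channel, "description").text = "New additions to the knowledge base"
    # Newest first, as feed readers usually expect.
    for note in sorted(annotations, key=lambda n: n["created"], reverse=True):
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = note["title"]
        ET.SubElement(item, "link").text = note["uri"]
        ET.SubElement(item, "description").text = note["text"]
        ET.SubElement(item, "pubDate").text = format_datetime(note["created"])
        for tag in note["tags"]:
            ET.SubElement(item, "category").text = tag
    return ET.tostring(rss, encoding="unicode")


# Hypothetical records standing in for exported annotations.
notes = [
    {
        "title": "Older item",
        "uri": "https://example.org/old",
        "text": "First note",
        "tags": ["policy"],
        "created": datetime(2020, 4, 1, tzinfo=timezone.utc),
    },
    {
        "title": "Newer item",
        "uri": "https://example.org/new",
        "text": "Second note",
        "tags": ["preprint"],
        "created": datetime(2020, 4, 2, tzinfo=timezone.utc),
    },
]
feed = annotations_to_rss(notes, "Knowledge base updates", "https://example.org/feed")
print(feed)
```

Mapping annotation tags to RSS categories would let a feed reader filter new additions by topic.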

If you have ideas or want to work with us in implementing the above or other ideas, please let us know!