Signing a Swedish sound beats catching a ball: linguistic processing in sign language and working memory performance

Imagine that you are discussing a familiar topic with a friend in a quiet room. If you are a neurotypical individual, understanding them and knowing what to say next may seem effortless. Now imagine that you are having the same conversation, but on a familiar drive to a local park. Perhaps it still seems effortless.

Language processing can seem so effortless that we sometimes take a chat for granted even when we should not.

One situation in which the cognition of language processing has even impacted policy decisions involves the use of cell phones while driving.

Texting is understandably banned in 46 of the 50 U.S. states because it requires alternating visual attention between the road and a cell phone, but the situation is less straightforward for calls taken on handheld and hands-free cell phones. Many states have yet to seriously legislate against hands-free cell phone use, even though there is now fairly clear evidence that cell phone use while driving can be dangerous even with a hands-free set. We ran a post on this issue recently.

So why do most drivers think that they are not affected by talking on a cell phone? It is taken as a given that spoken language processing does not interfere with visuospatial processing precisely because it is easy. A lifetime of experience has made language processing easy, which frees up working memory and other cognitive processes for other tasks.

Now imagine that you are a speaker of a signed language. How easily can you follow your passenger and drive? Signed languages are full-fledged languages that represent their “sounds” in space and in time, with words that can be characterized by hand shape, hand position, and movement. The video below teaches a first lesson in American Sign Language:

[youtube https://www.youtube.com/watch?v=owXsDIKq4lk]

Intuitively, speakers of signed languages must perform language and visuospatial processing at the same time. Because speakers of signed languages are accustomed to processing language visually, visual processing of linguistic information may be less taxing, potentially freeing up cognitive capacity for other tasks. That is, preexisting representations might “compress” linguistic information into smaller chunks, which would free up processing resources in working memory. Preexisting representations could include semantic representations (words in the speakers’ vocabulary) or phonological representations (sounds in their language).

This is exactly what Mary Rudner and colleagues tested in a recent study published in the Psychonomic Society journal Memory & Cognition. The researchers asked whether deaf signers, hearing signers, and non-signers have different amounts of working memory available when processing different types of visual information, from words in their language to nonlinguistic visual events. If preexisting representations help, signers should remember more linguistic stimuli than non-signers.

To understand the procedure used by Rudner and colleagues, we need to explain the “n-back task”, which is one commonly used way of testing working memory capacity. In this task, items are presented in a sequence on the screen and participants have to report whether the current item is the same as or different from the item presented n positions earlier. So, in a 3-back task, if participants saw a sequence like “A B C A” and were asked to compare the last item (A) to the one three items back (A), they would answer “yes”, but they would answer “no” to a sequence like “A B C B” because B is different from A.
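For readers who think in code, the scoring rule of the n-back task can be sketched in a few lines of Python. This is only an illustration of the task logic described above, not the software used in the study; the function name `n_back_answers` is made up for this post.

```python
def n_back_answers(sequence, n):
    """Return the correct "yes"/"no" answer for every item that has an item n positions before it."""
    answers = []
    # The first n items have nothing n positions back, so no answer is expected for them.
    for i in range(n, len(sequence)):
        # "yes" when the current item matches the one presented n positions earlier
        answers.append("yes" if sequence[i] == sequence[i - n] else "no")
    return answers


# The two 3-back examples from the text (hypothetical letter stimuli)
print(n_back_answers(list("ABCA"), 3))  # ['yes']
print(n_back_answers(list("ABCB"), 3))  # ['no']
```

In a real experiment the comparison is made by the participant, of course; a function like this would only supply the answer key against which responses are scored.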

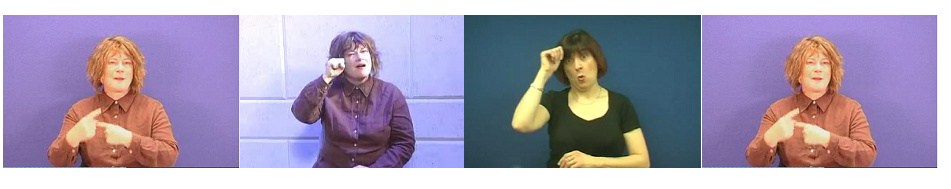

In the experiment by Rudner and colleagues, all of the items were videos, which could show signs from British Sign Language (BSL), signs from Swedish Sign Language, nonsigns that were like signs in British Sign Language but made up of different “sounds”, or non-linguistic scenes of people catching balls. One sequence in a 3-back task might look like the one below.

If linguistic experience frees up resources for processing of visual information, then both deaf and hearing speakers of BSL should do best at remembering BSL stimuli. Similarly, if they can remember the phonological information in a word from another language that they do not speak (Swedish Sign Language), then their memory for those words should be better than their memory for a nonsign “pseudo-word” which does not have sounds from BSL. Non-signers, by contrast, should remember signs from either language no better than nonsigns, and should find all of them harder to remember than the signers do.

The results of the study were intriguing. Rudner and colleagues found that speakers of BSL did better at remembering whether a BSL word was n trials back, but that despite knowing the sounds of words in Swedish Sign Language, they did not remember those words any better than nonsigns. That is, phonological information alone did not confer an advantage over a gesture fragment that did not contain any meaningful information.

The study also found that signers tended to outperform non-signers on nearly every type of stimulus except for the nonsigns, suggesting that speakers of signed languages process linguistic information differently. A lifetime of experience with a signed language seems to make sign and sign-like material easier to remember. This suggests that linguistic experience in general helps to compress information into smaller bits, freeing up working memory for other tasks, in this case the n-back recall of visual stimuli.

The study also revealed interesting differences between the hearing BSL and the deaf BSL speakers. The hearing BSL speakers also grew up listening to a spoken language, making them “bilingual” and sensitive to the phonological representations in speech. Deaf signers outperformed non-signers on both nonsigns and Swedish Sign Language, but hearing signers did not. This suggests that when hearing speakers of BSL encountered something clearly linguistic but unfamiliar, they engaged a different strategy that was more similar to hearing non-signers’ strategies.

Altogether, the results of the study show that having the tools to process what can look like “just” visual stimuli to a non-signing person can actually have profound cognitive advantages. In fact, it can free up working memory resources to process other visual information.

This does not mean one should necessarily have a conversation in sign language while driving. But it does mean that one is better off conversing in English with a passenger than trying to encode and remember a recording of backward speech while driving.

Focus article of this post:

Rudner, M., Orfanidou, E., Cardin, V., Capek, C. M., Woll, B., & Rönnberg, J. (2016). Preexisting semantic representation improves working memory performance in the visuospatial domain. Memory & Cognition, doi:10.3758/s13421-016-0585-z
