I recently had the pleasure of meeting with Allie Sinclair to chat about her latest paper published in Psychonomic Bulletin & Review.

True or False?

- Diamonds are formed when coal undergoes high pressure.

- Coffee reduces the influence of alcohol.

- Sunflowers turn to track the sun across the sky.

All are false.

If you happened to say true to any of the statements above, and if you have high right-wing authoritarian attitudes (i.e., a strong preference for order and traditional social norms), then you will be less likely to change your answers when asked again, even after being told the right answers. That is the essence of the findings from research conducted by Sinclair and colleagues. Find out more by listening to the interview and reading the transcript below.

Interview

Transcription

Myers: You’re listening to All Things Cognition, a Psychonomic Society podcast. Now here is your host, Laura Mickes.

Mickes: I’m speaking with Allie Sinclair, who is currently a graduate student in the Center for Cognitive Neuroscience at Duke University, about her paper recently published in Psychonomic Bulletin & Review.

Hi Allie. Thanks so much for talking with me.

Sinclair: Hi Laura. Thanks for having me.

Mickes: What’s the title of the paper?

Sinclair: The title of the paper is “Closed-Minded Cognition: Right-Wing Authoritarianism is Negatively Related to Belief Updating Following Prediction Error.”

Mickes: You wrote this with a couple of people. Who are your co-authors?

Sinclair: My co-authors [authors pictured below] are Matthew Stanley, who’s another graduate student finishing up his degree at Duke. And Paul Seli, who is a new assistant professor in the department. Actually, neither of them are in my lab or my mentors, but it was a side project that grew out of a wonderful conversation that we had where we were kind of just talking about the state of the world and how we can understand it in terms of cognition and social psychology.

So it was sort of a conversation that ran wild and then we ended up running some studies again.

Mickes: This conversation … did it have alcohol involved?

Sinclair: It did. [laughs]

It actually started during a class discussion for our cognitive psychology class, where we talked about the effect of misinformation in society right now and how we can sort of reconcile these competing understandings of the world. Because as cognitive psychologists, we often parse things up into really neat and tidy theories of learning and computational models. Um, and then when you actually look at what people do in the real world, that completely falls apart. And sometimes we see that people simply don’t learn, uh, in the way that we predict they should. So we started with that conversation in a classroom setting and then ended up taking it over a few drinks and fleshing it out into a whole bunch of study designs.

Mickes: The term prediction error is in the title. Will you describe what that is?

Sinclair: One of my primary interests is in prediction error and how it influences the malleability of memory. So broadly speaking, prediction error is the idea that our brain is constantly trying to detect discrepancies between what we expect and what actually occurs. So we draw on our past experiences to try to predict the future, and then sometimes those predictions are wrong and when they are wrong, that’s a signal that we need to do something to adjust our model of the world so we can make better predictions in the future.

Prediction error is a measure of how wrong we were and uh, in a perfect world, then that measure of how wrong we were should drive the degree to which we update our memories and how much we learn from surprising information.

Mickes: This leads up to your research question.

Sinclair: So the research question, in this case, was, well, we have this theory of how people learn from prediction error. And yet we see that in the real world, there are cases where people are remarkably resistant to change.

So how can we understand that? How can we, um, look at the individual differences or sort of the contextual demands that might shape, uh, when people are receptive to prediction error, and when people reject that and choose not to update their memories or not to update their beliefs.

Mickes: Why did you choose a political angle?

Sinclair: We started off with a pilot study where we actually looked at a number of different individual differences measures. We were interested in cognition more broadly. For example, we looked at a construct called intellectual humility, which basically captures your willingness to be wrong. Um, so like, are you receptive to being challenged on what you believe?

And then we also looked at some other measures, like actively open-minded thinking, which did make it into the paper. It’s a cognitive style that’s related to openness, but then we also included right-wing authoritarianism because we thought that this ideological factor might actually reflect some underlying cognitive biases that might be captured even in a totally apolitical domain.

And right-wing authoritarianism is especially salient right now because we’re seeing a highly politicized, polarized climate where there’s a lot of misinformation being disseminated, especially when it’s about a political topic. And we also see that there seems to be more clashing of discourse when people are trying to share opinions or figure out what is correct and what is not, especially when it’s about politics.

Mickes: Why did you choose just right-wing, or did you also look at left-wing authoritarianism?

Sinclair: We did not in this one. There is a substantial body of literature out there that has shown before that radicals or extremists on both ends of the political spectrum can sometimes show very similar cognitive biases. It’s kind of like if you go far enough to one side or the other, you end up in a circle.

That’s one thing that we would like to explore with follow-up studies: whether you see a similar pattern of disrupted belief updating with left-wing authoritarians.

Mickes: Right. Academics are often criticized for being too liberal, but the decision to investigate right-wing authoritarianism was based on prior literature.

Sinclair: Yeah. That is actually a point that I’d like to address because one thing that’s really important to note is that we did not find any effects of conservatism. So if we just look at how liberal or conservative are you, or what’s your political affiliation, that’s not related to belief updating. So we’re not trying to say that everyone who’s conservative, um, has this impairment in belief updating, rather, we’re saying there’s something specific about this authoritarian attitude that seems to reflect some underlying cognitive tendencies.

Mickes: Before we get too ahead of ourselves, what were your hypotheses?

Sinclair: We had two main hypotheses.

The first was that people who score high on right-wing authoritarianism would tend to be less successful at updating their false beliefs.

And the second hypothesis was that specifically, they might show an altered pattern of response to prediction error. So on a trial-by-trial basis, if feedback is more surprising, instead of that being more effective and leading them to be more likely to update their beliefs, we thought that they might be less likely, or equally likely, I suppose, to update as they would at low levels of prediction error.

Mickes: How did you test this?

Sinclair: So to test this, we created a stimulus set of urban myths, which are a bunch of false beliefs that are really commonly held.

So a couple of examples of them are like dogs see in black and white; salting a pot of water makes it boil faster.

Mickes: You also included true statements.

Sinclair: Yes. About 40% or so of the statements were true statements. And we ended up not really looking into those very much, but they were primarily included as filler items so that people wouldn’t realize that just everything in the test was false.

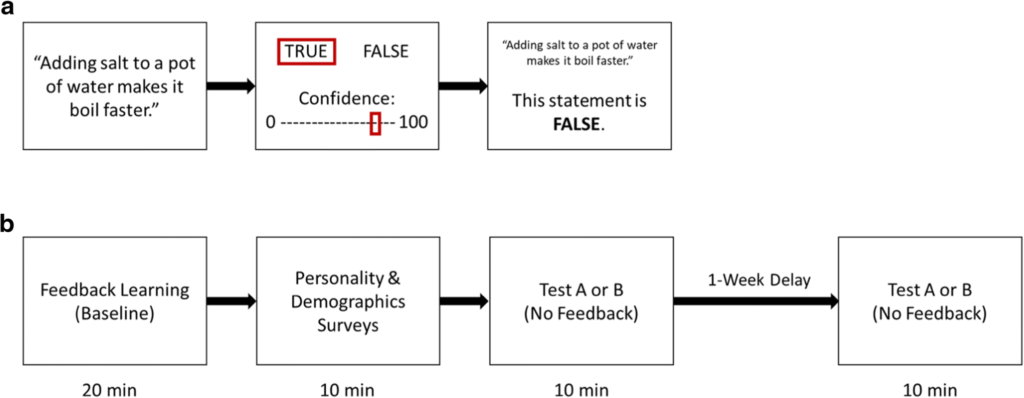

Mickes: What was their task?

Sinclair: Before they went into this test, we told them that some of the statements would be false. Um, so we did warn them that some of them were urban myths and some of them were true. So we told them that their job on each trial was to read a statement and judge whether they believed that it was true or false.

And then we also had them make a rating about how confident they were in that judgment. That confidence rating is actually really important for the analysis later on, to foreshadow, but they made that on a scale from zero to a hundred, just how strongly they believed that a given statement was true or false.

And during that baseline learning task, after they rated their confidence and made their judgment, then we gave them feedback about whether that statement was actually true or false. [Procedural schematic below.]

They did two different memory tests. One was an immediate test, which was at the end of the first session that they did. There was a short delay, about 15 minutes or so, where they did some individual differences measures in between study and test.

But at this point for the immediate test, they were tested only on half of the stimulus set. And at this point, they went back through and did the exact same procedure that they did before. So they report whether they believe a statement is true or false, they rate their confidence, and the only difference is they don’t get any feedback this time. And then after a one-week delay, we brought them back and we did the same thing again, but with the previously untested half of the stimulus set.

We were seeing whether the information that we had given them stuck. Did they alter their beliefs in response to the feedback that we gave them during learning?

Mickes: And what did you find?

Sinclair: The critical measure for us was belief updating. So we wanted to look at those questions that they answered incorrectly on day one. So most of those false beliefs. So for each subject, of course, they won’t fall for a hundred percent of them. So we took the subset of questions that each subject answered incorrectly during the baseline test. And then we wanted to see how many of those were correctly updated. So they realized now, oh, that’s actually a false statement when we test them on it either immediately or after a one week delay.

And our critical measure of prediction error was actually based on those confidence ratings that I mentioned earlier. The confidence rating that we have from the initial baseline learning task is the measure of the strength of their belief right before we contradict it.

So it’s an indirect way of getting at surprise that they must experience when we tell them that they’re wrong.
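The two measures described here can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' analysis code: the `Trial` fields, the function names, and the four example trials are all hypothetical, and the sketch simply treats baseline confidence on an initially wrong answer as the stand-in for surprise, as described in the interview.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    # All field names are hypothetical, chosen for this illustration.
    statement_is_true: bool   # ground truth for the statement
    baseline_answer: bool     # true/false judgment made before feedback
    baseline_confidence: int  # 0-100 confidence in that baseline judgment
    test_answer: bool         # judgment at the later test (no feedback given)


def initially_wrong(trials):
    """Keep only the trials answered incorrectly at baseline."""
    return [t for t in trials if t.baseline_answer != t.statement_is_true]


def belief_updating(trials):
    """Proportion of initially wrong answers that were corrected at test."""
    wrong = initially_wrong(trials)
    if not wrong:
        return None  # this participant had nothing to update
    corrected = sum(t.test_answer == t.statement_is_true for t in wrong)
    return corrected / len(wrong)


def surprise_proxy(trial):
    """Baseline confidence in a wrong answer, used as a proxy for prediction error."""
    return trial.baseline_confidence


# Made-up example data for one participant.
trials = [
    Trial(False, True, 90, False),   # confident in a myth, corrected at test
    Trial(False, True, 40, True),    # unsure about a myth, not corrected
    Trial(False, False, 75, False),  # right from the start, so excluded
    Trial(True, True, 60, True),     # true filler item answered correctly, excluded
]

print(belief_updating(trials))
print([surprise_proxy(t) for t in initially_wrong(trials)])
```

With these made-up trials, two answers were wrong at baseline and one of them was corrected, so the updating score is one half; the surprise proxy for those two trials is simply their baseline confidence.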

Mickes: I think your title says it all, but what did you find?

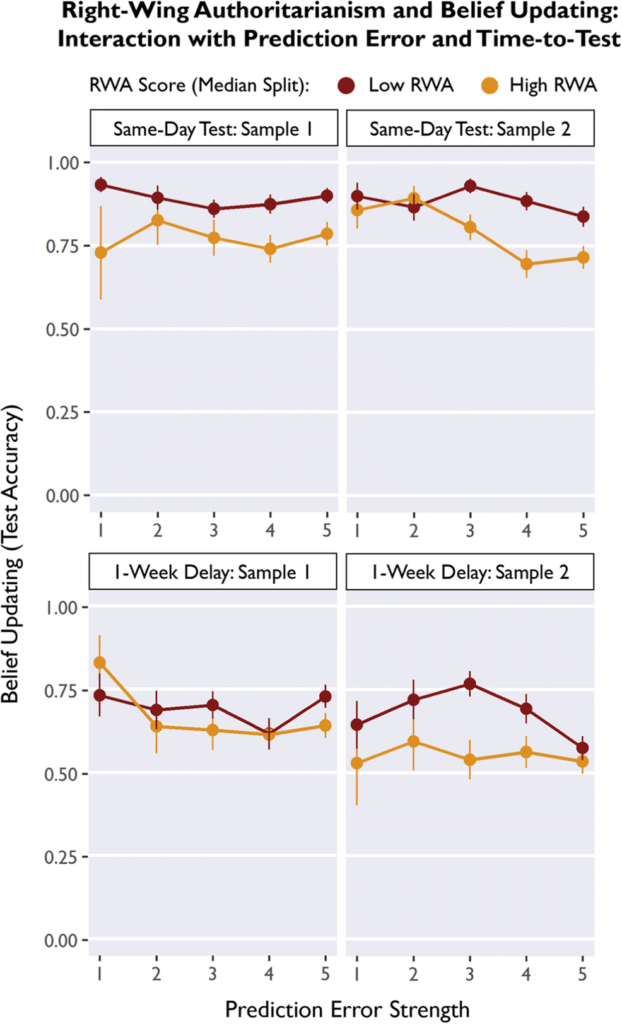

Sinclair: Our main finding was, as we predicted, that people who score high on right-wing authoritarianism tend to be less successful at updating their beliefs, both on the immediate test and after a one-week delay. And that’s a pretty strong effect. Um, and we replicated it across two samples. [Results in the figure below.]

And then we also had a secondary finding, which was consistent with our prediction about prediction error. And what we saw there was that indeed, at the immediate test, people who scored high on right-wing authoritarianism tended to be especially poor at updating their beliefs when prediction error was really strong. So in other words, on the trials that were most surprising, they were less likely to update their beliefs.

And that goes directly against what we would predict from learning theories of cognition that say that when prediction error is strong, we should be more likely to learn from that information.

Mickes: That’s really interesting. It’s not what is found typically, like you said.

Sinclair: Yeah. That’s the exact opposite, in fact, of what’s been shown in, like, hypercorrection studies in the education literature or in the whole wealth of reinforcement-learning-inspired studies.

Mickes: Is that why you did Experiment 2, to make sure that it replicated?

Sinclair: We wanted to do a replication sample, um, especially because that finding with prediction error is a three-way interaction: we show an interaction between right-wing authoritarianism, prediction error, and testing session. So basically that prediction error effect was most evident at the immediate test. At the delayed test, people who scored high on right-wing authoritarianism tended to just have really poor belief updating across the board, regardless of what the level of prediction error was.

So we wanted to really see, you know, we’ve got this really cool finding, but it hinges on this three-way interaction. Does that replicate? And indeed it did.

Mickes: So what does it all mean?

Sinclair: So I think there are two take-home messages that we should draw from this.

The first, at a broader theoretical level, is that we should think critically about the context of learning and individual differences when we’re trying to understand cognition. So when we want to take a theory that we have about the way that people learn and the way that people revise their memories, we also need to look at what’s happening in the real world and think about what conditions might limit this updating process and what conditions might influence the way that people learn. And ultimately, how can we come up with strategies to get around that? How can we support learning across a variety of contexts and meet people where they are? Like, if people are bringing a certain motivational or emotional state into learning, how does that influence the process of learning and what can we do about that?

Mickes: And are these some of the things that you’re following up on?

Sinclair: I am just starting my third year. So I have plenty more studies ahead of me, I think. I am not planning on focusing really heavily on right-wing authoritarianism moving forward, but I’ve got a whole constellation of studies that’s around this idea of how we learn from prediction error and how we can understand the context of learning and all the other factors that sometimes make that learning process go wrong.

Mickes: [inner voice: OMG! Only starting year 3?! So impressive.] Is there anything else that you’d like to mention about the paper or cover something that I didn’t ask?

Sinclair: There was one other finding that I forgot to mention before, and it’s related to the first part of that title, the part about closed-minded cognition. So another finding that we had was that a cognitive style measure called actively open-minded thinking is really strongly, positively associated with belief updating.

So we, uh, kind of characterize this as sort of an opposing effect to the right-wing authoritarianism scale. ’Cause even for some of those interaction effects, we find a really nice sort of mirror-flipped result with the actively open-minded thinking scale. So what we’re proposing is that right-wing authoritarianism might manifest in terms of, like, political ideology, but it might reflect some underlying cognitive styles that tend to lead people towards black-and-white thinking and being more closed-minded, more resistant to change in a variety of domains, even outside of politics. Whereas actively open-minded thinking is kind of the opposite of that. It’s about people who are willing to seek new experiences and new ideas and are really receptive to being challenged on what they believe and receptive to being wrong. We kind of characterize belief updating on this scale from closed- to, uh, open-minded thinking.

Um, and we want to think about moving forward, what factors might shift people along that scale? Because I don’t think these are hard immutable constructs.

Mickes: Have other people considered this dimension like you have?

Sinclair: There’s been a lot of research done previously on open-mindedness, but nobody has talked about right-wing authoritarianism in this way before, or sort of put it together with actively open-minded thinking. The one thing that comes to mind is some relevant, really interesting research that shows that, uh, the emotional state that people bring into a task can actually influence how open- or closed-minded they behave. And that applies to both political topics and also apolitical topics.

Mickes: This line of research is really interesting.

Thank you so much for talking to me about your paper.

Sinclair: Thank you so much.

Concluding statement

Myers: Thank you for listening to All Things Cognition, a Psychonomic Society podcast.

If you would like to share your feedback on this episode, we hope you’ll get in touch! You can find us online at www.psychonomic.org, or on Twitter, Facebook, and YouTube.

If you liked this episode, please consider subscribing to the podcast and leaving us a review. Reviews help us grow our audience and reach more people with the latest scientific research.

—

True or false?

Try it again:

- Diamonds are formed when coal undergoes high pressure.

- Coffee reduces the influence of alcohol.

- Sunflowers turn to track the sun across the sky.

Did you update your responses based on the feedback that these commonly held beliefs are myths?

Featured Psychonomic Society article

Sinclair, A. H., Stanley, M. L. & Seli, P. (2020). Closed-minded cognition: Right-wing authoritarianism is negatively related to belief updating following prediction error. Psychonomic Bulletin & Review. https://doi.org/10.3758/s13423-020-01767-y