Online interactions and robots are increasingly common. We spend more time on video calls and we interact more and more with virtual assistants, chatbots, and avatars. We have created new verbs: it almost feels more natural to say that we are “zooming” than “having a video call”. And certain names, like “Alexa”, may bring a virtual assistant to mind more readily than the face of a human, much to the annoyance of human Alexas, a growing number of whom are changing their names. I guess it won’t be long until we consider the presence of both robot and human participants in the same video call as commonplace.

An interesting phenomenon that has drawn the attention of researchers is that observing another person respond to a stimulus can result in an observationally acquired stimulus–response episode. These episodes can later be retrieved from memory, a process that is affected by factors such as the social relevance of the model for the observer: for example, whether we observe a stimulus–response pair performed by a stranger or by someone closer to us, such as a romantic partner. However, evidence for these episodes has been limited to dyadic face-to-face interactions.

Interested in understanding better how the social relevance of partners influences observationally acquired stimulus-response episodes, Carina G. Giesen and Klaus Rothermund (pictured below) designed an experiment in which participants interacted online with either humans or robots. Results are reported in their recent article published in the Psychonomic Society journal Psychonomic Bulletin & Review.

Online interactions

A key objective of the study was to control properly for confounding factors that were present in previous experiments, such as subtle changes in the positions of visual cues in the participants’ visual fields. The problem with these factors is that, if not controlled adequately, they can produce “reliable retrieval effects for observed stimulus-response combinations even in situations of low social relevance.”

To provide a context in which the social relevance of the agent showing the stimulus-response associations could be controlled, while keeping the visual layout and presentation of stimuli consistent throughout the experiment, the authors created a task that could be completed online. In this task, half of the participants were led to believe that they were interacting with another person through an online platform, whereas the other half were informed that they were interacting with a computerized partner. In reality, everyone interacted with a computerized partner, which allowed the characteristics of the interaction and the positions of visual cues to be kept constant for all participants.

The authors relied on a color classification task in which words were presented to participants and their partners in alternating turns. On each turn, the responder had to press one key if the word was written in red and another key if it was written in green.

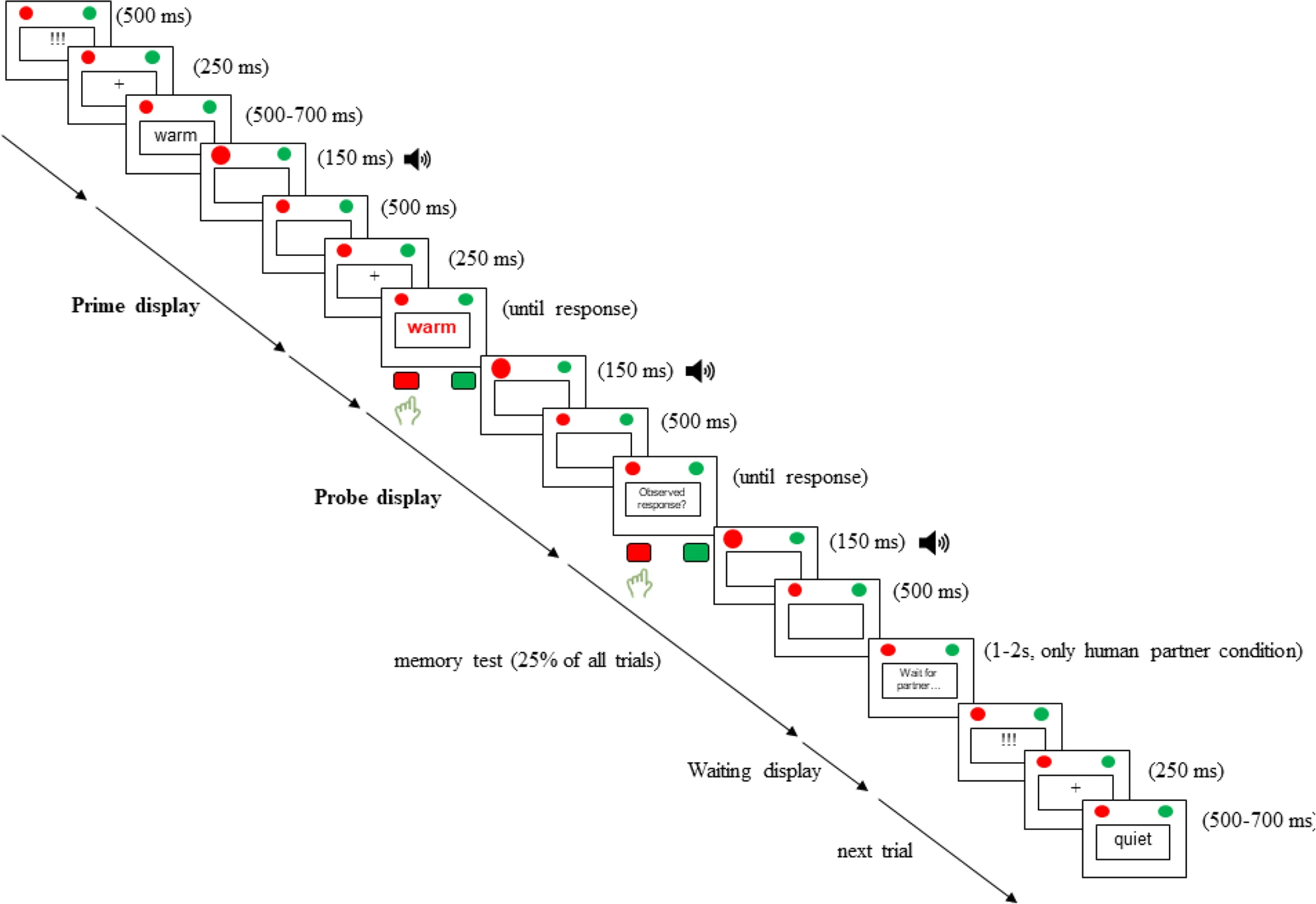

The figure below shows a schematic description of the events in the experiment. In the figure, the “prime display” events refer to the partner’s turn. During this turn, the word presented to the partner was visible to the participant, but its color was not, so participants had to observe the partner’s response to know in which color the word was displayed. “Memory tests” were presented at random after some trials to ensure that participants paid attention to the partner’s responses throughout the experiment. The “probe display” events refer to the participant’s turn, during which the color of the word was visible and the participant simply had to press the appropriate key to indicate whether it was red or green. Only for participants who were led to believe that they were interacting with a human, a “waiting display” appeared occasionally to indicate that the partner had not responded yet, which was meant to strengthen the impression of interacting with another human.

Easy task, complex implications

The task itself was easy. In fact, participants correctly identified the color in which the words were written with high accuracy (>98% correct responses). So, how can observationally acquired stimulus-response episodes be measured in this context? Through reaction times!

Specifically, the analyses tested whether the stimulus-response pairs observed during the partner’s turn influenced the participant’s reaction time and whether such variations depended on the type of partner (human or computer).

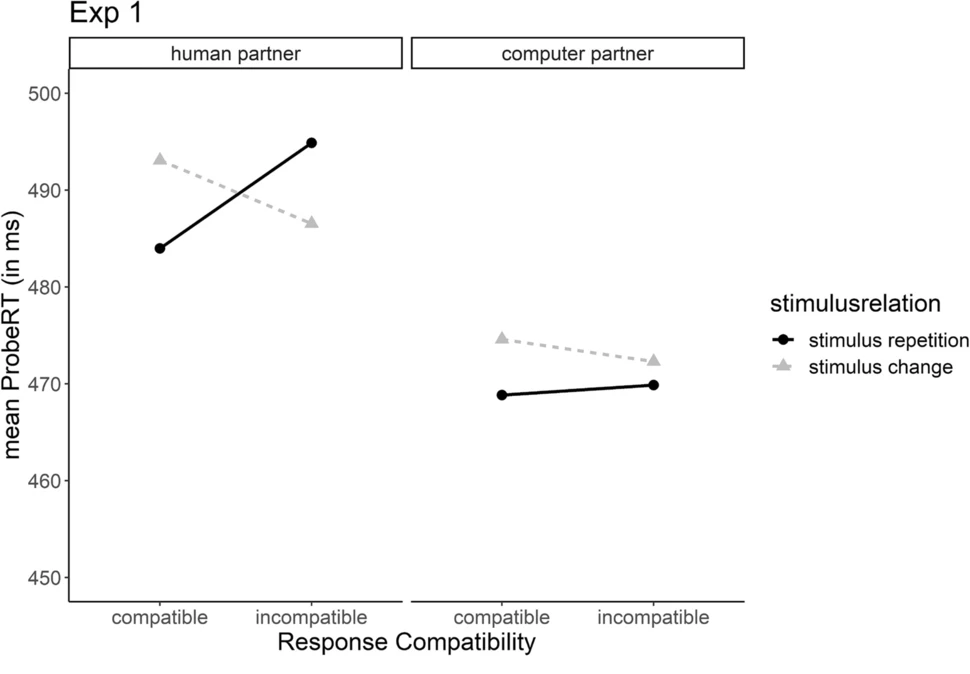

To understand the analysis, note that some trials had a “stimulus repetition,” where the word presented during the partner’s turn and the subsequent participant’s turn was the same, but in others, there was a “stimulus change,” where the words presented during the partner’s and participant’s turn were different. Moreover, in some trials, the partner’s response – which indicated whether the partner observed a red or green word – was “compatible” with the color in which the word presented to the participant was written (for example, if the partner’s response was “green,” the word presented to the participant was written in green), whereas in others it was “incompatible” (for example, if the partner’s response was “green,” the word presented to the participant was written in red). In this experimental context, if observationally acquired stimulus-response episodes are present, participants’ performance should improve (i.e. shorter reaction times) or worsen (i.e. longer reaction times) depending on whether the observed response of the partner was compatible or incompatible with the correct response in the participant’s turn.
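The two trial classifications described above can be sketched in a few lines of Python. This is only an illustration of the design logic, not the authors’ code: the `Trial` class, its field names, and the `classify` function are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    # All field names are hypothetical; they are not taken from the article.
    prime_word: str        # word shown during the partner's turn
    partner_response: str  # "red" or "green", as observed by the participant
    probe_word: str        # word shown during the participant's turn
    probe_color: str       # "red" or "green", the color the participant sees


def classify(trial: Trial) -> tuple[str, str]:
    """Return (stimulus relation, response compatibility) for one trial."""
    # Same word on both turns means a stimulus repetition, otherwise a change.
    stimulus = "repetition" if trial.prime_word == trial.probe_word else "change"
    # The observed response is compatible if it matches the probe color.
    matches = trial.partner_response == trial.probe_color
    response = "compatible" if matches else "incompatible"
    return stimulus, response
```

Crossing the two factors gives four trial types, and the prediction concerns how reaction times differ across them.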

This is what the researchers found, but only when participants believed their partner to be human. As the figure below shows, in trials with stimulus repetition, performance was better when the response of the partner and the word color presented to the participant were compatible (as compared to incompatible), a difference that is even more apparent when compared to the reaction times observed in trials with stimulus change. This interaction effect constitutes the statistical signature of the presence of observationally acquired stimulus-response episodes. In contrast, no such pattern was observed for participants who interacted with computerized partners.
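To make the idea of an interaction effect concrete, here is a minimal Python sketch that computes it as a difference of differences between mean reaction times. It is an illustration only: the function name `retrieval_effect` and the reaction times in `example_rts` are made up for this post and are not data or code from the study, whose actual statistical analysis is reported in the article.

```python
from statistics import mean


def retrieval_effect(rts):
    """Difference of differences in mean reaction time (ms).

    `rts` maps (stimulus relation, response compatibility) to a list of
    reaction times. A positive value means the compatibility benefit is
    larger in stimulus-repetition trials than in stimulus-change trials.
    """
    m = {condition: mean(values) for condition, values in rts.items()}
    effect_repetition = m[("repetition", "incompatible")] - m[("repetition", "compatible")]
    effect_change = m[("change", "incompatible")] - m[("change", "compatible")]
    return effect_repetition - effect_change


# Hypothetical reaction times in milliseconds, invented for illustration only.
example_rts = {
    ("repetition", "compatible"): [500, 510],
    ("repetition", "incompatible"): [530, 540],
    ("change", "compatible"): [515, 525],
    ("change", "incompatible"): [520, 530],
}

interaction = retrieval_effect(example_rts)
```

With these invented numbers, the compatibility benefit is 30 ms in repetition trials and 5 ms in change trials, so the interaction is 25 ms. A value near zero would correspond to the pattern reported for the computer-partner group.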

Let’s go, team human!

Because these effects were found only when participants believed they were interacting with another human, the authors conclude that these observationally acquired stimulus-response episodes emerged because of the social significance of the human partner, a factor that was absent for computer partners.

The findings go beyond the original purpose of the experimental setup, which was to avoid confounds from previous studies (such as differences in the visual field configuration when interacting with human vs. computer partners). They also show that social modulations of automatic imitation behaviors are possible even in online environments, which is, in and of itself, a novel finding.

Sorry, WALL-E, we humans still have a (social) edge over robots, much to the delight of the human Alexas out there.

Featured Psychonomic Society article

Giesen, C. G., & Rothermund, K. (2022). Reluctance against the machine: Retrieval of observational stimulus–response episodes in online settings emerges when interacting with a human, but not with a computer partner. Psychonomic Bulletin & Review, 29, 855–865. https://doi.org/10.3758/s13423-022-02058-4