(This post was co-authored by Thomas L. Griffiths).

Since Wilhelm Wundt established the first university psychology laboratory over 100 years ago, relatively little has changed in how we gather data in psychological science. Technology and statistical methods have evolved, but experiments are still run primarily by individual brick-and-mortar laboratories, test specific hypotheses, and rely on relatively small convenience samples. This approach has served us well in many instances, forming the bedrock of what it means to run a psychology lab.

However, cracks are beginning to form in this foundation. The special issue of Behavior Research Methods edited by Gary Lupyan and Robert Goldstone highlights groundbreaking research that expands the type and amount of data used to inform the psychological sciences. Collectively, the special issue reports data from approximately 1.7 million individuals, not including textual analyses of books, websites, and so on. For one issue of a journal to include this many people is an astounding change from psychological research over the last century.

Perhaps the most notable trend across many of the papers in the special issue is a turn from experimental methods towards massive amounts of observational data obtained under more naturalistic conditions. Many of the papers argue, and then demonstrate, that large observational datasets have higher ecological validity while also increasing statistical power.

At the same time, it is hard not to detect a tinge of tension in this special issue being published by the Psychonomic Society. The areas of psychology most closely identified with Psychonomics have made tremendous progress using carefully designed experiments to adjudicate between alternative theories. If observational, “big data” psychology is the future, will we be turning our backs on the experimental approach?

We suggest that the answer should be “no!” and that the next wave of innovation within the psychological sciences should not only be “big data” but “big experiments.”

Small experiments, wandering paths, and incompatible effects

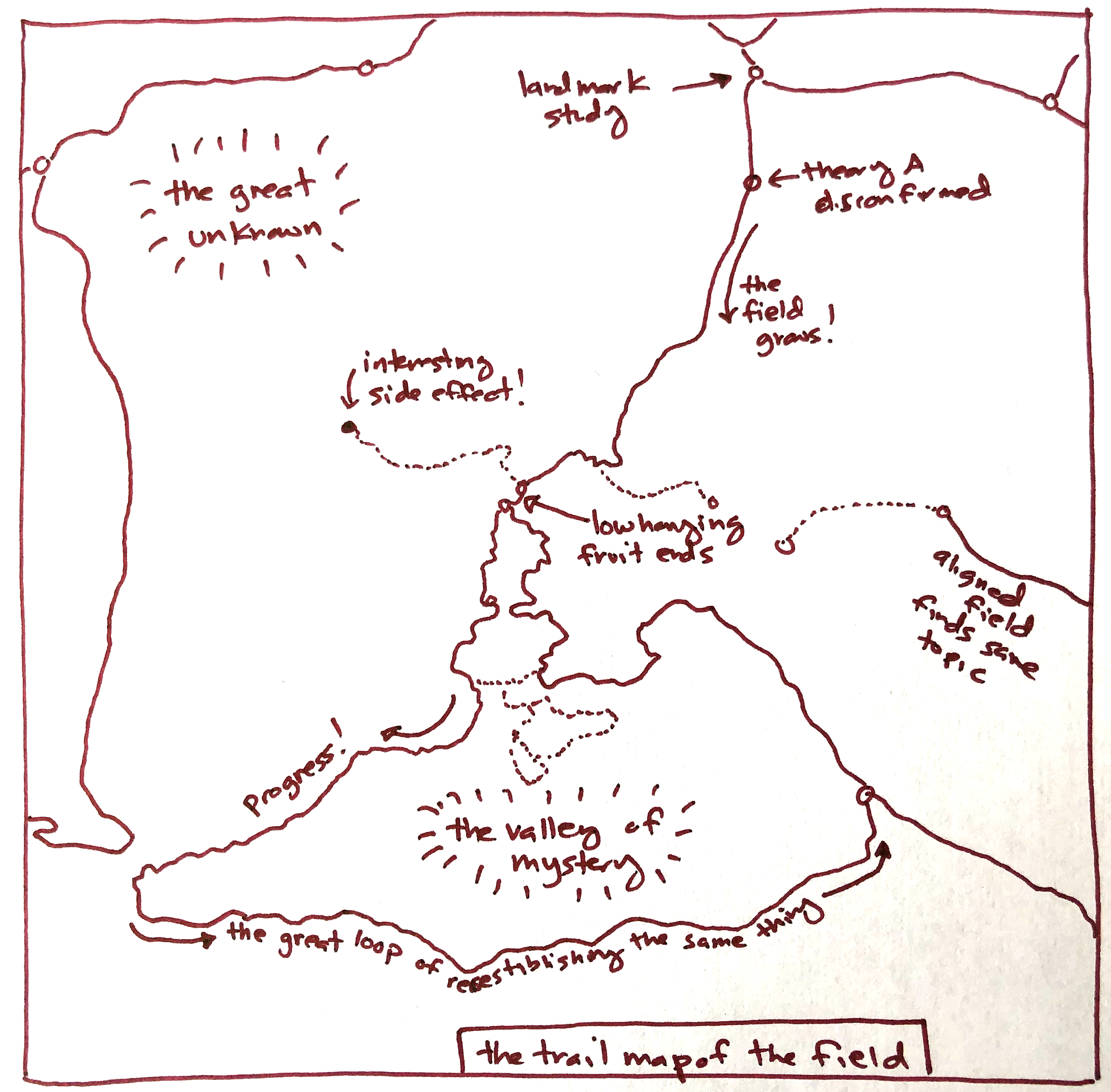

A scientific literature often unfolds in the style of a wandering path or a network of trails. At the trailhead is the first groundbreaking study, which opens up a new area of research. Subsequent studies then flow as a kind of network, exploring new conditions, testing alternative hypotheses, and building upon the “proto-paths” explored by others. If we imagine that a 2-D map captures, in simplified form, the space of all possible experiments we could perform, such trail networks of scientific inquiry tend to explore very little of the overall space.

The constraints of this approach to science are largely a vestige of the model of laboratory research established by Wundt. Individual labs only have the resources to run a few experiments per year, on a relatively small set of participants. Thus, progress is made by the chain of studies conducted over decades by labs which build upon the work of past publications.

This tends to limit the rate of progress in several ways:

First, it is likely that the theories do not generalize beyond the small range of conditions tested (see the “valley of mystery” and “the great unknown” on the trail map above).

Second, different empirical phenomena tend to be explored in different, non-intersecting paths. As a result, we often have little understanding of how these effects interact with one another. For example, having established in isolated studies that spaced rather than massed practice improves long-term retention, and separately that the testing effect improves memory, what happens if these treatments are combined?

Third, this approach is incredibly resource-intensive. Each experiment performed in a separate lab often requires a graduate student and undergraduate research assistants, and duplicates the setup and configuration of a lab.

Big experiments: A new framework for psychological science

An alternative approach borrows inspiration from large-scale scientific endeavors in physics, biology, and astronomy. In these fields, important scientific questions become part of a larger community investment. From the Large Hadron Collider (LHC) to deep space telescopes, many areas of science invest significant science funding (in the billions!) in technology to collect data sets that are, by broad consensus, likely to move progress forward in decisive ways.

What would this look like in psychology? In our view, the most natural approach is a fusion of the “big data” concept explored in the special issue and experimental approaches to psychology. One might call this approach simply “big experiments.” Under this idea, the community would develop consensus about the important factors to test within a large experiment. Such details might be worked out within specialized subfields via workshops, conferences, or through writing white papers with community feedback.

Next, a large investment in massive online behavioral experiments would fund a gigantic experiment testing multiple factors and stimulus conditions at once. The scale of such experiments would involve hundreds of thousands of participants tested in hundreds or thousands of conditions. Such large datasets would then become the benchmarks for subsequent theorizing and would enable future generations of researchers to develop and test comprehensive, generalizable theories.
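To make the scale concrete, here is a minimal sketch of how a fully crossed (factorial) design grows as factors are added. The factors, levels, and the per-cell sample size are hypothetical choices for a memory experiment, picked only to echo the spacing and testing effects mentioned earlier; they are not taken from any particular study.

```python
from itertools import product

# Hypothetical factors and levels, for illustration only.
factors = {
    "practice_schedule": ["massed", "spaced"],
    "practice_type": ["restudy", "test"],
    "retention_interval_days": [1, 7, 30],
    "list_length": [10, 20, 40],
}

# Cross every level of every factor to enumerate the full design.
conditions = [
    dict(zip(factors, levels)) for levels in product(*factors.values())
]

n_conditions = len(conditions)  # 2 * 2 * 3 * 3 cells
participants_per_cell = 500     # assumed target sample size per condition
total_participants = n_conditions * participants_per_cell

print(f"{n_conditions} conditions, {total_participants} participants")
```

Even this small example, with four factors, requires tens of cells and many thousands of participants. Adding a few more factors quickly pushes the design into the range of hundreds or thousands of conditions described above.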

As one example of this approach, consider the Moral Machine project presented by Edmond Awad and colleagues in 2018, an online game that presents participants with moral dilemmas centered around autonomous cars. With roughly forty million decisions from users in over two hundred countries, the Moral Machine experiment is the largest data set ever collected on human moral judgment.

In addition to the large data set size, the experiment operated over a vast problem space: twenty unique agent types (e.g., man, girl, dog) alongside contextual information (e.g., crossing signals) enabled researchers to measure the outcomes of nine manipulations: action versus inaction, passengers versus pedestrians, males versus females, fat versus fit, low status versus high status, lawful versus unlawful, elderly versus young, more lives saved versus fewer, and humans versus pets. Instead of a network of incrementally built trails, the Moral Machine study maps out the entire space of this domain.

Crowd-sourced research and building the “behavioral telescope” for psychology

As most practitioners in the field now recognize, crowdsourcing recruitment services such as Amazon Mechanical Turk, Figure Eight (formerly CrowdFlower), and Prolific have been transformative in the behavioral and social sciences, enabling data collection at scales unattainable in brick-and-mortar labs. For example, a recent paper estimated that between 11% and 30% of articles published in high-impact journals devoted to the study of human cognition employ samples from Amazon Mechanical Turk.

However, most of this research simply adopts the same approach of small-scale lab science. For instance, many researchers use online data collection to speed the rate of data acquisition, lower costs, and increase the sample size per cell of traditional experiments.

Indeed, most of the experimental crowdsourced studies in the special issue included fewer than 1,000 participants and only a small number of experimental conditions. This contrasts with large, combinatorial experimental designs such as the Moral Machine project.

However, “big experiments” will likely require new technological tools to better harness the evolving ways in which researchers can use the internet to facilitate subject recruitment. In effect, we have to invent the right type of “behavioral telescope” in order to make the types of observations we need as a field.

One excellent example of this is the article in the special issue on Pushkin by Joshua Hartshorne and colleagues. Pushkin is a new open-source library for conducting massive studies online. It is unique in that it specifically provides the type of technology stack needed for coordinating larger-scale data collection. Pushkin moves online research forward in a number of significant ways: by introducing the idea of auto-scaling (automatically increasing the number of computing instances assigned to the data collection website), by allowing adaptive designs that explore the combinatoric space of experiments in “smart” ways, and by providing a compelling gateway for subject recruitment (leveraging mailing lists, crowdworking websites, and gamified elements).

Psychological theories in the age of big experiments

The “big science” revolution we envision for psychological science has consequences not only for the types of data we address as a field, but also for the types of theories we develop. For example, as the size of a data set grows, it tends to support more complex models (e.g., with a small data set, a simple psychological model will often suffice to enable broad prediction, but with a large data set a more complex and heavily parameterized model is often better supported). The problem is that complex models (e.g., deep neural networks) often lack explanatory principles that account for their higher performance. Thus, new approaches will be needed to balance the desire for comprehensive, predictive, and generalizable theories with the need for useful explanations of behavior.

As one example of this, Agrawal, Peterson, and Griffiths explore an iterative theory-building approach to the Moral Machine dataset. First, they use complex, highly flexible models to determine the maximum amount of “explainable” structure in a data set. They then incrementally increase the complexity of simpler, explanatory models to account for newly identified patterns in the larger experimental dataset. By iterating in this fashion, one can incrementally close the gap between the explainable and predictive models.
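The logic of this iteration can be illustrated with a toy sketch. This is not the authors' method or data: the dataset below is synthetic, the "flexible" benchmark is a saturated model with one parameter per design cell (a stand-in for something like a neural network), and the explanatory model is an ordinary least-squares regression. All names and numbers are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic "big experiment": two binary factors whose effects interact.
# All effect sizes here are made up for illustration.
n = 4000
a = rng.integers(0, 2, n)
b = rng.integers(0, 2, n)
y = 1.0 * a + 0.5 * b + 1.5 * a * b + rng.normal(0.0, 1.0, n)

half = n // 2
train, test = slice(0, half), slice(half, n)

def heldout_mse(X):
    """Fit least squares on the training half, score on the held-out half."""
    coef, *_ = np.linalg.lstsq(X[train], y[train], rcond=None)
    return float(np.mean((X[test] @ coef - y[test]) ** 2))

ones = np.ones(n)

# Flexible benchmark: one free parameter per design cell (saturated model).
X_flexible = np.eye(4)[2 * a + b]
# Simple explanatory model: additive main effects only.
X_simple = np.column_stack([ones, a, b])
# Revised explanatory model: add the interaction the benchmark pointed to.
X_revised = np.column_stack([ones, a, b, a * b])

mse_flexible = heldout_mse(X_flexible)
mse_simple = heldout_mse(X_simple)
mse_revised = heldout_mse(X_revised)

print(f"gap before revision: {mse_simple - mse_flexible:.3f}")
print(f"gap after revision:  {mse_revised - mse_flexible:.3f}")
```

In this toy case a single added term closes the gap entirely, because the revised model can represent everything the saturated benchmark can. With real behavioral data the benchmark would be far more flexible, and each revision would typically close only part of the gap.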

In sum, it is an exciting time for psychological research. Nearly a decade ago, researchers interested in accessing large datasets on human behavior might have conceded that proprietary research within large technology companies was the only place to work. However, it is increasingly clear that the academic research community is well positioned to collect and analyze such powerful datasets ourselves. The only obstacle is developing the research infrastructure to make the kinds of robust measurements that we need. With projects like Pushkin “push”ing this frontier, this endeavor seems increasingly within our reach.