Dawn Weatherford recently published a paper about detecting fake IDs with co-authors William Blake Erickson, Jasmine Thomas, Mary Walker, and Barret Schein. The paper is called “You shall not pass: how facial variability and feedback affect the detection of low prevalence fake IDs” and appears in the Psychonomic Society journal Cognitive Research: Principles and Implications.

Despite a distance of about 4,891 miles (7,871 km) and a time difference of 6 hours between us, I had the pleasure of interviewing Dawn about this article. The interview took place in Dawn’s office in San Antonio, Texas, USA and in my office in Bristol, UK.

Wherever you are in the world and whatever time it is, I hope that you enjoy the interview.

Transcription

Mickes: I’m Laura Mickes and I’m interviewing Dawn Weatherford on behalf of the Psychonomic Society. Dawn recently published a paper in the Psychonomic Society journal Cognitive Research: Principles and Implications. Hi Dawn, thanks so much for agreeing to be interviewed about your paper.

Weatherford: Thanks!

Mickes: The title of the paper is “You shall not pass: how facial variability and feedback affect the detection of low prevalence fake IDs.”

So I have an example, and I wonder how related it is to the research you’ve published. I recently sent in the paperwork for my new passport. My last passport, along with its photo, was nearly 10 years old and about to expire. In the last couple of years, border agents have been saying that I don’t look like my photo. It’s not a fake; it’s just that I’m 10 years older.

Weatherford: In a sense, it’s impressive to me that people are noticing it. And the way we talk about fake IDs in the paper is not necessarily an identification card that you’re going to get from some sort of dubious source.

Instead, it’s a legitimate document that perhaps just doesn’t depict you. Under those circumstances, there are lots of opportunities for mistakes by someone whose job is to check, whether at the airport, at a liquor store, or at the door of a pub. Those are the types of situations where we are commonly asked to present our IDs. It’s something we do without much thought. And yet people are being paid to do these jobs and supposedly have some expertise in detecting whether or not the person in front of them is the one depicted on the card. So again, we’re ruling out the possibility that the ID itself is inauthentic; it is just being possessed by an impostor. That’s kind of what we’re going for.

And yeah, I think one of the things that makes this task challenging in a way people don’t think about is the exact example you just used. It’s very common for a card to be good for five, seven, or ten years, and we change so drastically over that time. I’m glad to hear that there are individuals out there who are noticing that difference, but had you told me they didn’t notice, I would have been less surprised, based on the work we’re doing and on the mechanisms people engage when they decide whether or not you are who you say you are when you present an ID.

Mickes: You think that they would not notice or just not say anything?

Weatherford: Perhaps. The crux of the paper (and this is an early paper that uses a student sample, something I hope we’ll get to talk about in more depth later in the interview) is essentially this: you have to deal with all of the visual variability associated with one person across 10 years of their lifetime, next to someone else who perhaps looks strikingly similar. If you’re seeing lots of passports, lots of IDs every single day, you are having to deal with all of that. You’re not necessarily in a position to make a decision about a picture that was taken on the very same day. That 10-year difference shifts what you expect to see in terms of facial variability, and so it could open the door to lots of mistakes, like the ones we investigated in the paper.

Mickes: Your research question had to do with low prevalence and feedback and fakes.

Weatherford: What we were interested in is the degree to which these general and relatively robust low prevalence effects that are found in lots of different types of visual search tasks would translate into a facial recognition paradigm or in this instance an identity matching paradigm.

So in the real world, screeners are asked to look at IDs day in, day out, for long periods of time, and they see fakes, or people who are misrepresenting their identity, really, really infrequently. That low prevalence effect shows up whether it’s faces on an ID card, a radiologist looking for tumors, or someone at the airport looking for a weapon in your bag: the less often people see the targets of their visual search, the more often they miss them, even when those targets are present. So we were interested in, number one, whether we could test that experimentally and, number two, some possible ways to mitigate it. If the effect does emerge, such that when you expect something to be relatively rare you’re going to miss it, is there a way to shift you out of that kind of decision-making process and keep your accuracy relatively high over the course of many trials?

Mickes: Is that what you did with feedback?

Weatherford: That was an attempt at a pretty easy and intuitive approach. There have been some mixed results in the literature on feedback, especially when it comes to the differences between ID matching tasks and basic object searches. If you give people feedback about their performance, it could possibly improve it; they might say, well, I made the wrong choice. In this paradigm, participants saw a static image of the target ID picture beside a larger picture, about the size a face would appear at that viewing distance. If someone said yes, basically accepting two different people as the same person, we gave them feedback in red: “You just accepted a fake ID.” We told them not to do this.

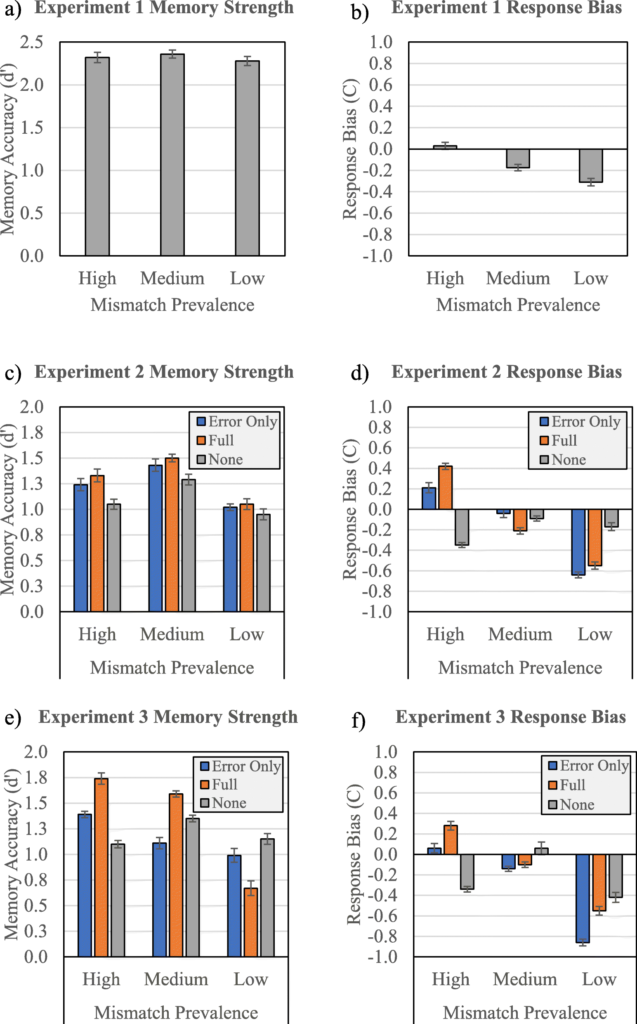

And again, the trials would continue. But what we actually found is that integrating feedback only made people better at whatever was more prevalent. To walk that back, we varied the prevalence of these fake IDs, which we just called mismatches in the paper: how often, across 100 trials, we presented mismatched identities. That was either 10%, 50%, or 90% of the time. In the 90% condition, what actually becomes less prevalent is the real IDs. So we were asking to what extent the effect has to do with the type of visual cues you get from matches versus mismatches, and to what extent it is just a basic cognitive phenomenon. Across three experiments, what we found is that feedback just makes people shift their priorities toward being better at whichever trial type is more dominant, or more prevalent.

When fake IDs are rare, people get worse at catching them when you give them feedback instead of better, which is the opposite of the effect you would want in the real world.

Mickes: Wow. Why is that?

Weatherford: We based a lot of this on feedback intervention theory, where essentially you have a series of shifting priorities: you say, well, I can either prioritize avoiding errors or prioritize getting the right answers. But it didn’t really matter whether we gave people corrective feedback only when they made errors or told them on every single trial which decision they made and whether it was correct. Overall, feedback didn’t help unless prevalence was medium. Under medium prevalence, you can use a kind of balancing hierarchy to integrate feedback effectively and improve performance. But when mismatches had very low or very high prevalence, feedback just ended up making you worse than you were otherwise.

Mickes: That’s fascinating. There’s something else I really love about the work you did here, and I should say that you didn’t do it alone. You had coauthors.

Weatherford: I did. I’m very happy to say that I had a research colleague as my second author, and the other three authors were undergraduate students working with me. They were able to be on the paper, help out, and learn about that process.

Mickes: One of the really cool things is the way you looked at facial variability, in how you collected the stimuli. Did that take forever?

Weatherford: The very first study in the three-experiment sequence uses a pretty prominent identity matching set called the Glasgow Face Matching Test. It has a lot going for it, it is certainly well-published in the literature, and I think its authors did a great job curating the photos to begin with.

One thing that makes it relatively unrealistic, when you’re trying to get a sense of how things operate in the real world, is its lack of facial variability on two dimensions. First, the pictures of each person were taken on the same day, albeit with different cameras, and like I said, that is unlikely to happen in a real-world identity matching situation. Second, at least for an American audience, the set under-represents the diversity of faces: it is largely younger, light-skinned people. If you’re in a setting where those faces are common, the set might represent and generalize to the real world quite well.

However, in San Antonio, Texas, right near the border between the United States and Mexico, the set did not look anything like our participants. So we were challenged to find an alternative. And I will say that back when I started the Selfies for Science initiative, I thought, yeah, there’s got to be something out there that captures within-person variability.

I wanted pictures of the same person taken at least a couple of months, if not years, apart, as well as controlled images versus more ambient images, or what we can call “selfies”: all of the things that represent image variability in that way, but also not just individuals who are young and light-skinned. When I embarked on this journey to find a more appropriate image set, I didn’t find one. So yes, I made it. I’m super excited to say that we have 125 identities per gender. I’m working with another collaborator who does multidimensional scaling, and we are quantifying the distances within and between individuals so that we can make this image set available to more than just me. I’d love for more people to use it.

Mickes: This sounds good. I’m so glad you’re gonna make it available to other researchers.

Weatherford: Yeah. Collecting the images is super tedious, especially given that you have to collect a student ID and two ambient images, or selfies, and then we take a range of pictures of each person: videos of their faces moving back and forth, videos of their whole bodies, five different emotions, profile, three-quarter views. We run the gamut, and we’re trying to make it a great resource for a variety of different work in facial recognition.

Mickes: That’s going to be really valuable. Did I hear you call it selfies for science?

Weatherford: I did. That’s what we called it at the very beginning, with my very first grant to support the work and get people to come in and let us take their images. We thought, well, what would be catchy? Selfies for science.

To address your question about how facial variability affected the low prevalence effect: in our first experiment, when we used that established database, we found weaker evidence of the low prevalence effect. So it gave us hope that when you’re using images of a person taken on the same day, which look incredibly similar to each other, you wouldn’t see these issues when a fake ID is rare. However, in our second and third experiments, when we increased the facial variability and made the images more representative of what the real world actually looks like, not only did we see the low prevalence effect, but we also saw generally reduced performance overall. It’s a harder task.

Mickes: What’s next or are you holding your cards close to the vest?

Weatherford: We’ve already collected the data for a second set of experiments. We were super excited that the first three experiments established the utility of the new image database. But the ultimate question becomes: is this really representative? Do professionals really engage in the same types of decision-making processes as our students did?

And you know, there’s always that threat to external validity. So we are very, very close to submitting another three-experiment sequence with professionals. We collected data from the general public and from individuals who work in places where you might expect a higher frequency of fake IDs, such as bar door security. We then compared them to individuals who work in access security, people who check IDs at places of business, border crossings, airports, those types of establishments. We basically gave them similar types of tasks.

Our express goal was not just to reveal some sort of issue, but to say, as psychological scientists, here’s an issue we need to be aware of; what are the ways we can mitigate it or explain it? We wanted to provide some sort of utility instead of just casting doubt on the process as a whole. So in this sequence, we compared these groups’ performance and whether they showed evidence of the low prevalence effect. We also gave them professional surveys to see if the quality and quantity of their professional experience predicted whether they would be subject to these errors.

Mickes: Okay. Are you going to tell us the answer or do we have to wait for the paper?

Weatherford: You’re going to have to wait for the paper.

Mickes: <exaggerated sigh> okay, you’re killing me.

Weatherford: <laughter>

Mickes: I’ll wait. In the meantime, everyone should read this paper, which is now available in CR:PI, Cognitive Research: Principles and Implications. Thank you so much, Dawn.

Weatherford: Thank you, Laura.

Psychonomics article focused on in this post:

Weatherford, D. R., Erickson, W. B., Thomas, J., et al. (2020). You shall not pass: How facial variability and feedback affect the detection of low-prevalence fake IDs. Cognitive Research: Principles and Implications, 5, 3. https://doi.org/10.1186/s41235-019-0204-1