About 15 years ago, I tried computer speech dictation for the first time. I had a paper to write, and I thought speaking to the computer would be much more efficient than typing. It was a complete disaster; what appeared in my word processor bore no resemblance to what I was saying. Luckily I was not on stage like the unfortunate Microsoft presenter Shanen Boettcher:

[youtube https://www.youtube.com/watch?v=-0kDcUEDfmY]

My first experience with dictation gave me a keen appreciation for the difficulty of writing computer algorithms that understand spoken language.

This difficulty stands in stark contrast to the ease with which we understand one another’s speech. The Microsoft video is funny because we can understand exactly what is being said, but the computer utterly fails. How do we understand speech so well? Upon brief reflection, one might think that our brains simply perceive each successive incoming sound and then string those sounds together to form words. In recent decades, however, research has shown that this naive view is incorrect. The remarkable McGurk effect, for instance, shows that visual information about how a speech sound is produced can have a dramatic effect on how that sound is perceived.

As Liberman and Mattingly described it in 1985, the motor theory of speech suggests that human speech perception is not, fundamentally, driven by perceptions of speech sounds, per se; rather, we perceive speech gestures. To hear someone say “bat” is not to know the “b”, “a”, and “t” sounds put together; it is to understand the mouth movements that are required to say “bat”. The motor theory of speech is an “embodied” theory: how the brain perceives speech cannot be separated from how the brain and body together produce speech.

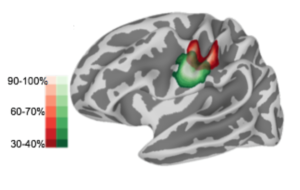

It is known that body movements are controlled by the motor cortex. Moreover, the motor cortex has an interesting structure: it is somatotopic, meaning that specific regions of the motor cortex map to specific body parts. Neurons in one region of motor cortex control the movement of the right index finger; in another, the left toe. The lips and tongue, too, have their own piece of motor cortex devoted to them. When a part of the body moves, this can be detected as neural activity in the specific part of the motor cortex devoted to that body part. The figure above, adapted from a new article by Arsenault and Buchsbaum, shows approximate regions of cortex devoted to the lip (red) and tongue (green).

This architecture of the motor cortex suggests a critical test of the motor theory of speech. The “lip cortex” is selectively active when producing speech sounds involving the lips, such as “pa”, while the “tongue cortex” is selectively active when producing sounds involving the tongue, such as “ta”. If the motor theory of speech is correct, one would predict that these same regions would be selectively active when listening to those sounds.

A decade ago, Pulvermüller and colleagues found important evidence for the motor theory of speech using functional magnetic resonance imaging (fMRI). When neurons in a particular brain region are active, more fresh oxygen-rich blood flows into that region, replacing oxygen-depleted blood. fMRI detects these changes in blood flow, allowing a measurement of how “active” a brain region is during a particular activity. Pulvermüller and colleagues presented apparent fMRI evidence that indeed, the lip cortex is more active when listening to “pa” than when listening to “ta”; furthermore, tongue cortex is more active when listening to “ta” than to “pa”. The result has been cited by hundreds of other scientific articles.

A recent article in the Psychonomic Bulletin & Review followed up on this fascinating result. Researchers Arsenault and Buchsbaum extended the previous experiment by Pulvermüller and colleagues in two ways. First, they used a wider variety of speech sounds, adding to those used in the earlier study: for example, “ba”, “va”, and “fa” as lip sounds, and “da”, “za” and “sa” as tongue sounds. The second and more interesting extension is the use of a powerful statistical technique called multivariate pattern analysis.

Arsenault and Buchsbaum asked participants to perform two tasks in separate fMRI scanning sessions. In the first task, participants listened passively to the various speech sounds mentioned above. Activity in the lip and tongue regions of cortex during this task provides the critical test of the motor theory of speech. In the second task, the participants were visually presented with the speech sounds in text form and asked to silently mime the sounds to themselves. This allowed the researchers to find the lip and tongue cortex for each participant, and gave a baseline against which to compare the brain activation when listening to the same sounds.

The earlier result of Pulvermüller and colleagues would lead us to expect that substantially more activation would be found in the lip cortex when listening to “ba” or “pa” than when listening to “ta” or “da”; however, this is not what Arsenault and Buchsbaum found. Activation of the lip and tongue cortex did not appear to be selective for which kind of speech sound was being heard. This represents an important failure to replicate the earlier study, particularly in the recent context of other high-profile failures to replicate results in psychological science. Good science requires that findings are replicated and checked.

Arsenault and Buchsbaum failed to replicate the Pulvermüller result when looking at the simple amount of increased activation in a region, which was the way the data were analyzed originally. But Arsenault and Buchsbaum decided to go one step further to ensure that there wasn’t something they were missing in the data: they applied multivariate pattern analysis (MVPA) to the data.

MVPA works by training a computer algorithm to classify the patterns of activation on each trial as showing “lip” or “tongue” activation. In training, 90% of the speech miming trials, along with their correct classifications, were offered to the classifier. In other words, as shown in the figure below, the classifier was presented with the activation of the regions of interest in the cortex together with the correct answer: namely, whether this particular activation was paired with a lip or tongue mime. Across trials, the classifier uses the different patterns of activation to “learn” how to classify each trial.

Using the remaining 10% of the speech miming trials, as shown in the next figure, the classifier can be validated to make sure that it can correctly classify speech miming trials it had never seen during training. Arsenault and Buchsbaum found that the MVPA classifier was able to successfully classify 75-90% (depending on brain region) of these new speech miming trials, indicating that the classifier was sensitive to patterns of activation in the motor cortex that corresponded to lip and tongue movements.
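To make the train-then-validate logic concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the data are simulated rather than taken from the study, the "voxels" and the `signal` strength are made up, and the nearest-centroid classifier is a simple stand-in rather than the classifier Arsenault and Buchsbaum actually used.

```python
import random

def make_trials(n_trials, n_voxels, signal, rng):
    """Simulate trials (hypothetical data). Label 0 = lip, 1 = tongue."""
    trials = []
    for i in range(n_trials):
        label = i % 2
        pattern = []
        for v in range(n_voxels):
            # First half of the voxels "prefers" lip trials, second half tongue trials.
            prefers = 0 if v < n_voxels // 2 else 1
            mean = signal if prefers == label else 0.0
            pattern.append(rng.gauss(mean, 1.0))
        trials.append((pattern, label))
    return trials

def train_centroids(trials):
    """'Learn' by averaging the training patterns separately for each label."""
    sums, counts = {}, {}
    for pattern, label in trials:
        if label not in sums:
            sums[label] = [0.0] * len(pattern)
            counts[label] = 0
        sums[label] = [s + x for s, x in zip(sums[label], pattern)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def classify(centroids, pattern):
    """Assign the label whose average pattern is closest to this trial."""
    def sqdist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: sqdist(centroids[label], pattern))

def accuracy(centroids, trials):
    correct = sum(classify(centroids, p) == y for p, y in trials)
    return correct / len(trials)

rng = random.Random(0)
miming = make_trials(n_trials=200, n_voxels=20, signal=1.0, rng=rng)
rng.shuffle(miming)

# 90% of the miming trials for training, the remaining 10% held out.
split = int(0.9 * len(miming))
train, held_out = miming[:split], miming[split:]

centroids = train_centroids(train)
held_out_accuracy = accuracy(centroids, held_out)
print(f"held-out accuracy: {held_out_accuracy:.2f}")
```

The point of the held-out 10% is that the classifier is scored only on trials it never saw while learning, so a high score cannot come from memorizing the training trials.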

The motor theory of speech implies that brain activity associated with speech listening should be similar to brain activity associated with speech production. Arsenault and Buchsbaum reasoned, therefore, that the classifier that had demonstrably learned to classify lip and tongue movements should be able to classify the passive listening trials as well.

When Arsenault and Buchsbaum attempted to classify the passive listening trials using the classifier, however, the classifier only achieved 50% accuracy. Notice that because there were only two possible kinds of trials, lip and tongue trials, this level of accuracy is no better than flipping a coin.
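The coin-flip comparison can be made concrete with a short calculation. The sketch below assumes a hypothetical set of 100 two-choice trials (the source does not give the trial count) and asks how likely a guessing classifier is to reach a given number correct.

```python
from math import comb

def prob_at_least(k, n, p=0.5):
    """Probability of k or more correct out of n trials when each
    guess is independently right with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

n_trials = 100  # hypothetical number of listening trials, for illustration only
for n_correct in (50, 60, 80):
    chance = prob_at_least(n_correct, n_trials)
    print(f"{n_correct}/{n_trials} correct or better by guessing: {chance:.3g}")
```

Under these assumptions, a score near 50% is exactly what guessing produces most of the time, whereas a score in the range the classifier reached on held-out miming trials would be very unlikely to arise by chance.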

Arsenault and Buchsbaum have thus failed to replicate the evidence for the motor theory of speech perception offered earlier by Pulvermüller and colleagues. Notably, the MVPA approach presented by Arsenault and Buchsbaum is actually a better test of the theory; the MVPA classifier looks at whole patterns of activation, instead of just the average change in activation in a brain region.
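A toy example may help show why a pattern-based analysis can, in principle, pick up on information that a regional average misses. The six "voxel" values below are invented for illustration and are not data from either study.

```python
# Hypothetical activation across six voxels in one region, for two conditions.
lip = [1.0, 0.25, 1.0, 0.25, 1.0, 0.25]
tongue = [0.25, 1.0, 0.25, 1.0, 0.25, 1.0]

def mean(xs):
    return sum(xs) / len(xs)

def correlation(a, b):
    """Pearson correlation between two equal-length patterns."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5

# The regional averages are identical, so an average-based analysis sees no difference.
print("mean lip:", mean(lip), "mean tongue:", mean(tongue))

# The voxel-by-voxel patterns are opposite, which a pattern classifier could exploit.
print("pattern correlation:", correlation(lip, tongue))
```

In this constructed case the two conditions are indistinguishable by average activation yet perfectly separable by pattern, which is the sense in which a pattern-based test is the more sensitive of the two.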

In Arsenault and Buchsbaum’s view, then, motor theories of speech perception have failed a critical test: motor cortex activation does not appear to be similar across speech production and speech perception. What might explain the fact that Arsenault and Buchsbaum obtained a different result from Pulvermüller et al.?

If I put my methodologist hat on, I should point out that the result of Pulvermüller and colleagues was a modestly statistically significant result (p<0.02) with a small sample size (N=12). This is precisely the sort of result that one would not expect to replicate. The evidentiary value of such a study is slim, particularly without the methods and analysis being pre-registered. Attempted replications such as the one reported by Arsenault and Buchsbaum are critical if science is to be self-correcting. Methodological advances such as the use of MVPA are icing on the cake.

Article considered in this post:

Arsenault, J. S., and B. R. Buchsbaum. 2016. “No Evidence of Somatotopic Place of Articulation Feature Mapping in Motor Cortex During Passive Speech Perception.” Psychonomic Bulletin & Review. DOI:10.3758/s13423-015-0988-z
