I don’t know about you, but I remember feeling pretty smug when the large Open Science Collaboration report was published, showing that experiments in cognitive psychology were more replicable than experiments in social psychology.

Apologies to my social psychologist friends. Rest assured, I get my just deserts.

The number of participants you need to recruit for your experiment may depend on whether you are interested in:

- detecting whether the independent variable had a statistically significant effect on the dependent variable, or

- getting your sample statistic very close to that of the population.

Different techniques exist to arrive at a required sample size, including power analysis and a priori analysis. Power analysis is sensitive to the expected effect size whereas a priori analysis is not. On the other hand, a priori analysis is sensitive to how close you want the sample mean to be to the population mean whereas power analysis is not.
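To make that contrast concrete, here is a rough Python sketch. It assumes the a priori equation is n = (Zc / f)^2, which matches the worked numbers later in this post. For power analysis it uses the textbook normal-approximation formula for a two-group comparison, which is my choice for illustration and not something taken from the article. The function names are hypothetical.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def power_n_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-group comparison.

    Normal approximation: n = 2 * ((z_alpha + z_power) / d) ** 2.
    This is an illustrative assumption, not the article's method.
    """
    z_alpha = Z.inv_cdf(1 - alpha / 2)
    z_power = Z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size_d) ** 2)


def a_priori_n(f, confidence=0.95):
    """A priori n = (Zc / f) ** 2, rounded up. No effect size is involved."""
    z_c = Z.inv_cdf(0.5 + confidence / 2)
    return math.ceil((z_c / f) ** 2)


# Power analysis: the answer changes with the expected effect size d.
for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: n per group = {power_n_per_group(d)}")

# A priori analysis: the answer changes only with the desired closeness f.
for f in (0.1, 0.2):
    print(f"f = {f}: n = {a_priori_n(f)}")
```

The point is in the function signatures: one takes an effect size, the other takes a desired closeness to the population mean.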

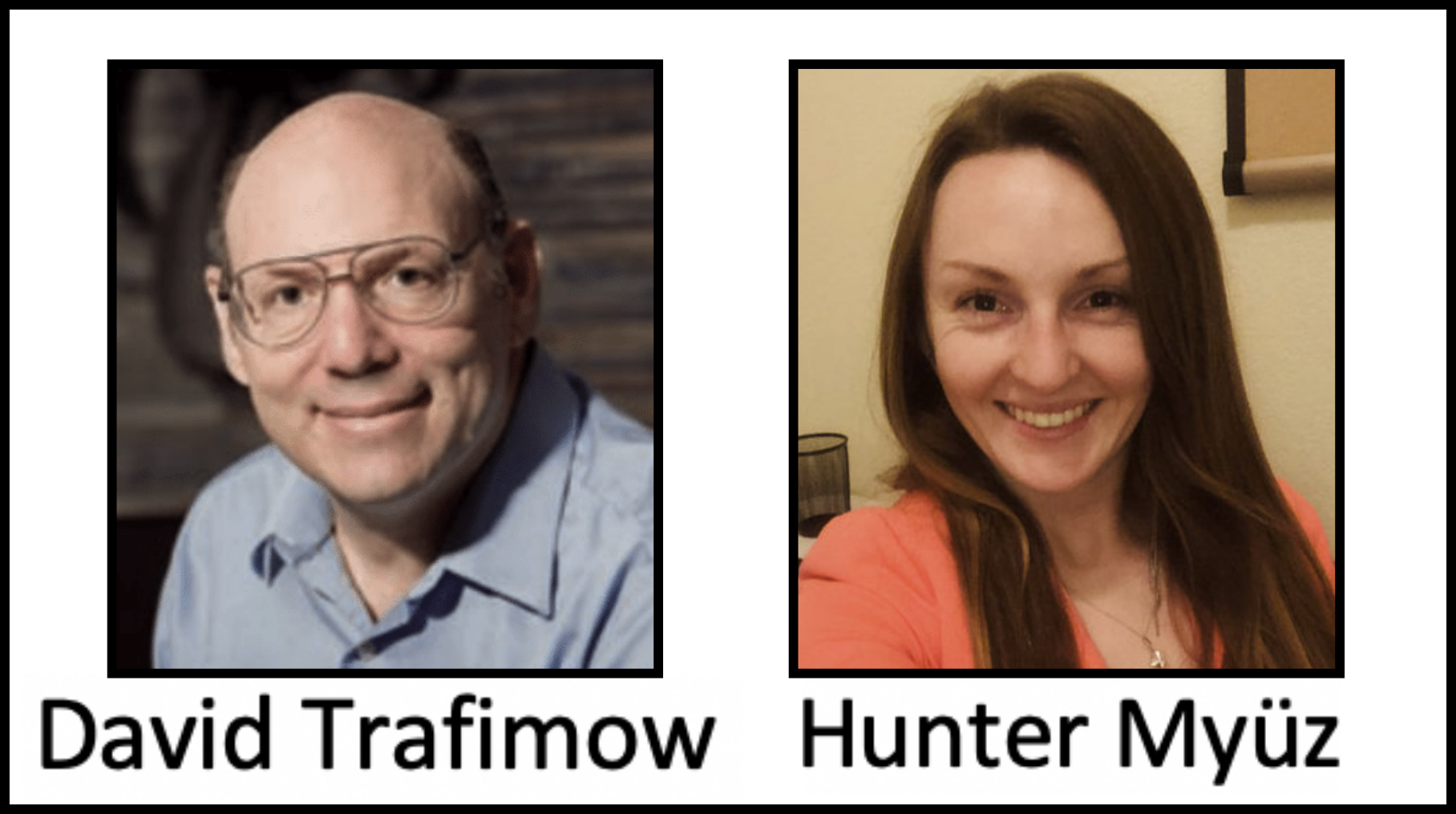

The a priori analysis is a measure of sampling precision. Precision in science is a measure of variability. The a priori procedure was developed by David Trafimow over a series of papers. Recently, David Trafimow and Hunter Myüz authored an article about sampling precision published in the Psychonomic Society journal, Behavior Research Methods.

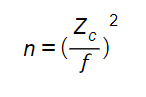

A straightforward a priori equation is given by:

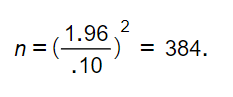

where n is the sample size, Zc is the z-score corresponding to the desired level of confidence, and f is how close, as a fraction of a standard deviation, you want the sample mean to be to the population mean. To use the example provided by Trafimow and Myüz, say you want to be 95% confident that your sample mean will be within .10 standard deviations of the population mean. Then just plug those values into the equation and solve for n,

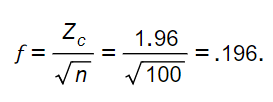

And voilà! You have the sample size needed to get that level of precision: about 384 (384.16 before rounding). Likewise, you could go back to your previous research and work out the sampling precision. Let’s say that you collected data from 100 participants and want to have the same level of confidence. Then plug those values into the equation and solve for f, which works out to 1.96 divided by the square root of 100.

Your precision is .196. Not too shabby. The lower the number, the better the precision.
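If you would rather not do the algebra by hand, the two calculations above can be sketched in a few lines of Python. This assumes the equation is n = (Zc / f)^2, which reproduces the 384 and .196 figures in the text. The function names are mine, not from the article. Note that the unrounded answer is a little over 384, so rounding up to 385 is the conservative choice if you want to guarantee f is no larger than .10.

```python
import math
from statistics import NormalDist


def z_for_confidence(confidence):
    """Two-sided critical z-score, e.g. 0.95 gives roughly 1.96."""
    return NormalDist().inv_cdf(0.5 + confidence / 2)


def required_n(f, confidence=0.95):
    """Unrounded sample size from n = (Zc / f) ** 2."""
    return (z_for_confidence(confidence) / f) ** 2


def precision(n, confidence=0.95):
    """The same equation solved for f: f = Zc / sqrt(n)."""
    return z_for_confidence(confidence) / math.sqrt(n)


n_raw = required_n(0.10)
print(round(n_raw, 2))           # about 384.15 (384.16 if Zc is rounded to 1.96)
print(math.ceil(n_raw))          # 385 when rounded up
print(round(precision(100), 3))  # 0.196
```

To check your own study, call `precision` with your sample size and compare the result against the level of closeness you would have been happy with.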

Using a slightly more complicated equation, Trafimow and Myüz compared precision of research from different subdisciplines of psychology. The goal was to assess which had the highest precision and if the quality of the journal was a factor. The researchers therefore selected papers from a variety of journals that publish papers from various subfields. The subfields were clinical, cognitive, developmental, neuroscience, and social psychology. To account for the quality of the journal, Trafimow and Myüz used impact factors. Impact factors are values that are based on numbers of publications and citations. Legend has it that the higher the number, the better the journal.

This calls for a digression. Google tells me that the journal with the highest impact factor is the New England Journal of Medicine with an impact factor of a whopping 79.26! Psychological Review, by contrast, has an impact factor of 7.23.

Back to business.

Trafimow and Myüz selected 3 to 15 papers from each of three journals per subfield that were ranked at the top or at the bottom. Impact factors ranged between 0.11 and 7.21, and the number of experiments ranged between 3 and 52. All of the papers reported experiments that were between-subjects designs, reported group means, and were published in 2015. Using an a priori equation, Trafimow and Myüz computed median levels of precision.

How did we do, fellow cognitive psychologists? I’m going to let Trafimow and Myüz tell you:

“Our findings indicate that developmental research performs best with respect to precision, whereas cognitive research performs the worst…”

If it makes you feel any better (or worse), they went on to say that “none of the psychology subfields excelled.”

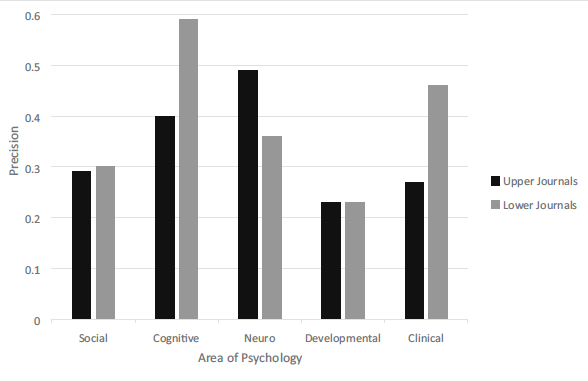

You may expect that the research reported in higher impact journals would have better precision than the research reported in lower impact journals. There were interesting differences across journals and subfields. In the figure below, median precision is plotted as a function of area of psychology. The lower the value, the better the precision.

According to this plot, in terms of precision, the quality of journal only mattered for cognitive and clinical psychology. For precision of experiments in developmental and social psychology, the quality of the journal did not matter much. And, interestingly, precision was better in papers published in lower impact neuroscience journals.

Trafimow and Myüz anticipated possible misinterpretations. For example, they are not claiming that social psychology is “better” than cognitive psychology. About that they wrote, “…although sampling precision is important, it is only one of many criteria that can be used to evaluate psychology subfields.” Trafimow and Myüz also acknowledged that a limitation may be that they focused on between-subjects designs, and experiments in cognitive psychology are often within-subjects designs.

After I sign off here, I plan to use the a priori procedure to see the level of precision of my experiments. Will you?

Psychonomics article focused on in this post:

Trafimow, D., & Myüz, H. A. (2018). The sampling precision of research in five major areas of psychology. Behavior Research Methods, DOI: 10.3758/s13428-018-1173-x