When I was a kid, I had a pair of sunglasses that had little mirrors on the side of the lenses that allowed you to see what was happening behind you. I would walk around my neighborhood feeling so cool, like a bona fide Spy Kid. James Bond had nothing on me. I thought someday a brilliant scientist should make glasses that have a camera on them so that a secret agent could covertly capture the inner workings of a villain’s lair. If that ever happened, it would be totally futuristic. Jump to 2022, and these products are now widely available to anyone in the market for camera glasses.

So you might think that camera glasses have brought us to the pinnacle of eyewear technology. In that case, you would be wrong. In recent years, cognitive psychologists have advanced the field with glasses that track the eye gaze of the wearer. Scientists have used these devices to understand the psychology of social communication and measure the natural movements of eyes during conversation.

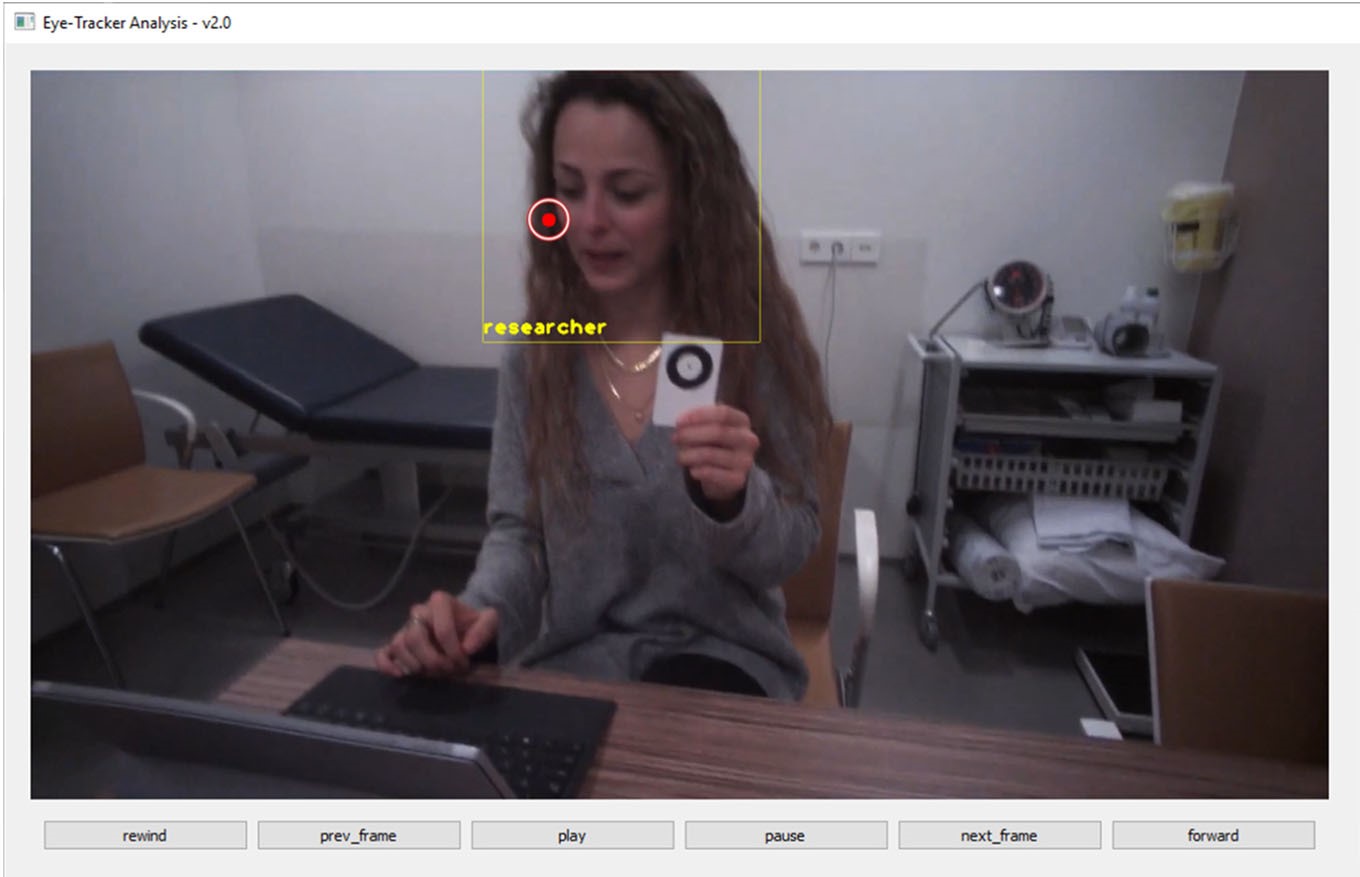

This idea stemmed from the traditional eye gaze techniques that use a computer screen and track where a participant looks around the screen. Advancing this technology to eyewear allows the participant to be mobile in the real world and have natural interactions with other people, all while tracking eye movements. The glasses produce a video of what the participant (i.e., the glasses-wearer) is seeing with a dot that shows where the participant is looking in the visual field.

Various studies have used different approaches for analyzing the eye gaze data. Some use manual frame-by-frame coding, others use heat maps to show levels of gazing, and others manually draw areas of interest where someone may look. With around 25 frames per second, the manual coding of this data can become extremely labor-intensive and time-consuming. Also, when a research assistant manually creates areas of interest, subjective interpretations can affect the reliability of the data.

Computer vision algorithms can be used to streamline the process and make analysis more objective. These algorithms can automatically detect areas of interest. For example, they can process a video and identify objects as cars, people, trees, buildings, etc. Researchers have even devised algorithms that can differentiate between people. If we apply computer vision technology to the mobile eye-tracking data, we may open a brand new door to the world of eye gaze analysis.

Chiara Jongerius and colleagues (pictured below) have indeed opened this door. Earlier this year, Jongerius defended her doctoral thesis at Amsterdam University Medical Centers, which focused on eye contact during patient and physician communication. As part of this work, she published a paper on automatic detection of areas of interest in the Psychonomic Society journal Behavior Research Methods.

In this paper, the authors compared their manual analysis to the automatic computer vision analysis. For the manual analysis, the researchers had to identify the area of interest (in this case, a patient’s face) in each frame of the video. At 25 frames per second, one minute of video contains 1,500 frames and can take nearly an hour to annotate, which works out to a little over two seconds per frame. Meanwhile, the computer vision annotation can process one minute of video in less than one minute. The authors have made the software they designed for this project available here.

Okay, so the computer works faster than human coders, but is it accurate? Jongerius and her colleagues compared the accuracy of these methods and found high agreement scores (Cohen’s κ ranging from 0.85 to 0.98) between human coders and the algorithm. This is based on the duration of face-gaze in the first minute of video across seven videos, an analysis that included 10,526 frames. Furthermore, when two people are visible, the algorithm can distinguish the individuals with more than 94% accuracy. Overall, the algorithm seems to perform very much like human coders.
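For readers who have not met Cohen’s κ before, here is a minimal sketch of how the statistic works in general. It compares how often two coders agree with how often they would agree by chance. The function name and the ten frame labels are made up for illustration; this is not the authors’ analysis code or their data.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two coders labelling the same frames."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both coders must label the same, non-empty set of frames")
    n = len(labels_a)
    # Proportion of frames on which the two coders gave the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each coder's own label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    categories = set(counts_a) | set(counts_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical labels: 1 = gaze on the face, 0 = gaze elsewhere.
human     = [1, 1, 1, 0, 0, 0, 1, 1, 0, 0]
algorithm = [1, 1, 1, 0, 0, 0, 1, 0, 0, 0]
print(round(cohens_kappa(human, algorithm), 2))
```

In this toy case the coders disagree on one frame out of ten, so raw agreement is 0.9, but half of that agreement would be expected by chance, which is why κ comes out lower than the raw figure.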

With this new technology, Jongerius and her colleagues highlight an advancement that is likely to change the future of eye gaze analysis. This work uses mobile eye-tracking devices that can be used in real-life situations. Their software uses full body detection to automatically identify the heads of individuals and determine whether the wearer’s gaze is directed at those targets. This method can be readily applied to many studies focusing on face-to-face communication, and thankfully it reduces processing time, annotator fatigue, and subjectivity in the analysis.
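The last step, deciding whether the gaze lands on a detected head, can be pictured with a small sketch. It assumes that some detector has already produced a head bounding box for each frame and that the glasses report a gaze point in the same pixel coordinates. The `Box` class, the function names, and the sample coordinates are all hypothetical; the published software may work quite differently.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

FPS = 25  # frame rate mentioned in the article

@dataclass(frozen=True)
class Box:
    """Axis-aligned head bounding box in pixel coordinates (hypothetical format)."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom

def face_gaze_flags(
    gaze_points: Sequence[Optional[Tuple[float, float]]],
    head_boxes: Sequence[Optional[Box]],
) -> list:
    """Return one boolean per frame: True if the gaze point falls inside the head box.

    A frame with no gaze sample or no detected head counts as False.
    """
    flags = []
    for point, box in zip(gaze_points, head_boxes):
        flags.append(point is not None and box is not None and box.contains(*point))
    return flags

def face_gaze_seconds(flags: Sequence[bool], fps: int = FPS) -> float:
    """Convert the number of face-gaze frames into a duration in seconds."""
    return sum(flags) / fps

# Four made-up frames: on the face, off the face, no head detected, no gaze sample.
head = Box(left=40, top=30, right=80, bottom=70)
gaze = [(50, 50), (200, 200), (60, 40), None]
boxes = [head, head, None, head]
flags = face_gaze_flags(gaze, boxes)
print(flags, face_gaze_seconds(flags))
```

Summing the per-frame flags and dividing by the frame rate gives a face-gaze duration, the kind of quantity that human coders and the algorithm were compared on.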

More importantly, we are now one step closer to making cognitive psychologists even cooler than James Bond.

Featured Psychonomic Society article:

Jongerius, C., Callemein, T., Goedemé, T., Van Beeck, K., Romijn, J. A., Smets, E. M. A., & Hillen, M. A. (2021). Eye-tracking glasses in face-to-face interactions: Manual versus automated assessment of areas-of-interest. Behavior Research Methods, 53, 2037–2048. https://doi.org/10.3758/s13428-021-01544-2