Imagine I flash you an image of an animal in a savannah scene, say an elephant. You briefly view the image, and then you are asked to report its content while referring to specific details such as the type of animal and its position in the scene (e.g., was it facing the right or the left). This is a rather easy task, as you’ll most certainly remember the elephant facing a large acacia tree on the right. But what if I now flash another image immediately after the first, this time with a different animal (e.g., a giraffe) facing the left, and perhaps even a third or a fourth image? How well would you perform on such a task? While people’s memory for the “gist” of a scene, such as the main object or character embedded in it, is typically quite robust, remembering the specific details of the scene under very brief exposure conditions can be very tricky. In fact, the position of one object (facing, e.g., the right) may interfere with the encoding and/or the consolidation of memory for the position of another object (e.g., facing the left). In such cases, people may confuse one object’s position with another’s, a phenomenon often termed the ‘misbinding’ of features across objects.

Clearly, such misbinding errors have important implications for memory processes. However, pioneering studies in the field of cognitive psychology (such as those conducted by Treisman, to whom an AP&P Special Issue has recently been devoted) have examined the binding mechanisms that operate even before memory consolidation or retrieval processes come into play, that is, at stages associated with real-time recognition of everyday objects. One factor that seems to be highly important in feature binding is spatial location, which serves as a ‘glue’ for the different characteristics of the same object (e.g., its color, shape, orientation, and so on). This gluing factor depends on the serial deployment of visual attention to the object. Namely, according to Treisman and her colleagues, drawing focused attentional resources to an object greatly increases the chances that its various features will be registered and bound within one unified ‘object file’.

A separate, yet somewhat related, question concerns our ability to recognize objects from various viewpoints. Returning to the savannah example, recognizing an elephant is typically easier when viewing it in profile (e.g., facing the left) than from its back. Yet, despite the fact that these two viewpoints elicit very different visual percepts, we are usually able to recognize that they depict an elephant, perhaps even the same elephant. How does our visual system ‘bind’ all of these different visual percepts into one object representation? How do we know that different percepts represent the same object? And are an object’s identity and its orientation represented separately or within a unified visual representation? These questions, too, have a long history and have yielded much controversy over the years. On the face of it, identity and orientation (or viewpoint) seem to be bound together, as we recognize an object as “an elephant facing the right”, or “an elephant viewed from its back”.

Processing the position of an object seems to be an inseparable part of its identification process. But several lines of research have shown that an object’s identity may in fact be represented independently of its position or its orientation. For instance, some patients with brain damage that causes a visual recognition disorder (termed visual agnosia) can identify objects in a variety of orientations, but are unable to explicitly determine these orientations. This and other findings have led some researchers to propose that object identity and orientation may be processed by different mechanisms, and/or in different processing stages. For instance, it’s possible that we first encode an object’s identity (by recognizing local shape features or parts) and activate an orientation-independent object representation. Only at a later stage is the item’s specific orientation processed and integrated with the stored object representation.

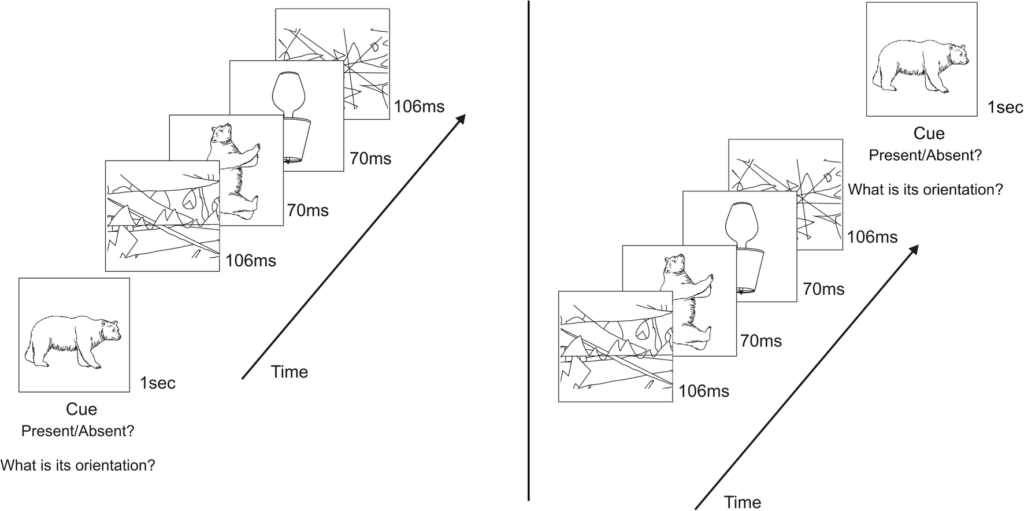

To further investigate whether object identity and orientation are bound together in a unified representation during perception and working-memory encoding, Harris, Harris & Corballis conducted a series of studies in which each experimental trial very briefly presented two different rotated objects. Participants’ task was to detect whether a target object (which was shown upright either before or after these two objects) was present on the trial or not. In addition, they were asked to indicate the target’s orientation, i.e., whether it was rotated 90° clockwise, 90° counterclockwise, or 180° from the correct upright orientation (see figure above).

The results of these experiments showed that participants had incomplete information about the orientation of the objects they had identified correctly. In fact, they reported the correct orientation on only half to two-thirds of the trials in which they identified the objects. Interestingly, as suggested previously, many subjects confused the target’s orientation with that of the distractor. In the example above, they reported the orientation of the lamp instead of that of the bear. In other words, they made frequent binding errors between the objects’ identities and their orientations. This propensity for misbinding of identities and orientations occurred both when observers had prior knowledge of the object they needed to look for (when the target item appeared prior to the two objects; left figure) and when they relied on their memory (when it appeared after the two objects; right figure). Notably, the tendency for misbinding of identity and orientation was dramatically reduced when the two objects appeared simultaneously at different screen locations. That is, participants performed the orientation task quite well if the lamp and the bear (both inverted) were presented side by side, within the same display. This latter finding suggests that presenting objects in different spatial locations offers some protection against featural interference from distractor objects. Put differently, unique spatial locations can protect the integrity of object representations (by ‘gluing’ object features together), as previously suggested by Treisman and her colleagues.

As the authors conclude, these findings “provide clear evidence in favor of the idea that object identity and orientation are perceived independently of each other, but determining the object’s orientation is contingent on having first identified the object.” Thus, it appears that when viewing a snapshot of an elephant, an initial, rapid mechanism first determines the nature, or the ‘gist’, of the object (e.g., this is an animal, or this is an elephant). Only at a second stage do we fully process the specific details of the elephant (it is viewed from the back) and encode these details into visual working memory. Critically, however, under such brief viewing conditions, these details are highly vulnerable to interference from other distracting objects that enter our visual field. We are thus prone to misbinding errors among different objects, such as remembering an elephant from the front, and a lion from the back.

Luckily, paying close attention to an object or an item in a scene increases the probability that this object will be encoded properly, with all features conjoined correctly with one another. Attention thus guards us against erroneous object encoding and/or illusory bindings of visual elements in the scene. In the absence of focal attention, however, salient changes to objects may be overlooked, and the construction of a global scene representation may be largely incomplete.

Psychonomic Society article focused on in this post:

Harris, I. M., Harris, J. A. & Corballis, M. C. (2020). Binding identity and orientation in object recognition. Attention, Perception, & Psychophysics, 82, 153–167. https://doi.org/10.3758/s13414-019-01677-9