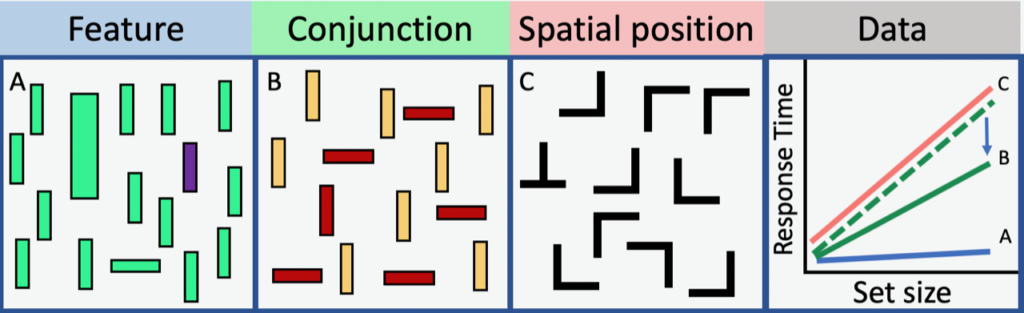

In my post to begin FIT week, I gave my account of the beginnings of my interest in Anne Treisman’s work and in her Feature Integration Theory (FIT). She had hypotheses about the relationship between preattentive features and early cortical processing that I wanted to challenge. As part of that project, since I had not done visual search experiments before, I set about replicating some of Anne’s core findings. To my surprise, I failed to replicate one of the most basic ones. In standard visual search experiments, the observer looks for some target amongst a number of distractor items. Anne had found that, if a target was defined by a unique basic feature like purple among green, vertical among horizontal, or big among small, the whole display could be processed in parallel and the time to find that unique target (the reaction time, RT) would be independent of the number of items in the display (the set size). There is an example in panel A of the figure.

If the target was not defined by a unique basic feature, Treisman held that observers would need to perform a serial, self-terminating search in which attention would be deployed to items in order to permit the binding of different features into a recognizable object. This serial search would be required for a conjunction of two features like the colors and orientations in panel B of the figure. The same type of search would be needed to make sense of the relative spatial positions of features, like the vertical and horizontal segments that make up the Ts and Ls in panel C. We could replicate the feature and spatial position results, but our conjunction searches were consistently too efficient. Our data would look like the solid green line as opposed to the dashed line that Anne would have predicted. Obviously those ‘data’ are purely schematic.
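To make the two predictions concrete, here is a minimal sketch of the RT × set size functions described above. It is an illustration, not a model from any of the papers: the function names and the timing values (`base_ms`, `per_item_ms`) are hypothetical, and it assumes target-present trials with items inspected in random order.

```python
def expected_items_inspected(set_size: int) -> float:
    """Mean number of items checked before a serial, self-terminating
    search reaches the target (target present, random inspection order)."""
    return (set_size + 1) / 2


def predicted_rt_ms(set_size: int, serial: bool,
                    base_ms: float = 400.0,      # hypothetical non-search time
                    per_item_ms: float = 50.0):  # hypothetical time per inspected item
    """Schematic reaction time for a target-present trial."""
    if not serial:
        # Parallel feature search: RT does not depend on set size.
        return base_ms
    # Serial search: RT grows with the expected number of inspected items.
    return base_ms + per_item_ms * expected_items_inspected(set_size)


for n in (4, 8, 12):
    print(n, predicted_rt_ms(n, serial=False), predicted_rt_ms(n, serial=True))
```

Under these assumptions the serial function rises by half of `per_item_ms` for each added item, while the parallel function stays flat. A conjunction search that is "too efficient" would fall between the two lines.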

Why were our conjunction slopes coming out shallower than Anne’s? Back in those pre-internet days, Anne and I were in touch by phone and even, I think, by mail (the paper kind). I should note that she was a hugely supportive senior colleague to me as we tried to figure out what was going on. We had all sorts of theories. Was there something different about East Coast vs. West Coast observers? Could my observers use “The Force” in a way that her observers could not? Don’t laugh. There is evidence that different states of mind can alter search (here’s an example). In the end, the answer turned out to be technology. Anne’s experiments were done with stimuli that she had hand-drawn with markers on white note cards and presented in a tachistoscope. Actually, if memory serves, she said that she put her young children to work on stimulus generation. I was using newer technology, a color monitor. That monitor was part of a Subroc-3D video arcade game released in 1982 by Sega (see images below). I had bought it (used) with my first NIH grant because it had a cool spinning shutter mechanism that allowed one to present an image with stereoscopic depth to the player. Even after we modified it to do rather more staid binocular vision experiments, you could still shoot down alien space ships. If you were a good subject, we didn’t even make you put money in the machine.

What turned out to be important was that my display was bigger, brighter, and had higher contrast than Treisman’s. My stimuli were simply more salient (see Exp 7). With more vivid features, my observers could ‘guide’ their attention in ways that Anne’s could not. So, in the conjunction example, above, observers can use information from Treisman’s preattentive stage of processing to guide attention to red stuffs and vertical stuffs. The intersection of those two sets of stuffs will be a good place to look for a red vertical object.
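The guidance idea can be sketched in a few lines. This is a deliberately noise-free toy, not an implementation of any published model: the `Item` class, the scoring rule, and the distractor types (red horizontals and green verticals) are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    color: str
    orientation: str


def guidance_score(item: Item, target: Item) -> int:
    """Count how many of the target's features this item shares (0, 1, or 2)."""
    return int(item.color == target.color) + int(item.orientation == target.orientation)


def attention_order(display: list[Item], target: Item) -> list[Item]:
    """Visit items from highest to lowest guidance score."""
    return sorted(display, key=lambda it: guidance_score(it, target), reverse=True)


target = Item("red", "vertical")
display = [
    Item("green", "vertical"),
    Item("red", "horizontal"),
    Item("red", "vertical"),   # the target
    Item("green", "vertical"),
]
order = attention_order(display, target)
print(order[0])
```

Each distractor shares only one feature with the target, so the item at the intersection of "red" and "vertical" gets the highest score and is visited first. With noisy feature signals, the ranking would be imperfect, which is one way to picture slopes that are shallower than a purely serial search but not flat.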

This is just one of the many times when a change in what I could display changed the science that I could do. In many cases, these advances in technology have allowed us to get closer to the real-world questions that gave the initial impetus to our research. Let’s stick with the example of visual search. As the opening paragraphs of most visual search papers will tell you, we are not actually that interested in the ability to find red vertical lines or Ts among Ls. We want to know how you find the cat in the living room or the tumor in the x-ray. A collection of items, scattered randomly over an otherwise blank screen, can be used to elucidate many of the important principles of real-world search, but it is good to have computers with the speed, memory, and graphics capabilities to present real scenes or to create virtual ones. With those capabilities, we can ask questions that we had not really considered before. For example, over the last few years, I have done a series of studies of “hybrid search”. In hybrid search, observers look through a visual display for any of a number of target types, held in memory. You are doing a form of hybrid search when you look at an old picture to see if any of the members of your high school team are in there. People are not particularly good at holding multiple geometric forms in memory, but it is easy to hold dozens of images of real objects in memory. Thus, because it is now easy to use photographs of real objects as stimuli, I was able to study hybrid search tasks in which observers looked for instances of 100 different targets.

Beyond advances in computer graphics, vast improvements in other technologies have opened new areas of study. I swore off eye-tracking early in my career because, at that time, you needed to devote your life to the care and feeding of your eye tracker. Now, it is easy and I have unsworn myself. Similarly, though I am not a practitioner, new neuroimaging methods suggest new research questions, as do computational modeling approaches and the use of big data from sources like surveillance video.

All of which is to say, it is time for the next special issue. This time, Cognitive Research: Principles and Implications (CRPI) is hosting a special issue on Visual Search in Real-World & Applied Contexts. CRPI is the peer-reviewed, open-access journal of the Psychonomic Society. Its mission is to publish use-inspired basic research: fundamental cognitive research that grows from hypotheses about real-world problems. As with all Psychonomic Society journals, submissions to CRPI are subject to rigorous peer review. Trafton Drew, Lauren Williams, and I are eager for the next set of interesting papers. The deadline is October 1. What do you have for us? (Send me an email, if you have questions about a possible submission: jwolfe@bwh.harvard.edu).

I am hoping that this new special issue, like the current special issues, honoring Treisman’s FIT, will be an interesting collection, reflecting the diverse topics and methods that make up current work in visual search. I will be totally unsurprised when I find that many of these new papers will cite Treisman and Gelade (1980).