We keep track of multiple objects every day. When we drive, we need to keep track of the cyclist near the curb, the dump truck bearing down on us from behind, and the lost tourist in front of us who is signaling turns at random. When we are on the beach on a family outing, we keep track of the children in the water, and if we are soccer referees we keep track of the players on both teams.

How do we do this? Where do we allocate our attention, and where do we look? Do our eyes track our attention exactly or do they lag behind? Or do they move ahead?

A recent article in the Psychonomic Society’s journal Attention, Perception, & Psychophysics addressed this issue. Researchers Jiří Lukavský and Filip Děchtěrenko provided a video abstract that sums up the problem and their paradigm really nicely, so let’s begin with that one:

[youtube https://www.youtube.com/watch?v=TkIbYg04xF4]

Let’s consider their method and results in a bit more detail. As shown in the video, their basic task consisted of 8 grey disks that were moving quasi-randomly across a computer screen, bouncing off each other and off the screen boundaries. Before each trial commenced, 4 target disks were identified by highlighting them in green for 2 seconds. After that, the targets also turned grey and the 8 disks started moving for 10 seconds. At the end of that period, the disks stopped moving and the participant had to select the 4 targets by mouse click. Throughout, the participants’ eye movements were recorded. As we have noted several times on this blog, eye movements provide us with non-intrusive access to our cognition, and they formed the principal measure in Lukavský and Děchtěrenko’s study.
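To make the trial structure concrete, here is a minimal Python sketch of one trial's motion phase. Only the counts and duration (8 disks, 4 targets, 10 seconds of motion) come from the study; the screen size, disk radius, speed, and frame rate are hypothetical values chosen for illustration. The physics is also simplified: disks bounce off the screen edges but pass through each other, whereas the real stimuli had them bouncing off each other too.

```python
import math
import random

# Hypothetical display and timing parameters (not from the study)
WIDTH, HEIGHT = 800.0, 600.0   # screen size in pixels
RADIUS = 15.0                  # disk radius in pixels
SPEED = 100.0                  # disk speed in pixels per second
FPS = 50                       # animation frames per second
# Counts and duration as described for the basic task
N_DISKS, N_TARGETS, MOTION_S = 8, 4, 10


def simulate_trial(seed):
    """Return (targets, frames) for one trial.

    targets: sorted indices of the disks highlighted before motion starts.
    frames:  one list of (x, y) disk centres per animation frame.
    Simplification: disks reflect off the screen edges only; disk-disk
    collisions are not modelled.
    """
    rng = random.Random(seed)
    pos, vel = [], []
    for _ in range(N_DISKS):
        pos.append([rng.uniform(RADIUS, WIDTH - RADIUS),
                    rng.uniform(RADIUS, HEIGHT - RADIUS)])
        angle = rng.uniform(0.0, 2.0 * math.pi)
        vel.append([SPEED * math.cos(angle), SPEED * math.sin(angle)])
    targets = sorted(rng.sample(range(N_DISKS), N_TARGETS))

    dt = 1.0 / FPS
    frames = []
    for _ in range(MOTION_S * FPS):
        for p, v in zip(pos, vel):
            for axis, limit in ((0, WIDTH), (1, HEIGHT)):
                p[axis] += v[axis] * dt
                # Mirror the disk back inside the screen and reverse that velocity component
                if p[axis] < RADIUS:
                    p[axis] = 2 * RADIUS - p[axis]
                    v[axis] = -v[axis]
                elif p[axis] > limit - RADIUS:
                    p[axis] = 2 * (limit - RADIUS) - p[axis]
                    v[axis] = -v[axis]
        frames.append([tuple(p) for p in pos])
    return targets, frames


targets, frames = simulate_trial(seed=1)
print(len(frames), "frames; targets:", targets)
```

Seeding the generator per trial means the exact same motion sequence can be regenerated later, which is what the repetitions described next require.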

The key innovation introduced by Lukavský and Děchtěrenko was that the sequence of trials contained exact repetitions of motion sequences as well as reversals of those exact sequences. That is, some of the same motion patterns were presented in forward and backward order on different trials.
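A backward presentation is simply the forward frames played in reverse order, so frame t of the backward version is frame N−1−t of the forward version. The sketch below shows that, plus a hypothetical way of building a shuffled trial list in which each motion sequence recurs in both directions; the function names and the repetition counts are illustrative assumptions, not the study's actual design.

```python
import random


def reverse_sequence(frames):
    """Backward presentation: the same frames shown in reverse order."""
    return frames[::-1]


def build_schedule(sequence_ids, n_forward, n_backward, seed=0):
    """Hypothetical trial list in which every motion sequence recurs
    n_forward times forward and n_backward times backward, shuffled."""
    trials = []
    for sid in sequence_ids:
        trials += [(sid, "forward")] * n_forward
        trials += [(sid, "backward")] * n_backward
    random.Random(seed).shuffle(trials)
    return trials


# Frame t of the backward version is frame N-1-t of the forward version.
forward = ["f0", "f1", "f2", "f3"]
backward = reverse_sequence(forward)
assert all(backward[t] == forward[len(forward) - 1 - t] for t in range(len(forward)))

schedule = build_schedule(["A", "B"], n_forward=2, n_backward=2)
print(len(schedule))  # → 8
```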

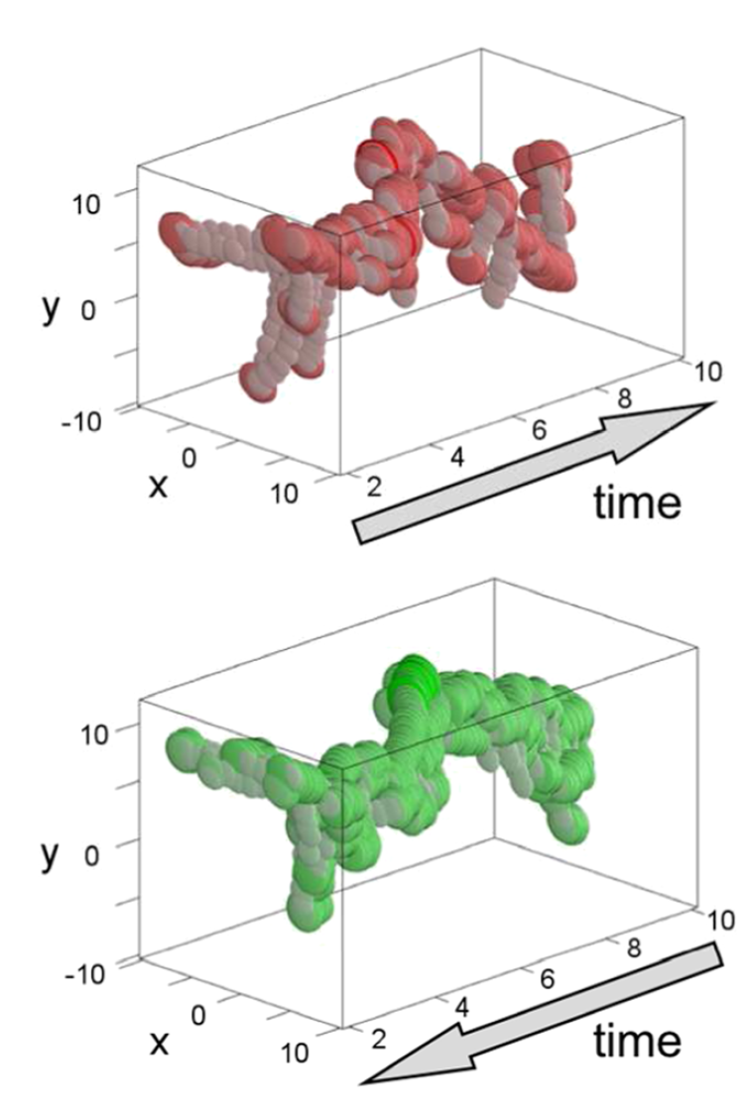

Lukavský and Děchtěrenko analyzed only those trials on which participants correctly reported all 4 targets at the end, so the eye movements recorded on them reflect successful tracking of the correct objects. The analysis focused on comparing the forward and backward presentations of the same motion sequence. The figure below shows the point clouds of the observed eye movements for forward (red) and backward (green) presentations of the same stimulus.

Note that although the eye movements are plotted along a common temporal axis, the observations in the bottom panel (green) actually unfolded in the reverse direction. Each observation in each panel represents the gaze position (in X-Y coordinates relative to the center of the screen) at a particular point in time.
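Putting a backward trial on the forward time axis amounts to reversing its gaze record, and center-relative coordinates are a simple offset. The sketch below assumes raw gaze samples arrive in screen pixels with the origin at the top-left corner (a common eye-tracker convention, but an assumption here); both function names are hypothetical.

```python
def to_center_coords(samples, width, height):
    """Shift raw gaze samples (pixels, origin at top-left, assumed)
    so that (0, 0) is the centre of the screen."""
    cx, cy = width / 2, height / 2
    return [(x - cx, y - cy) for x, y in samples]


def align_backward(samples):
    """Reverse a backward trial's gaze record so that sample i lines up
    with frame i of the forward presentation."""
    return samples[::-1]


# Made-up 3-sample backward record on an 800 x 600 screen
raw = [(400, 300), (420, 310), (450, 330)]
print(align_backward(to_center_coords(raw, 800, 600)))
# → [(50.0, 30.0), (20.0, 10.0), (0.0, 0.0)]
```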

Once those point clouds were obtained, the next question was how gazes were temporally aligned with the objects being tracked. This is tricky to answer: because people were tracking several objects at once, it is very difficult to identify which particular target(s), out of the 4, their eyes were following at any given moment.

Lukavský and Děchtěrenko resolved this problem by statistical means: They computed the correlations between the gazes that were obtained on forward and backward trials at each point in time. For example, at exactly 2 seconds into a trial (which in the backward condition would have occurred after 8 seconds—remember that the time axis is flipped for those trials), the X-Y values of all points in a slice of the red cloud above can be correlated with the X-Y values in the same slice in the green cloud. If people’s eyes tracked the objects exactly, then it shouldn’t matter whether the motion unfolded in one direction or the other, and the correlation between the two sets of points should be perfect (or nearly so).
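The description above leaves open how the points within a slice are paired, so this Python sketch makes one explicit assumption: each slice is summarized by the mean gaze position across trials, the forward and backward slice means are correlated across time separately for X and Y, and the two correlations are averaged. This is an illustration of the logic, not the authors' documented procedure. The smooth `scene` path, the noise level, and the trial count are all hypothetical. With gaze sitting exactly on the displayed objects plus noise, the correlation comes out close to 1.

```python
import math
import random

FPS, N = 50, 500               # hypothetical: 50 gaze samples/s over a 10-s trial
N_TRIALS, NOISE_SD = 10, 20.0  # hypothetical trials per direction and gaze noise (px)


def scene(t):
    """Hypothetical smooth (x, y) path the eyes are assumed to follow at time t (s)."""
    return (200 * math.sin(2 * math.pi * 0.3 * t),
            150 * math.sin(2 * math.pi * 0.2 * t + 1))


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def slice_means(trials):
    """Mean gaze (x, y) across trials at each time point, i.e. one value per slice."""
    n = len(trials)
    return [(sum(tr[t][0] for tr in trials) / n,
             sum(tr[t][1] for tr in trials) / n)
            for t in range(len(trials[0]))]


def forward_backward_r(forward, backward_aligned):
    """Average of the X and Y correlations between forward and backward slice means."""
    fx, fy = zip(*slice_means(forward))
    bx, by = zip(*slice_means(backward_aligned))
    return (pearson(fx, bx) + pearson(fy, by)) / 2


rng = random.Random(0)


def noisy(p):
    """Add independent Gaussian gaze noise to an (x, y) position."""
    return (p[0] + rng.gauss(0, NOISE_SD), p[1] + rng.gauss(0, NOISE_SD))


# Perfect tracking: gaze sits on whatever frame is on screen, plus noise.
forward = [[noisy(scene(s / FPS)) for s in range(N)] for _ in range(N_TRIALS)]
backward = [[noisy(scene((N - 1 - s) / FPS)) for s in range(N)] for _ in range(N_TRIALS)]
# Flip each backward record so that sample t faces forward frame t.
backward_aligned = [trial[::-1] for trial in backward]

r = forward_backward_r(forward, backward_aligned)
print(f"r = {r:.3f}")  # close to 1 when there is no lag
```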

But what if people’s eye movements either anticipated or lagged the objects? In that case, the red and green point clouds would be offset in time, because the direction in which the motion unfolded would matter: a gaze that trails the objects on a forward trial will appear to run ahead of them once the time axis of the backward trial is flipped. To test this possibility, Lukavský and Děchtěrenko computed the correlations between the red and green clouds repeatedly, shifting one of the observed sequences forward and backward in time by a small amount at each step.
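The shift analysis can be sketched in a toy setting: a hypothetical smooth path, a hypothetical 100 Hz sampling rate, and noise-free gaze so the peak is exact. Gaze trails the displayed scene by a fixed number of samples in both directions; the backward record is flipped onto the forward time axis, and the X and Y correlations are recomputed for a range of shifts. One property of this model is worth noting: because the forward lag turns into an apparent lead after flipping, the two records end up offset by twice the lag, so the peak shift has to be halved to recover the lag itself.

```python
import math

FPS = 100   # hypothetical sampling rate: one gaze sample every 10 ms
N = 1000    # samples in a 10-s trial


def scene(t):
    """Hypothetical smooth (x, y) path standing in for the tracked targets at time t (s)."""
    return (200 * math.sin(2 * math.pi * 0.3 * t),
            150 * math.sin(2 * math.pi * 0.2 * t + 1))


def gaze_trial(frame_index, lag):
    """Gaze that shows the displayed frame `lag` samples late.

    frame_index(s) is the scene frame on screen at sample s, so forward and
    backward trials differ only in this mapping. The toy path extends before
    the trial start, so no edge handling is needed.
    """
    return [scene(frame_index(s - lag) / FPS) for s in range(N)]


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def lagged_r(f, b, k):
    """Correlation of f[t + k] with b[t] over the time points where both exist."""
    if k >= 0:
        return pearson(f[k:], b[:len(b) - k])
    return pearson(f[:len(f) + k], b[-k:])


def shift_curve(forward, backward_aligned, max_shift):
    """Mean of the X and Y lagged correlations for every shift in [-max_shift, max_shift]."""
    fx, fy = zip(*forward)
    bx, by = zip(*backward_aligned)
    return {k: (lagged_r(fx, bx, k) + lagged_r(fy, by, k)) / 2
            for k in range(-max_shift, max_shift + 1)}


true_lag = 10  # 10 samples = 100 ms at the assumed 100 Hz
forward = gaze_trial(lambda s: s, true_lag)           # scene plays forward
backward = gaze_trial(lambda s: N - 1 - s, true_lag)  # same scene, reversed
backward_aligned = backward[::-1]                     # flip onto the forward time axis

curve = shift_curve(forward, backward_aligned, max_shift=40)
best = max(curve, key=curve.get)
print(best, best / 2 * 1000 / FPS)  # → 20 100.0  (peak shift in samples, implied lag in ms)
```

In this model the correlation at zero shift is high but not maximal, which is the same qualitative signature the analysis looks for in real data.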

The figure below plots the observed correlations as a function of the time shift applied between the forward and backward point clouds.

Note that the zero point along the abscissa (X-axis) represents the situation in which the two series of eye movements are completely synchronized. As noted earlier, if people’s eyes tracked the objects perfectly, then we would expect the correlation to be maximal at that point.

However, it is clear from the figure that the correlation does not peak at the zero point: instead, it peaks at a point that corresponds to the eyes lagging behind by 100 milliseconds (1/10th of a second). That is, whatever our eyes are doing while we are tracking multiple objects, our gazes (on average) lag 100 ms behind the motion of the objects. This effect was remarkably robust: it was present in every participant.

In a follow-up experiment, the same lag appeared even when only two objects had to be tracked. This is an interesting finding because one might explain the lag by assuming that people cannot anticipate the rather complex motion of 4 targets, so their eyes must lag behind. With only 2 targets, however, predicting the motion is much easier, so the lag should have shrunk. It did not.

Similarly, in a third experiment, the lag was more or less unaffected by how predictable the targets’ motion was. It was only when the motion became totally chaotic—thus preventing any prediction—that the lag increased significantly.

The figure below summarizes all experiments. All the lag values fall within the same statistical window, except for the very last one (the chaotic-motion condition).

Taken together, the data suggest that people’s eye movements are based on what they have observed in the past, not what they are looking at now or what they expect to see in the future.

Fortunately, this lag has not impaired the ability of air traffic controllers to keep track of numerous aircraft and keep them safely separated.

Article focused on in this post:

Lukavský, J., & Děchtěrenko, F. (2016). Gaze position lagging behind scene content in multiple object tracking: Evidence from forward and backward presentations. Attention, Perception, & Psychophysics, 78, 2456-2468. DOI: 10.3758/s13414-016-1178-4.